
Renowned photonics researcher and Stanford professor honored for pioneering work in computational electromagnetics and nanophotonics

[Boston, April 30, 2025] – Flexcompute proudly congratulates Dr. Shanhui Fan, co-founder of Flexcompute and a world-renowned expert in photonics and electromagnetism, on his election to the U.S. National Academy of Sciences (NAS), one of the highest honors in American science.

Dr. Fan is a professor of electrical engineering and applied physics at Stanford University and has played a pivotal role in shaping the theoretical and computational foundations of modern photonics. His research spans nanophotonics, photonic crystals, metamaterials, plasmonics and solar energy conversion, with a strong emphasis on computational methods, a core strength that he brought to Flexcompute.

As co-founder of Flexcompute, Dr. Fan helped establish the company’s mission to revolutionize scientific computing, delivering breakthrough simulation performance for electromagnetics, fluid dynamics and beyond. His vision continues to drive Flexcompute’s innovation at the intersection of physics and high-performance computing.

Dr. Fan earned his Ph.D. from the Massachusetts Institute of Technology under the mentorship of John Joannopoulos. With an h-index of 177, he is one of the most highly cited researchers in his field.

“Shanhui’s election into the National Academy of Sciences is not only a recognition of his extraordinary scientific achievements, but also a validation of the deep scientific principles that guide our work at Flexcompute,” said Vera Yang, Flexcompute Co-Founder and President. “We’re honored to have him as a co-founder and collaborator.”

Dr. Fan is one of 120 new U.S.-based members elected in 2025 for distinguished and continuing achievements in original research. For more information on the NAS 2025 elections, visit nasonline.org.

Innovation is hard, but it’s non-negotiable.

At Flexcompute, we just celebrated 10 years of leading innovation in physics simulation. It’s been an exhilarating journey: assembling a team of the brightest minds, building a full-stack software-hardware architecture for modern GPUs, designing algorithms from scratch, and cutting simulation time without sacrificing accuracy.

Starting with a revolutionary idea is one thing.

Pushing beyond it, again and again, at startup speed is where true innovation lives.

Our mission is simple: make hardware innovation as easy as software innovation.

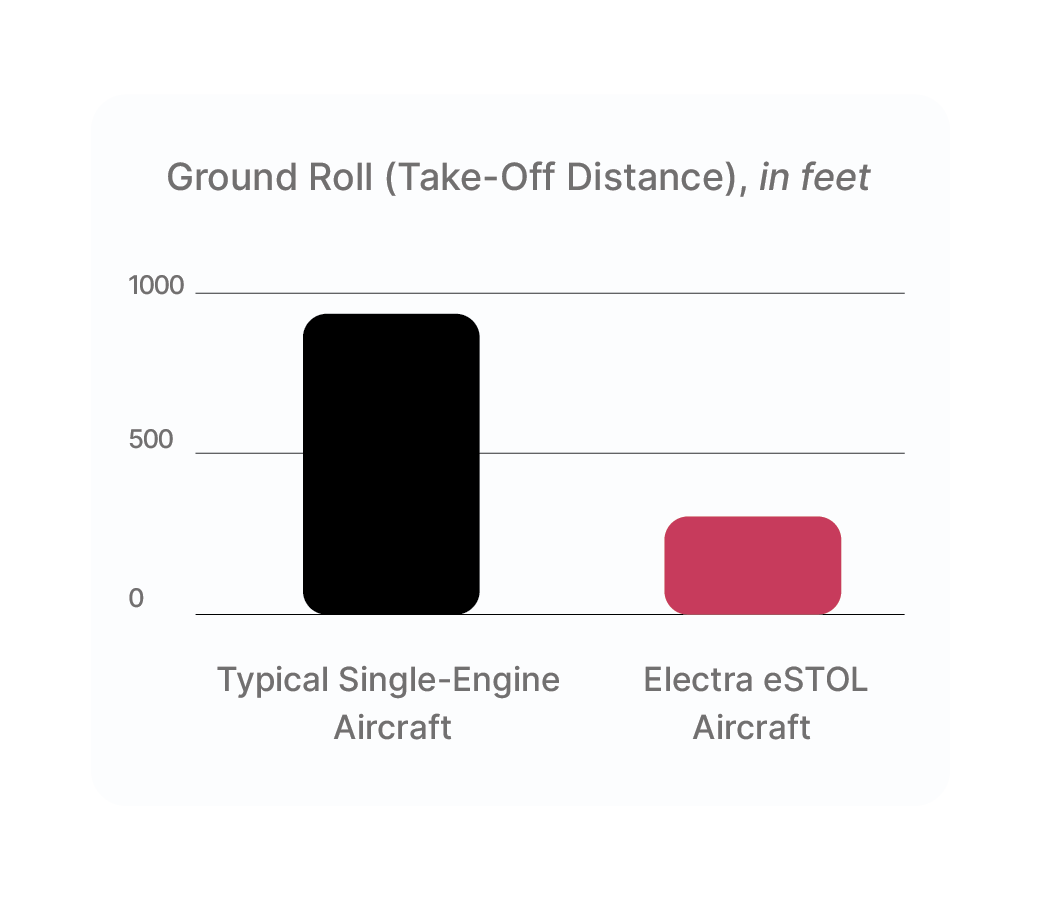

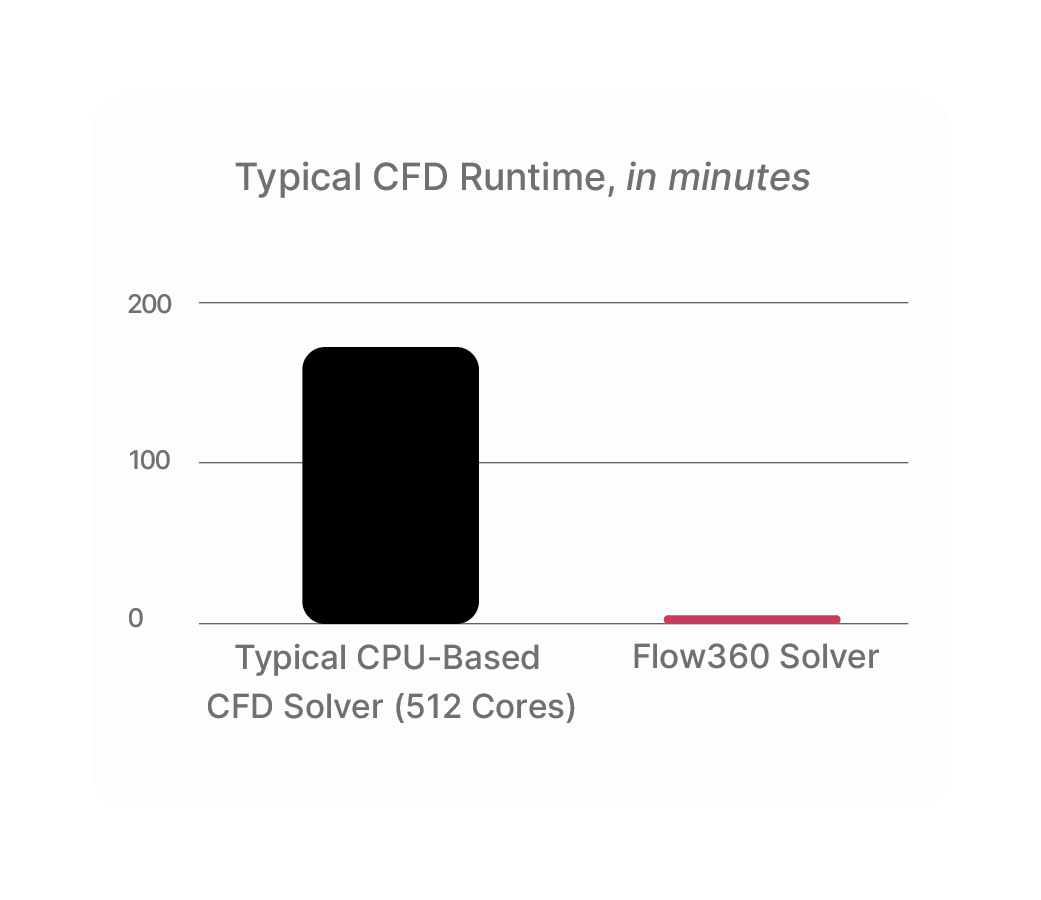

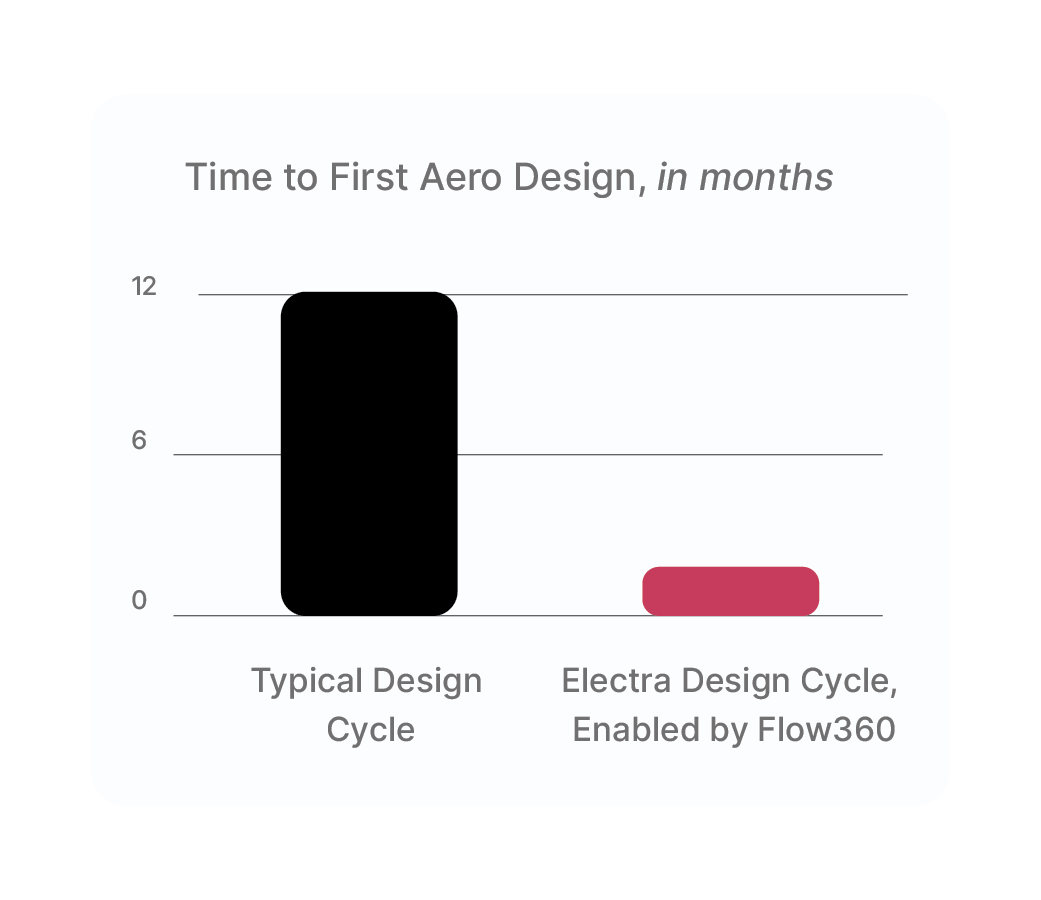

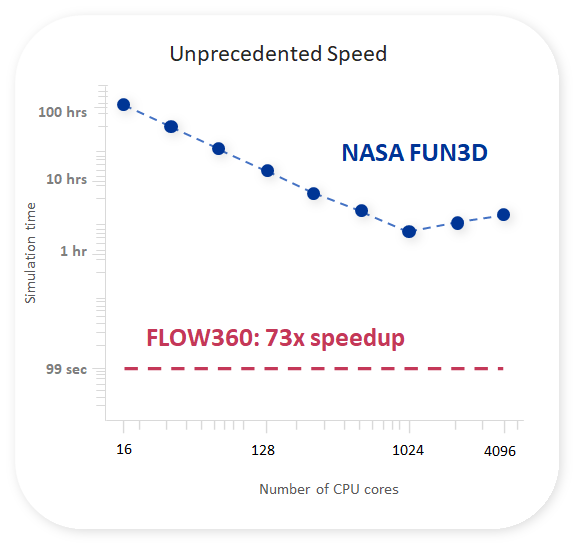

That’s why we built Flow360, the world’s first GPU-native Computational Fluid Dynamics (CFD) solver, delivering 10–100 times faster performance than traditional CPU-based tools.

But we didn’t stop there.

Flow360 is not just fast, it keeps getting faster.

Speeding Up What’s Already Fast

By late 2022, most obvious speed-up opportunities had already been captured. Improving beyond that point wasn’t easy. It demanded deep innovation and smarter, more adaptive methods.

Yet, over the past two years, the Flexcompute team pushed even harder, making Flow360 significantly faster once again.

Here’s what we achieved:

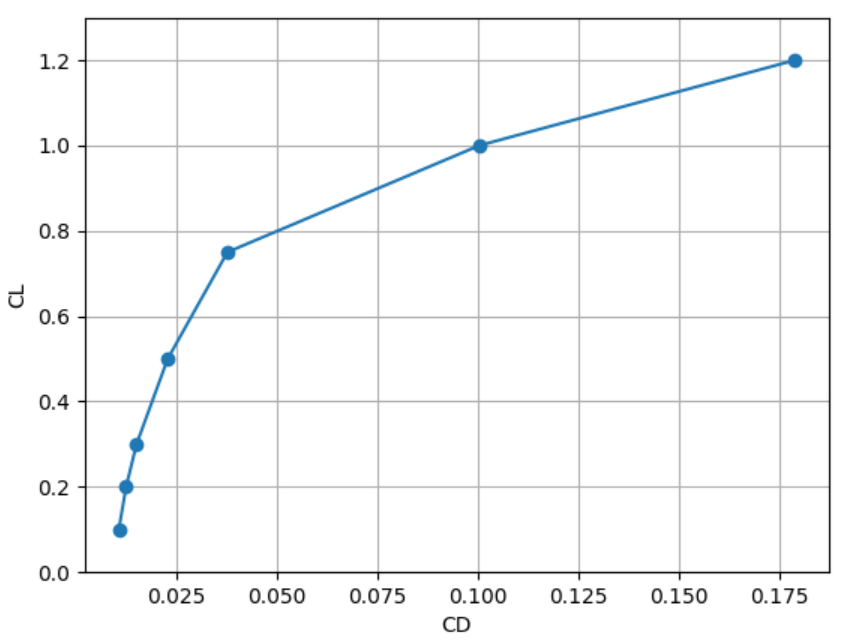

Real-World Performance: 3X Faster in Two Years on Same Hardware

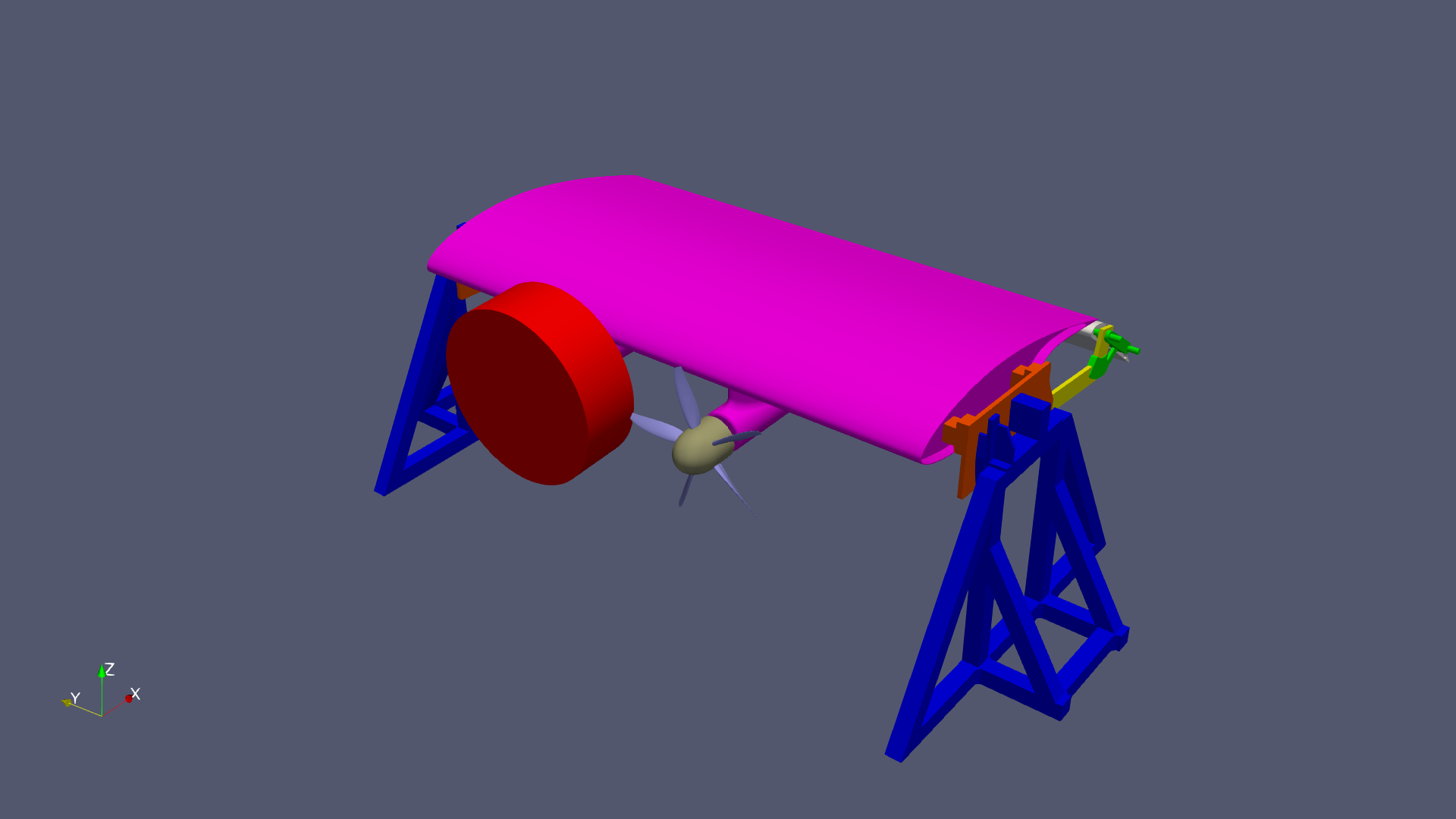

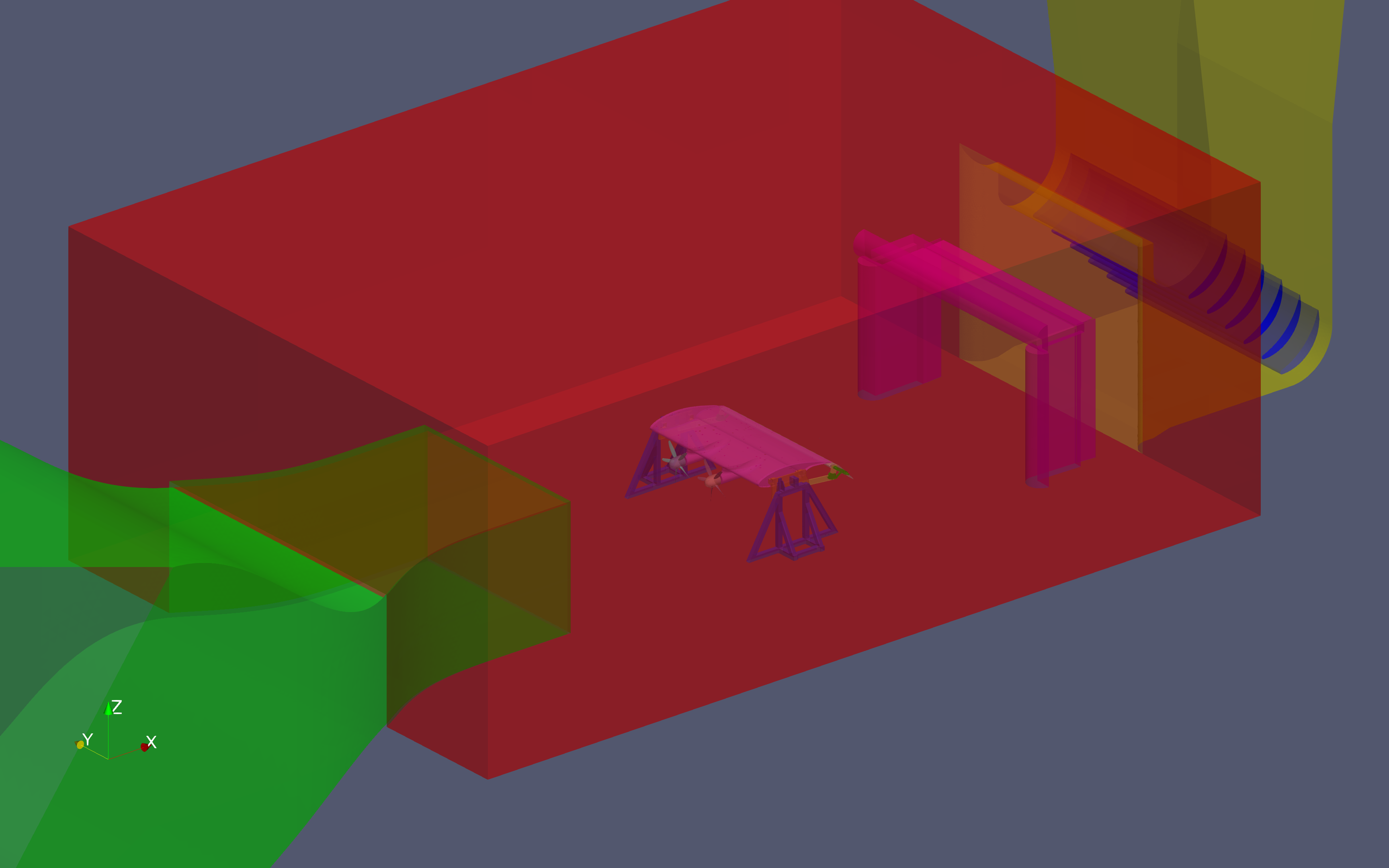

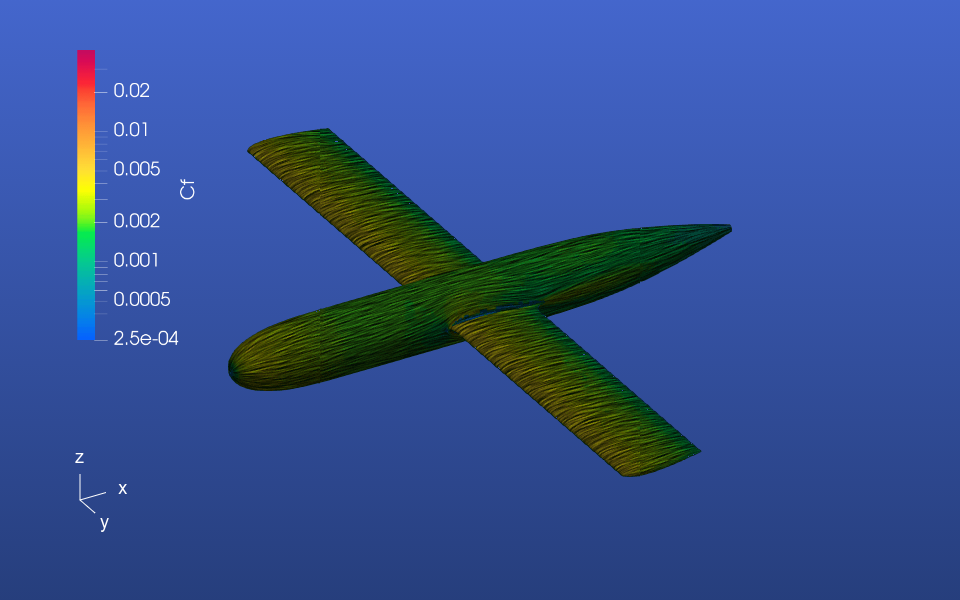

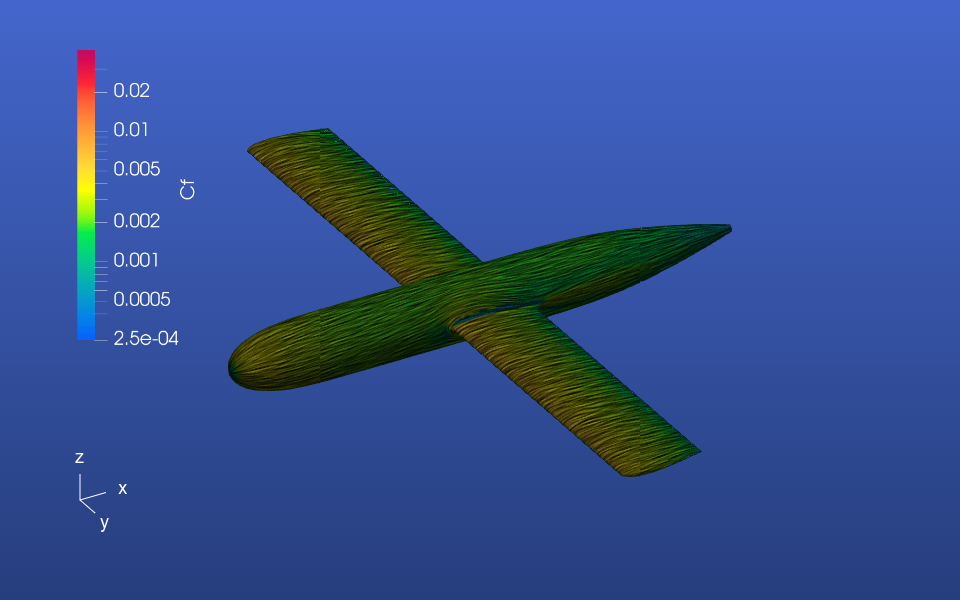

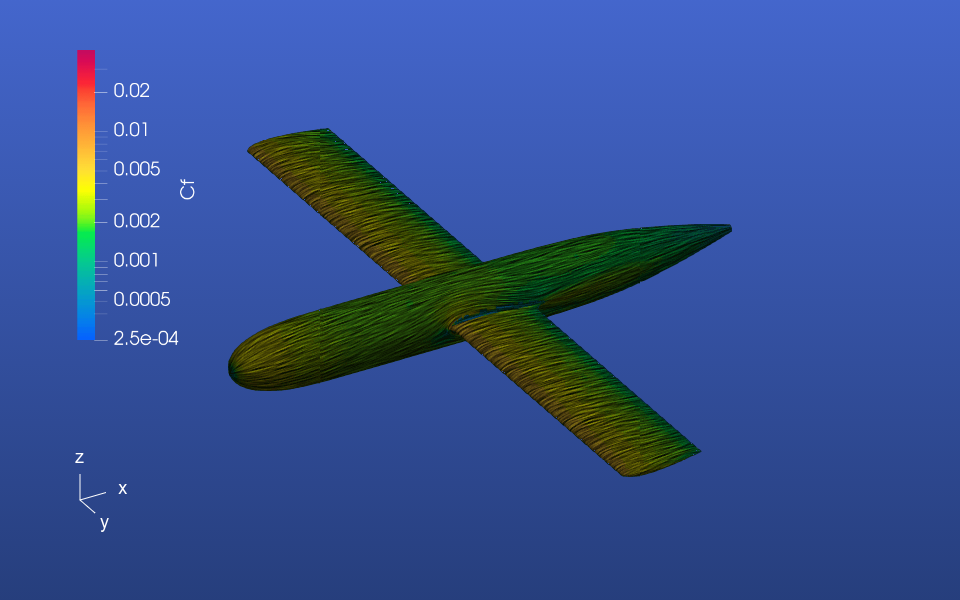

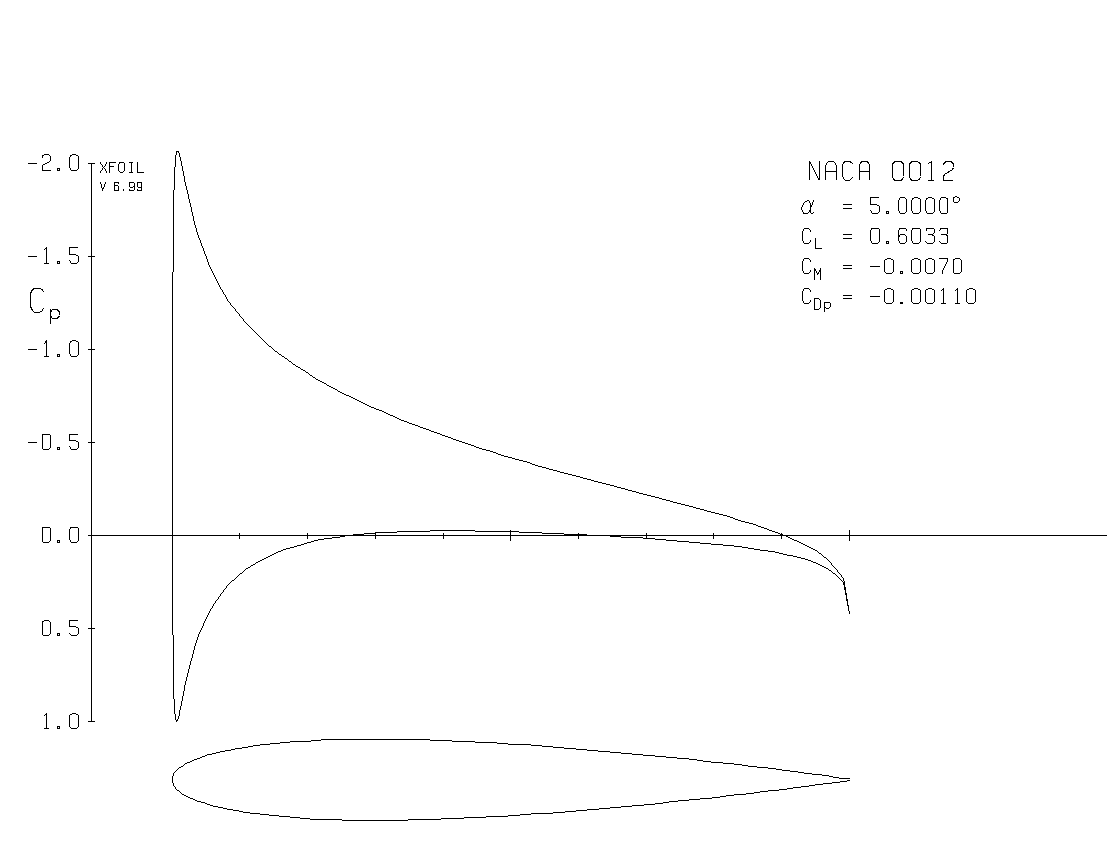

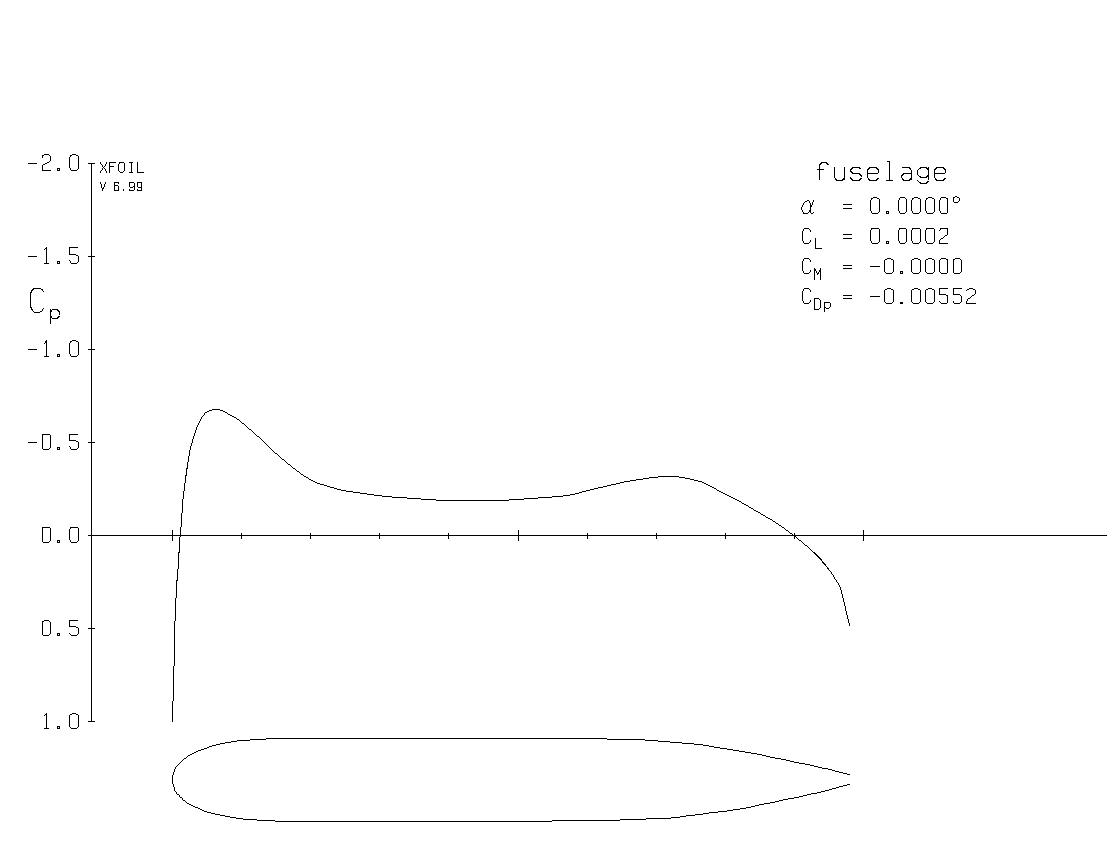

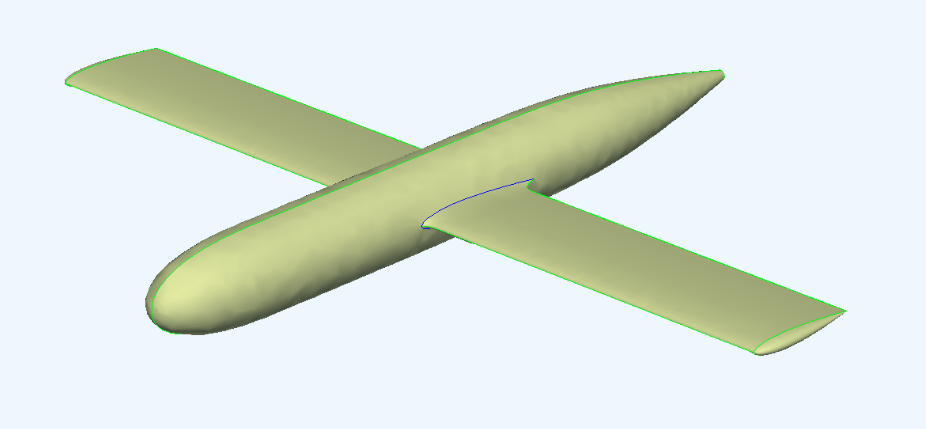

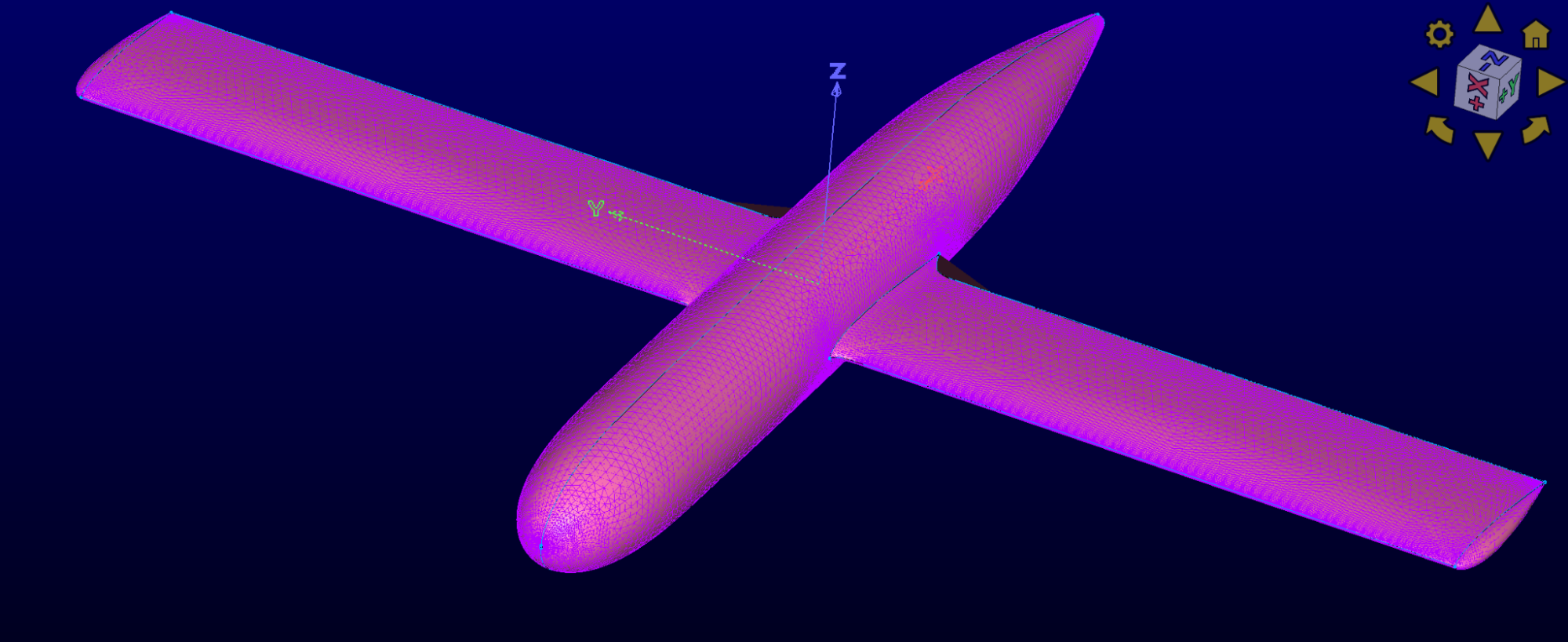

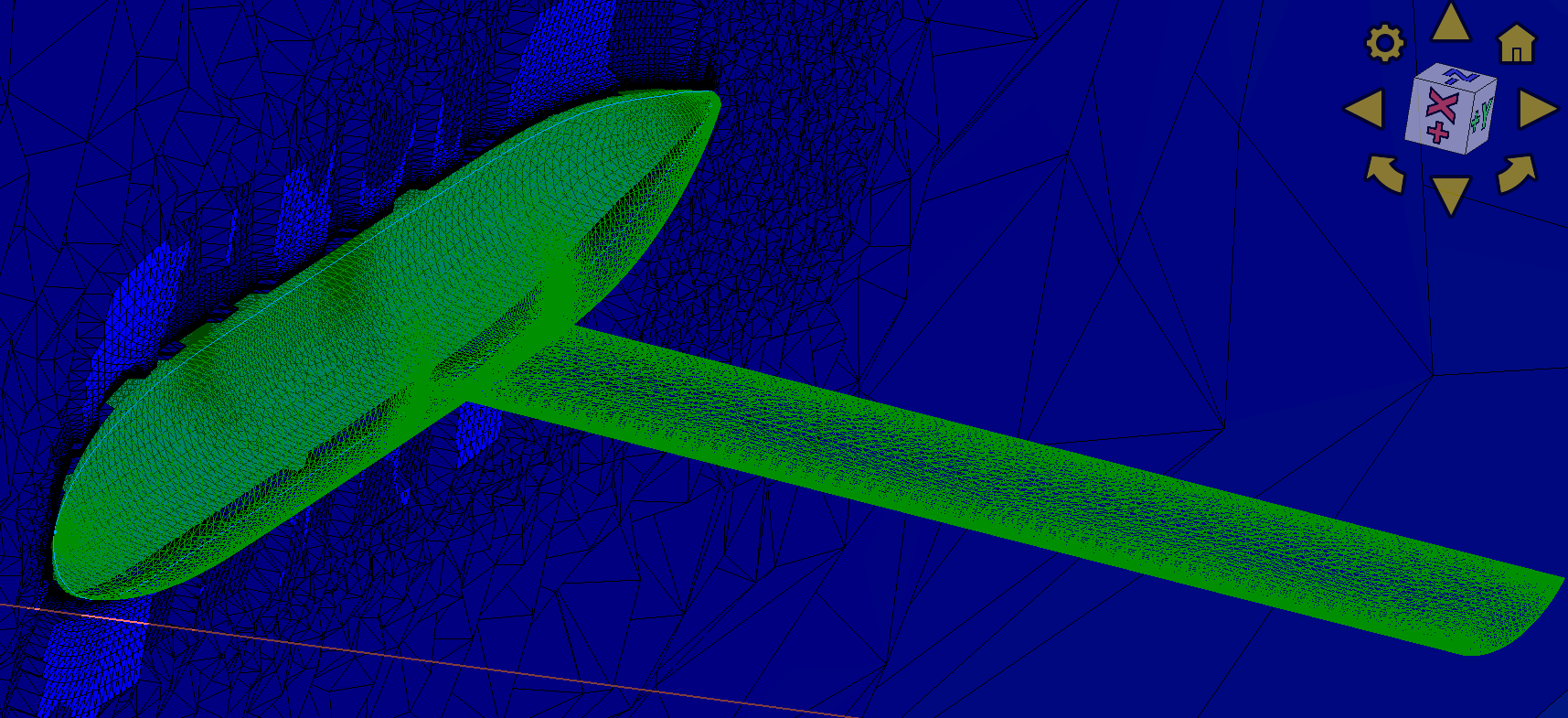

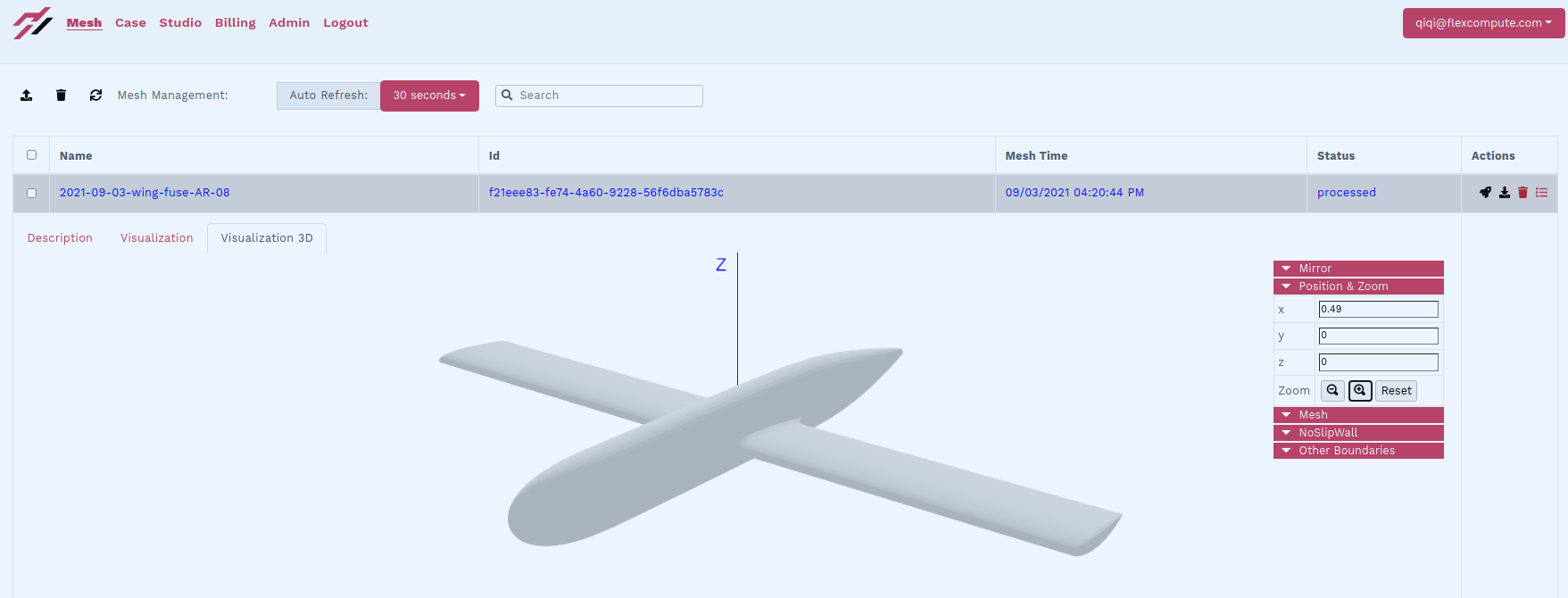

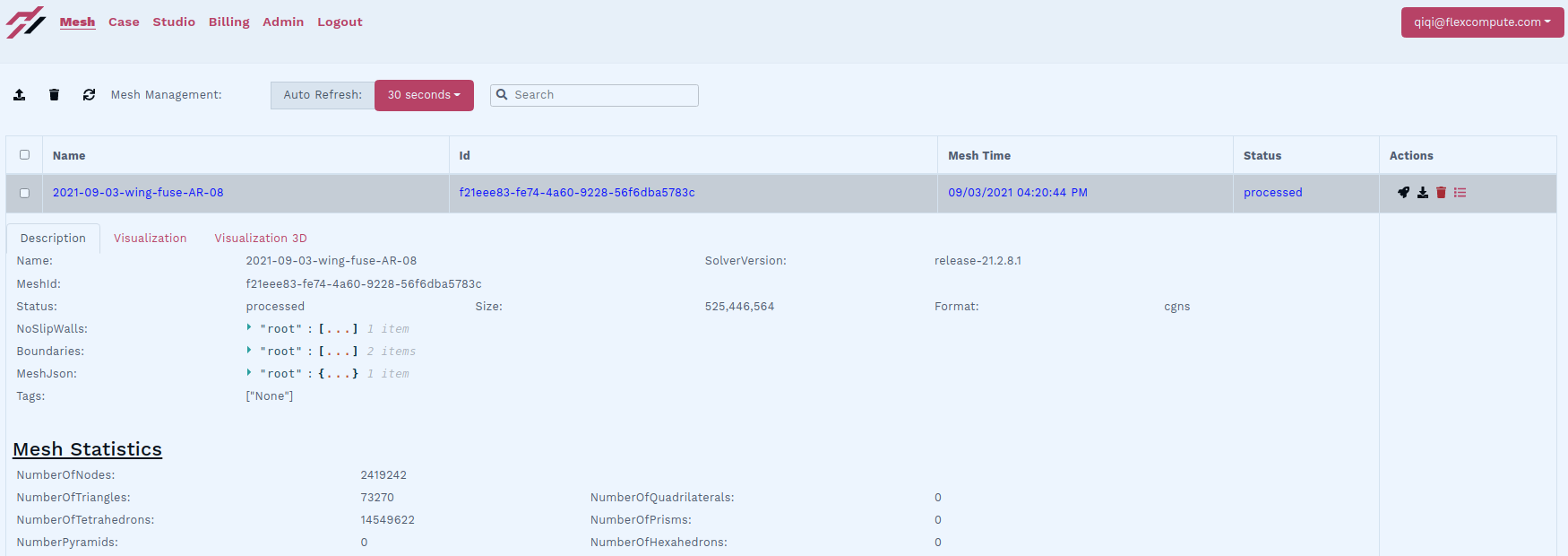

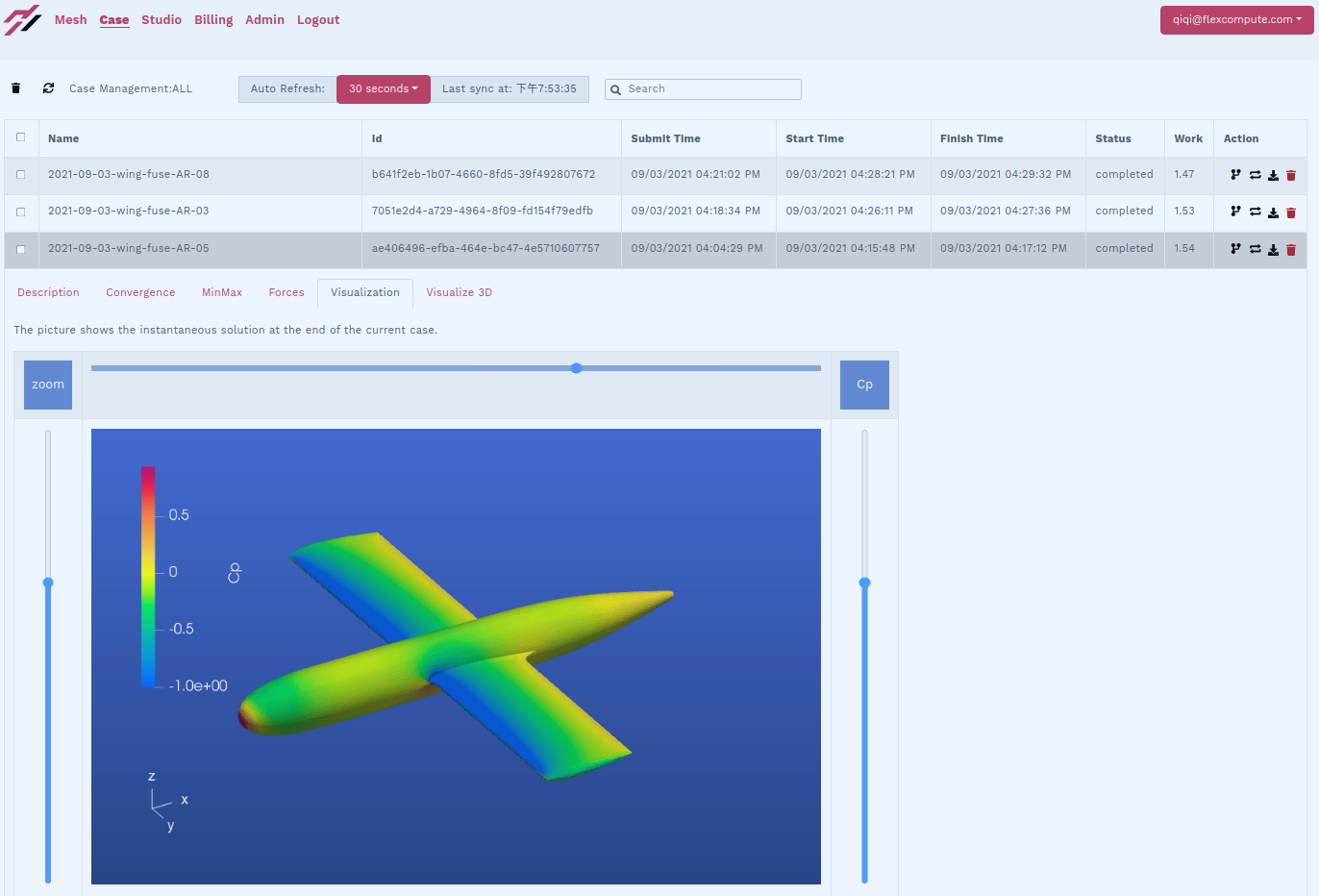

Geometry and Simulation Setup:

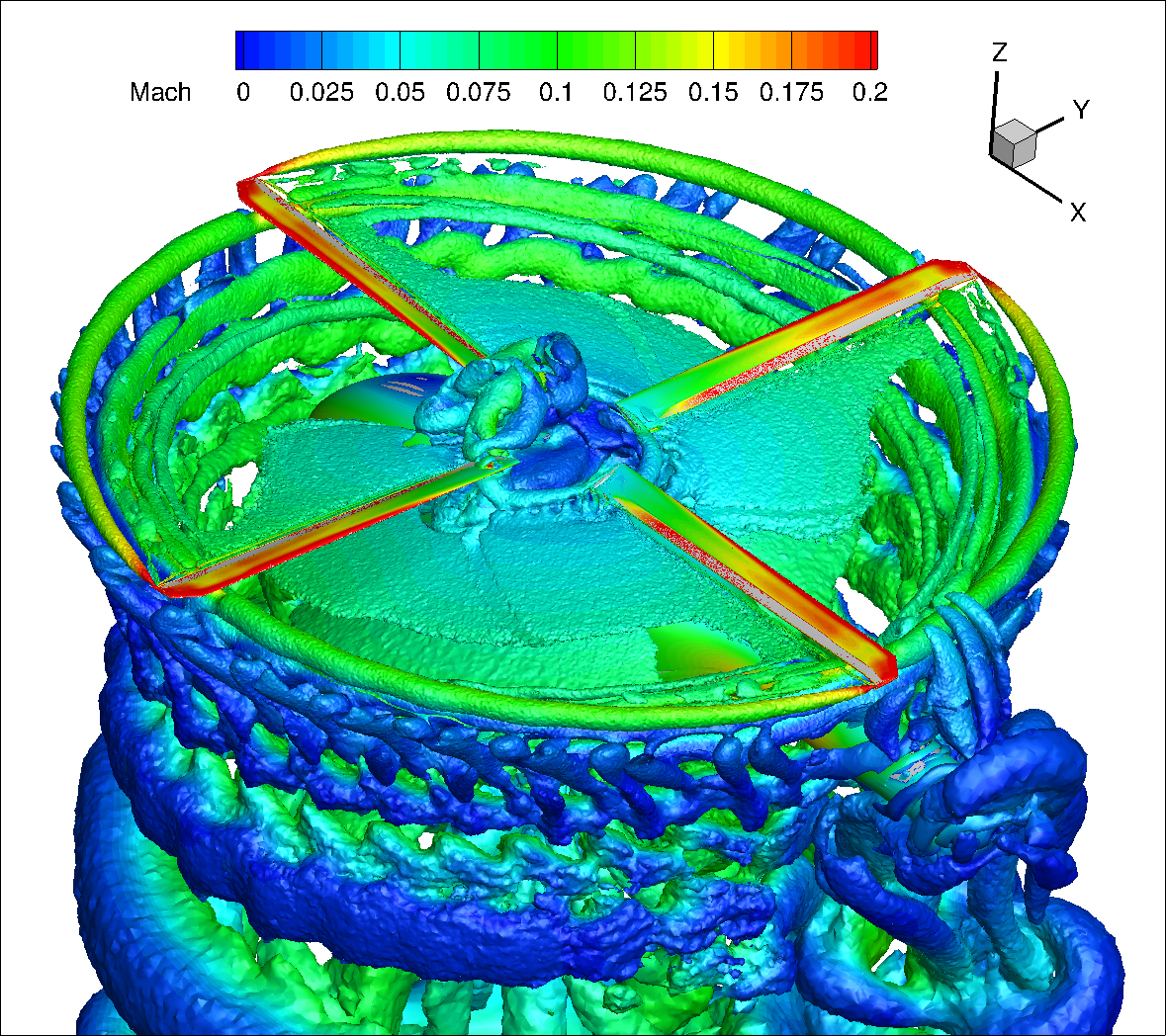

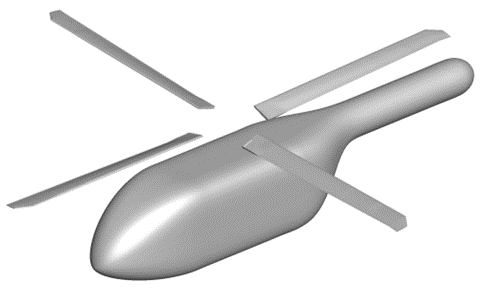

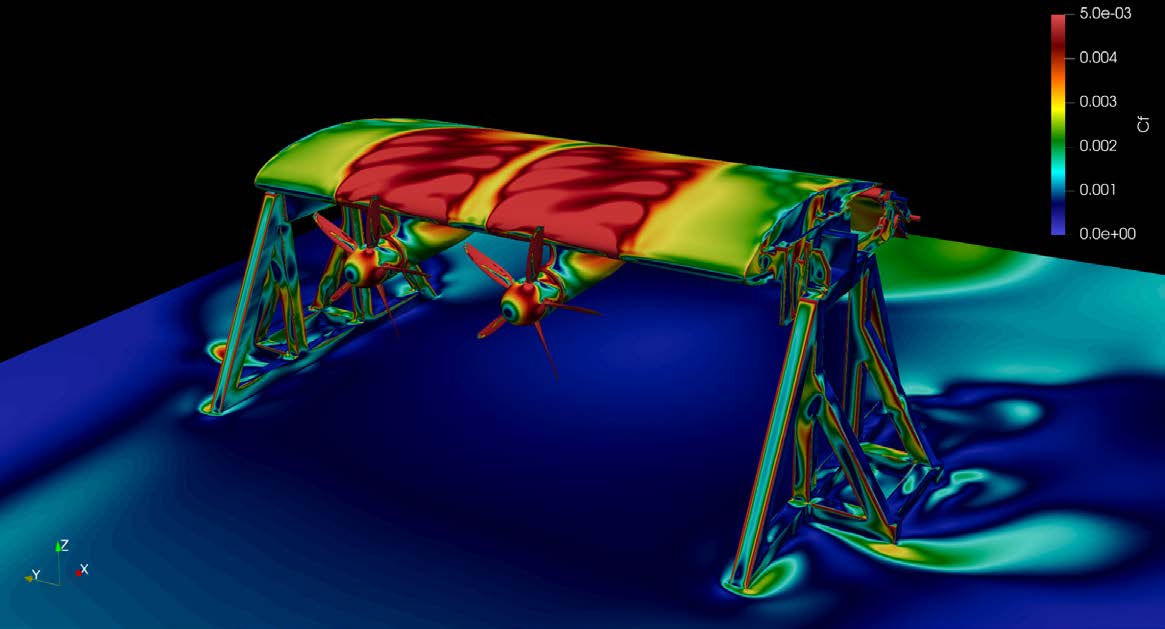

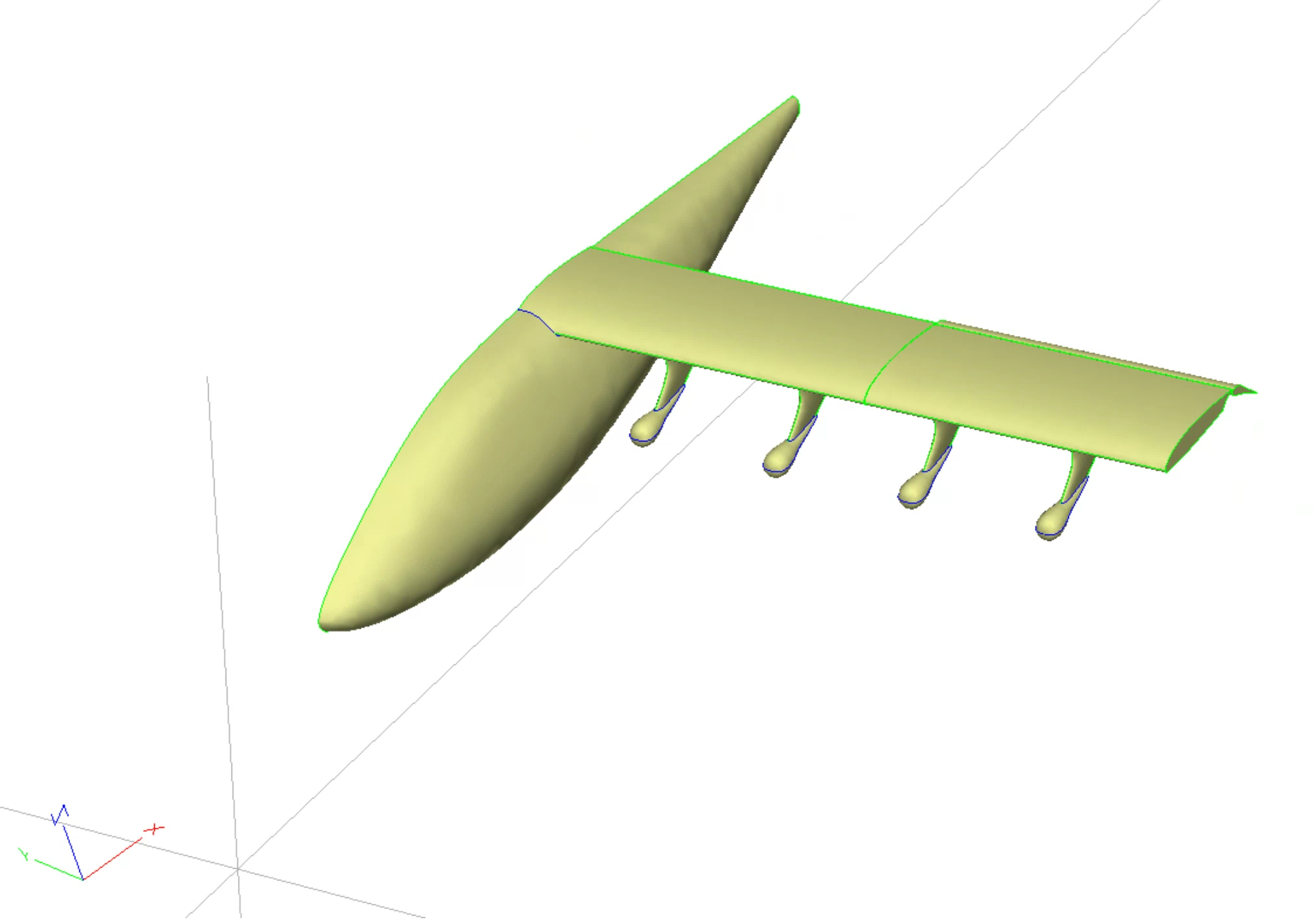

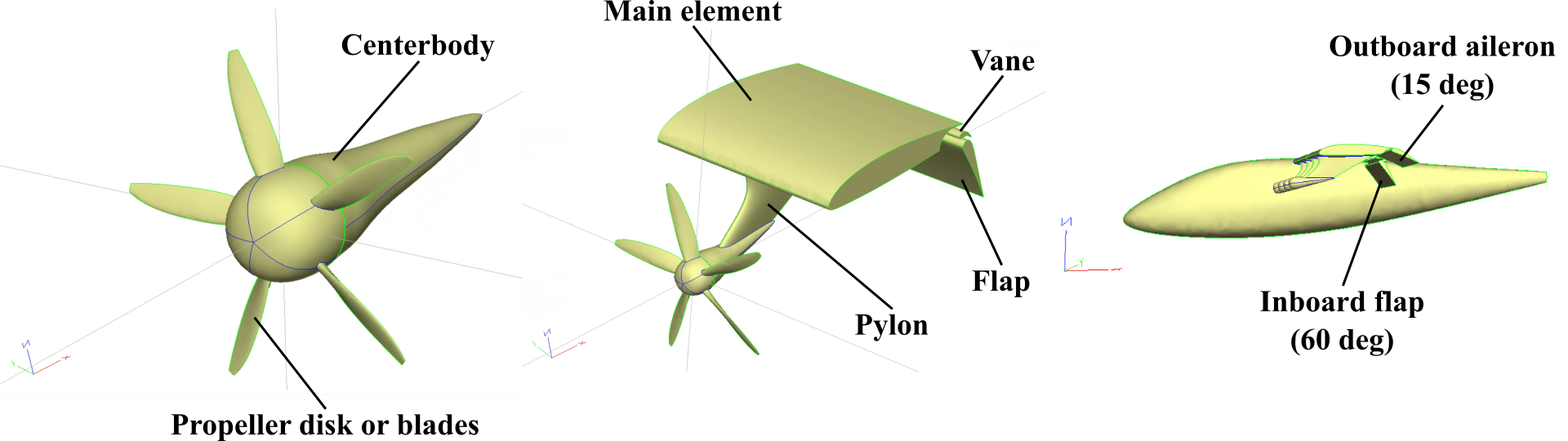

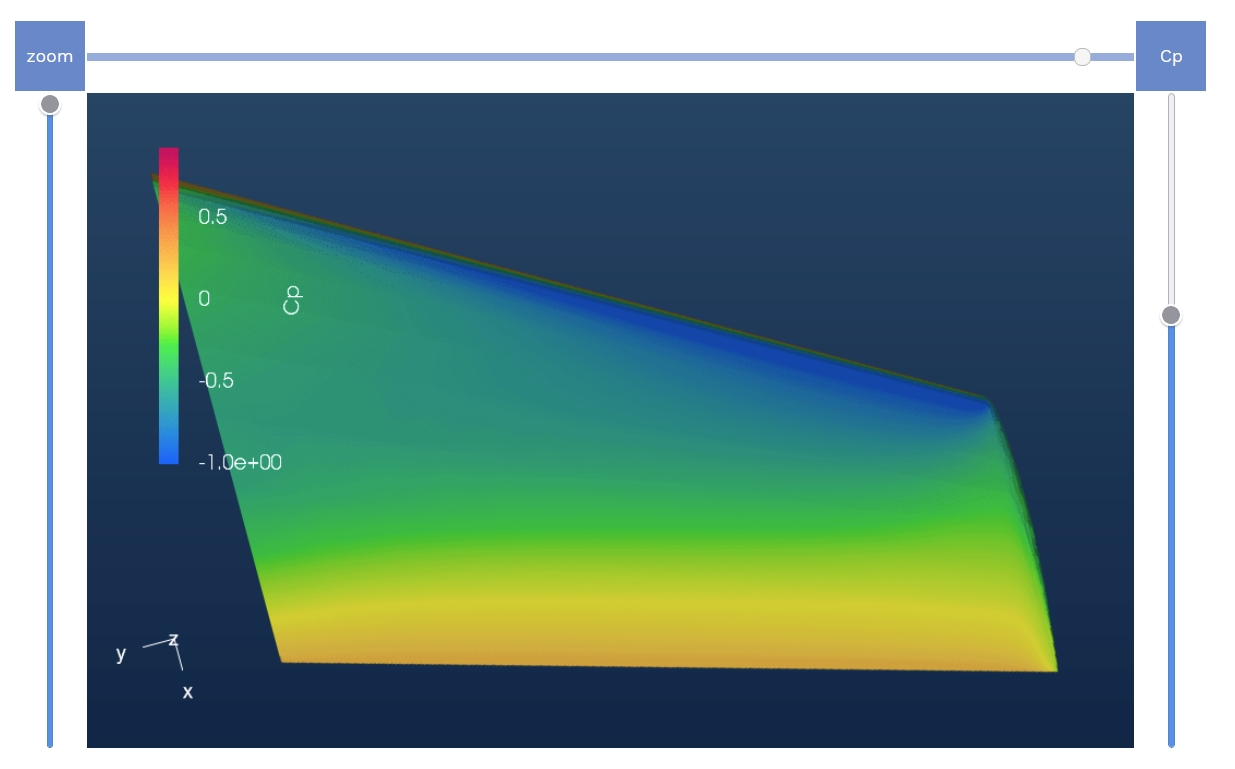

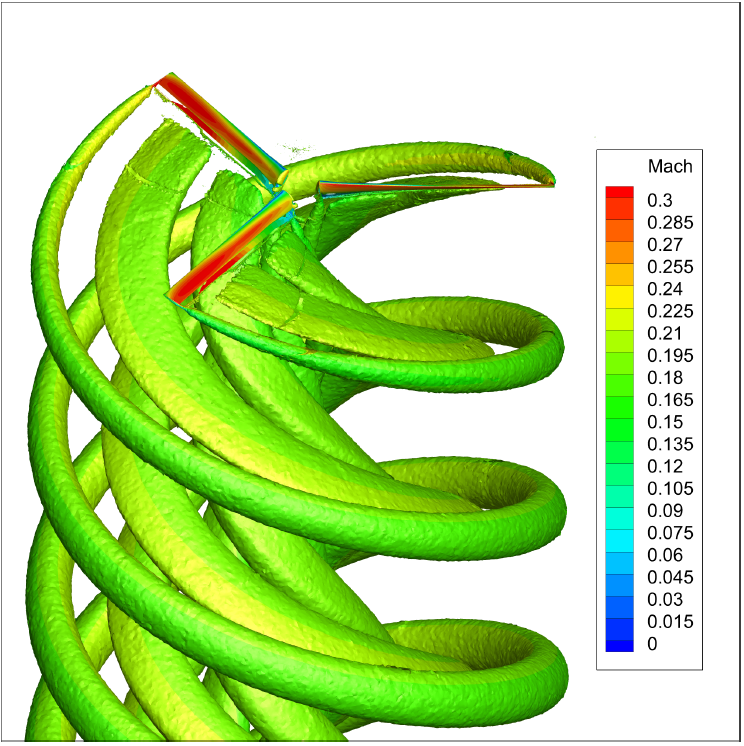

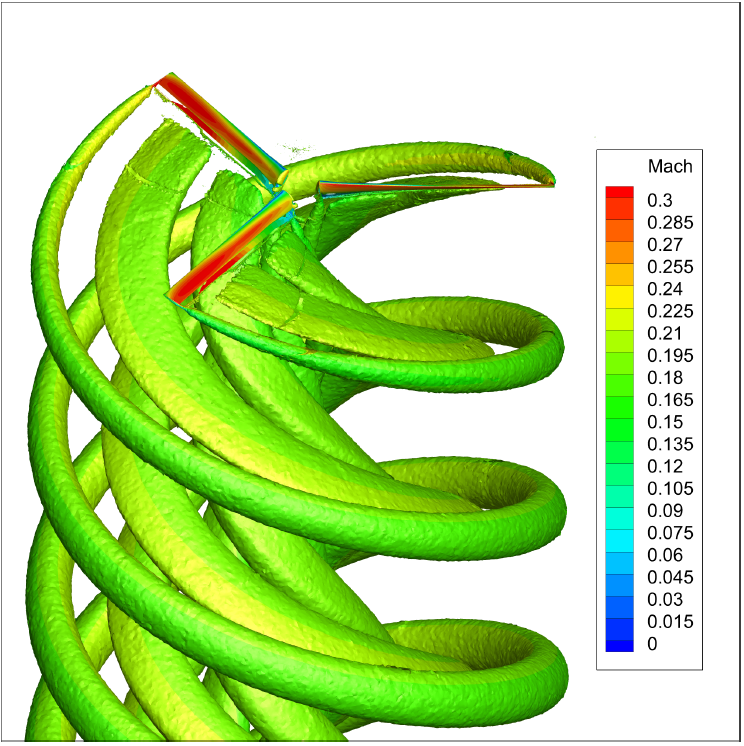

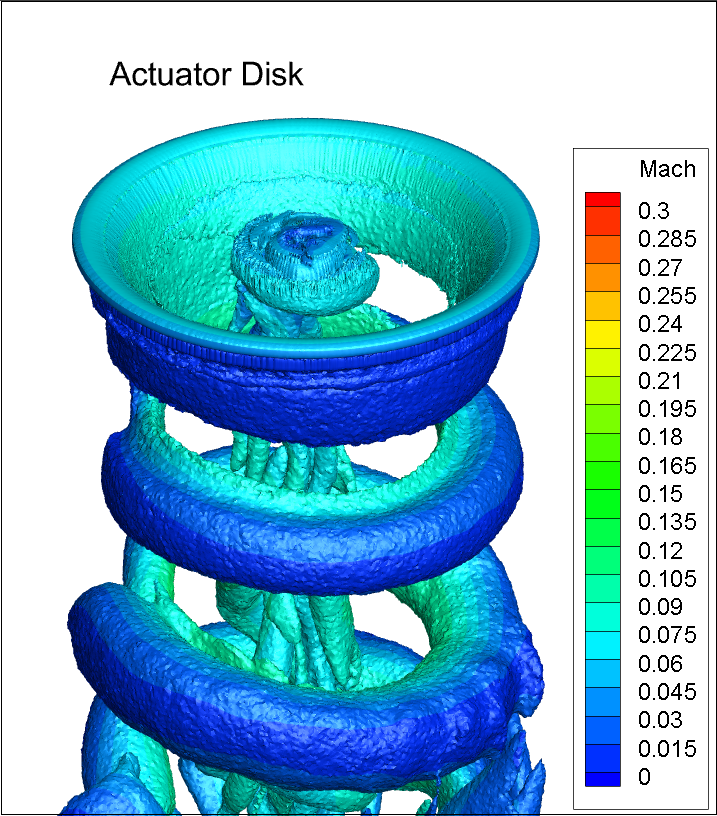

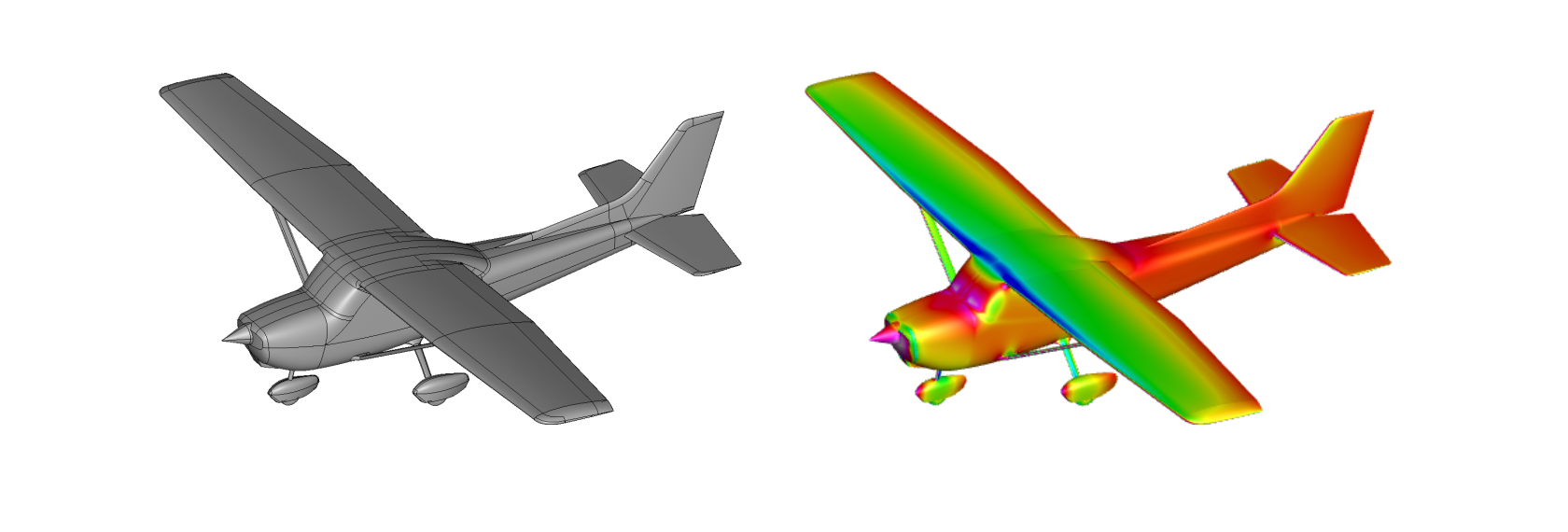

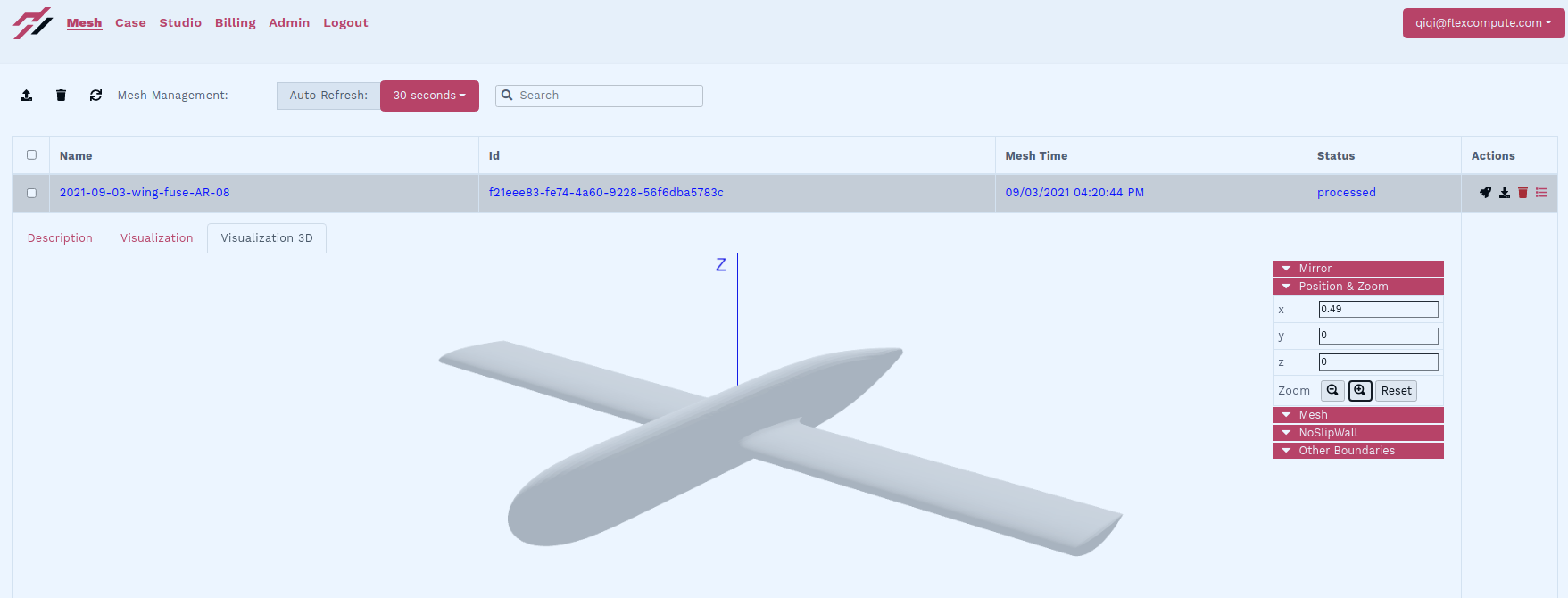

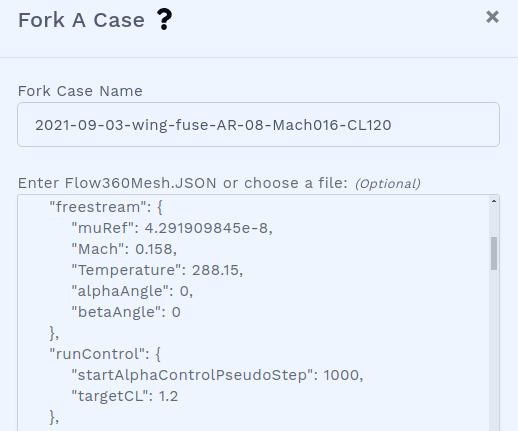

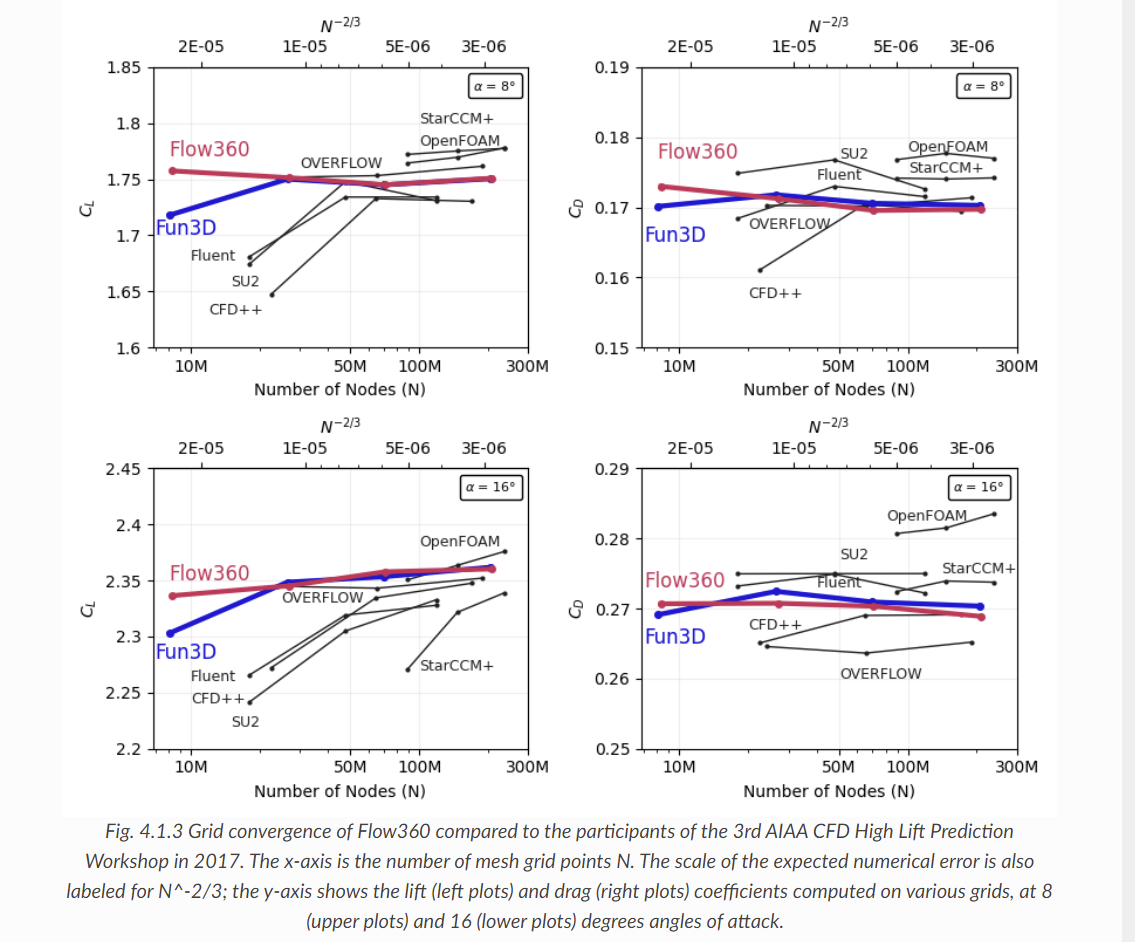

We used a typical aerospace configuration: an aircraft with fuselage, wings, horizontal and vertical tails, and propeller booms, meshed automatically by Flow360 (~25 million nodes).

Simulations were run at Mach 0.15 and 2 degrees angle of attack (AoA), consistently across two years of solver versions.

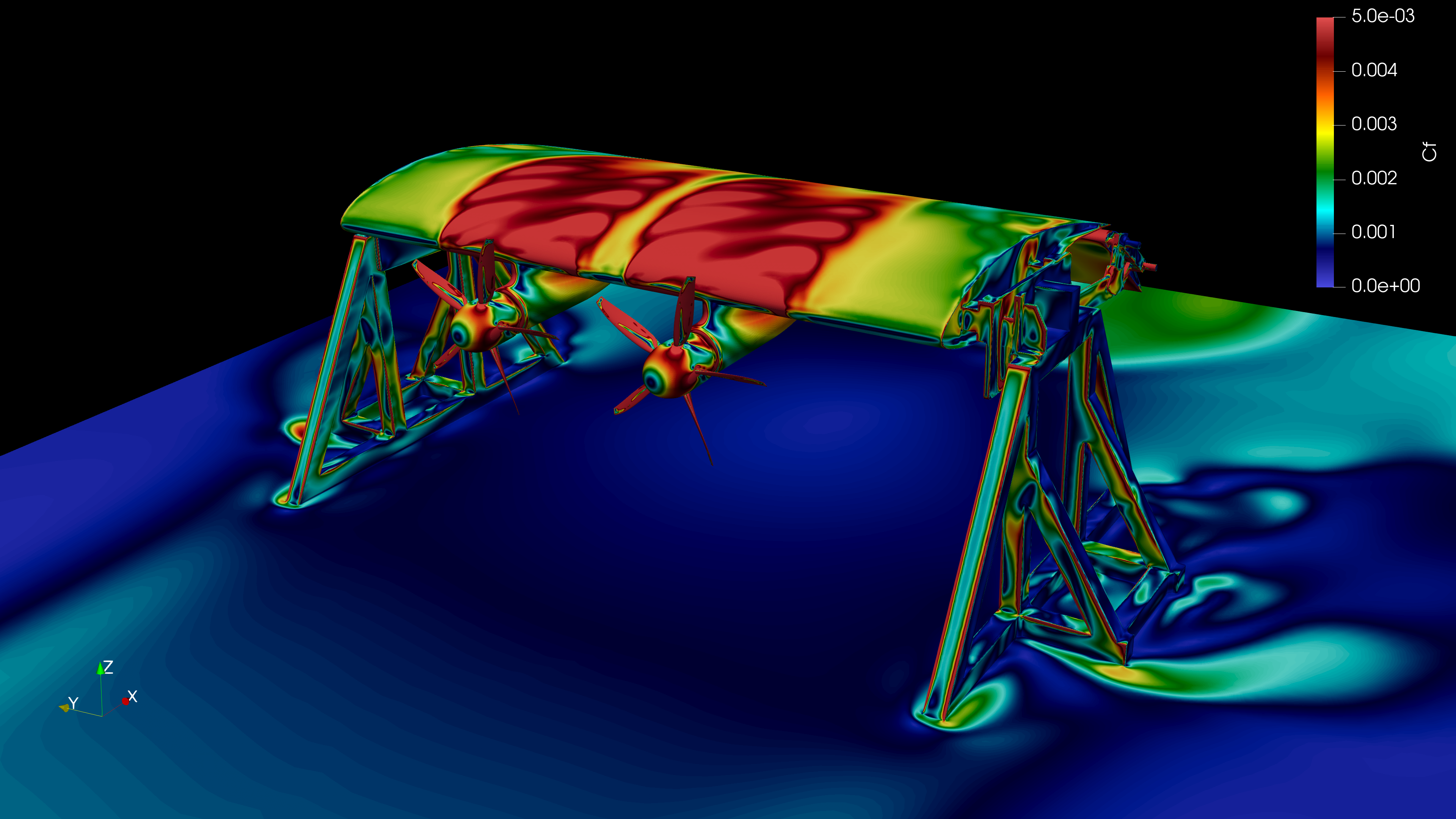

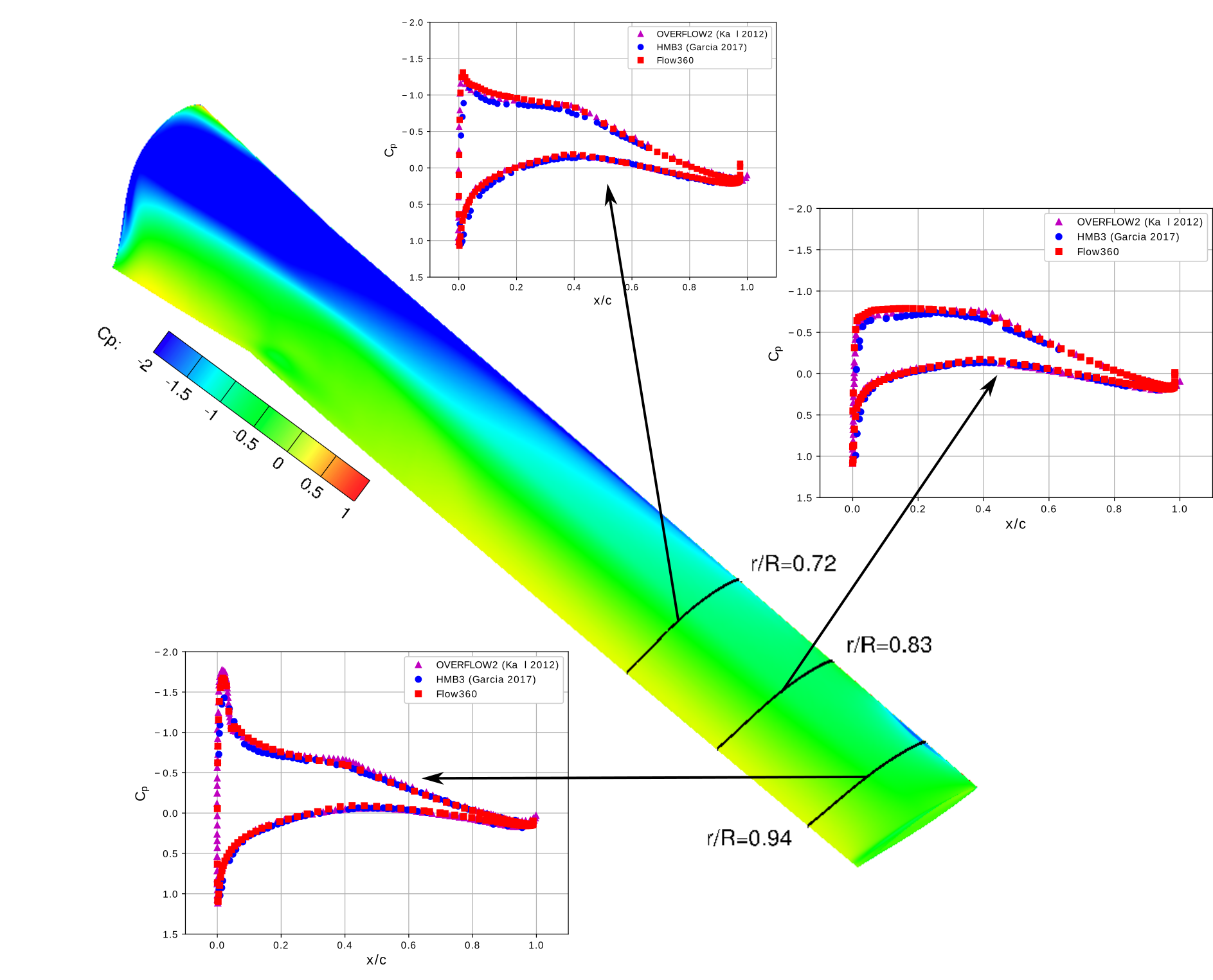

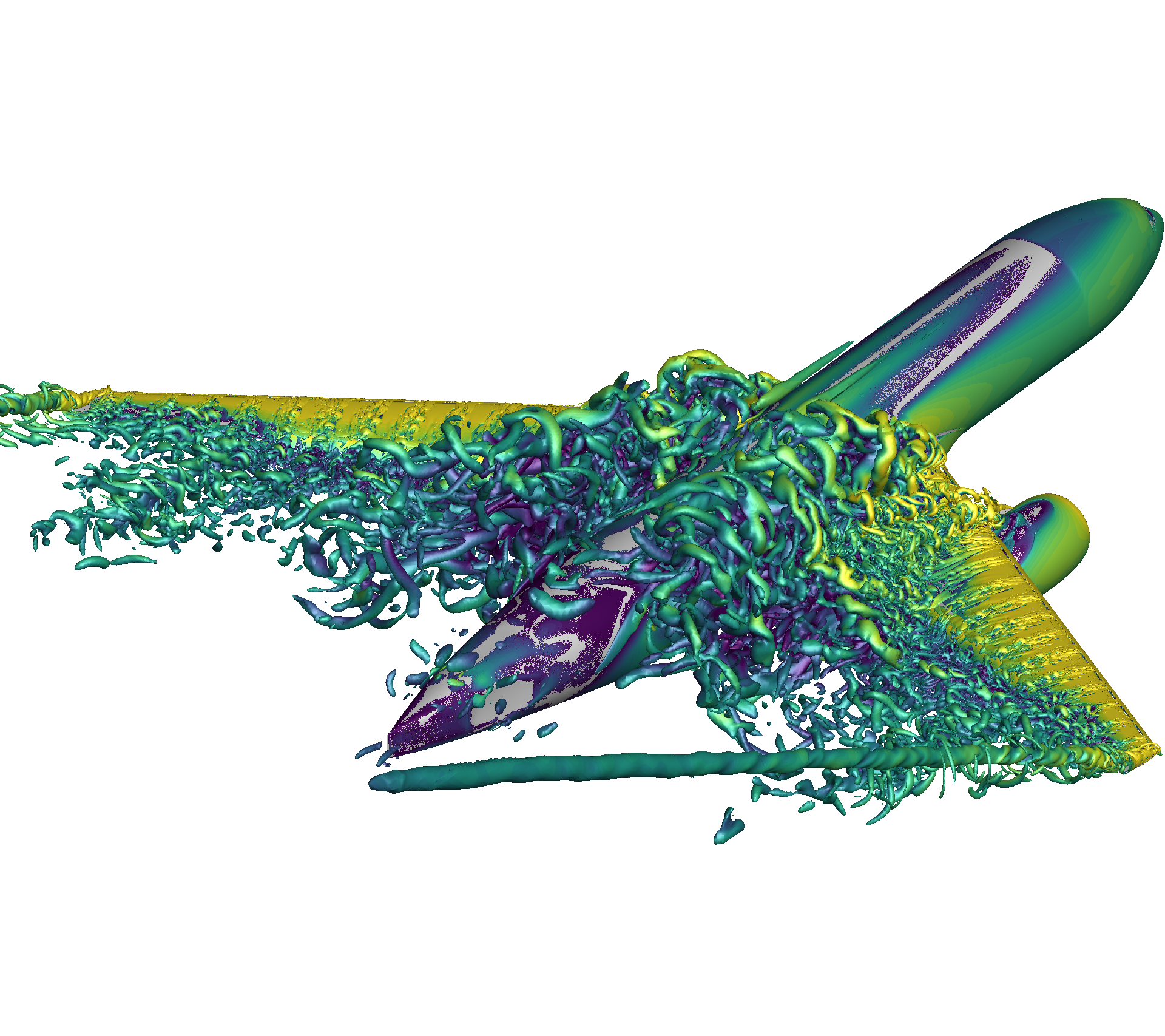

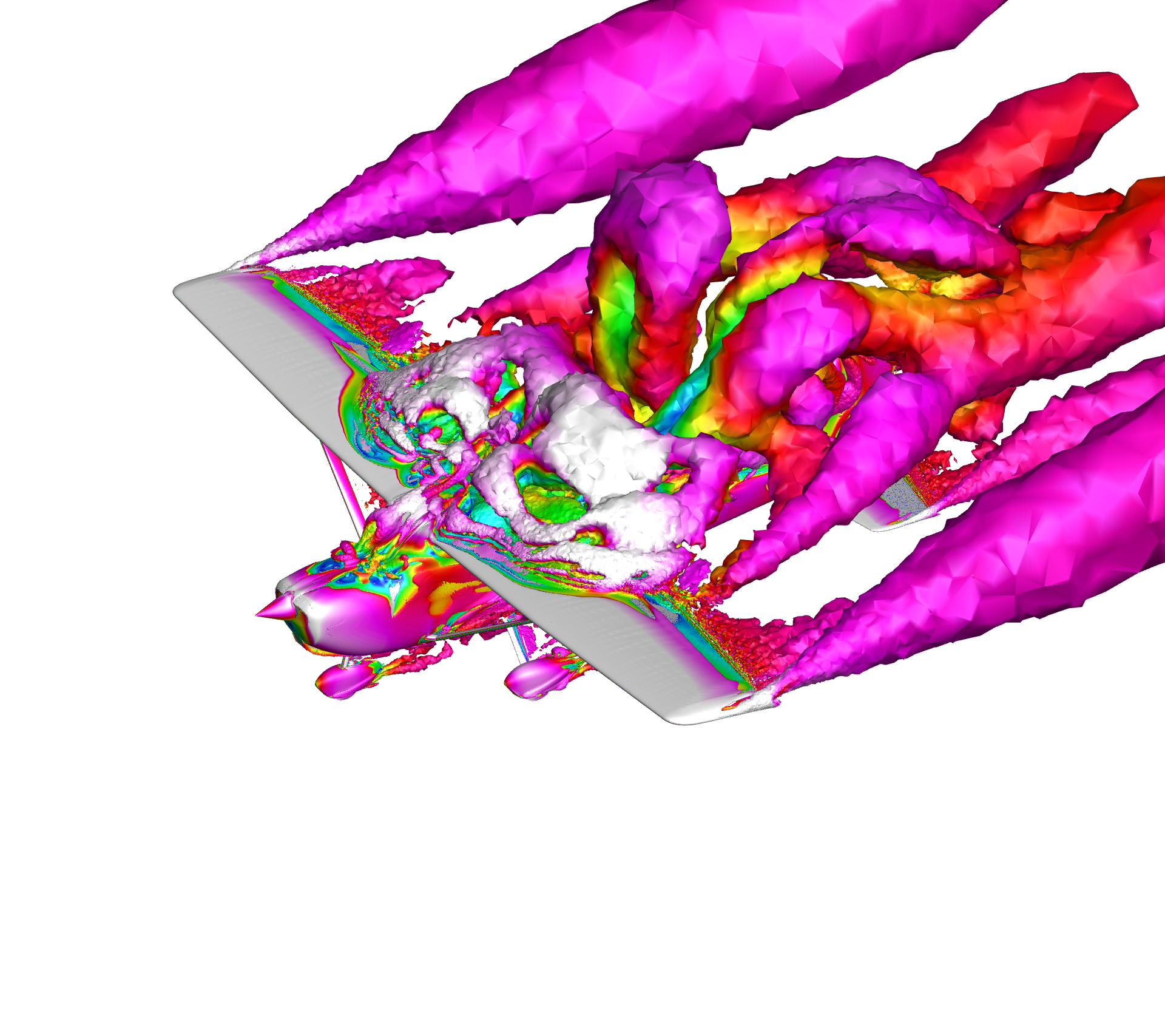

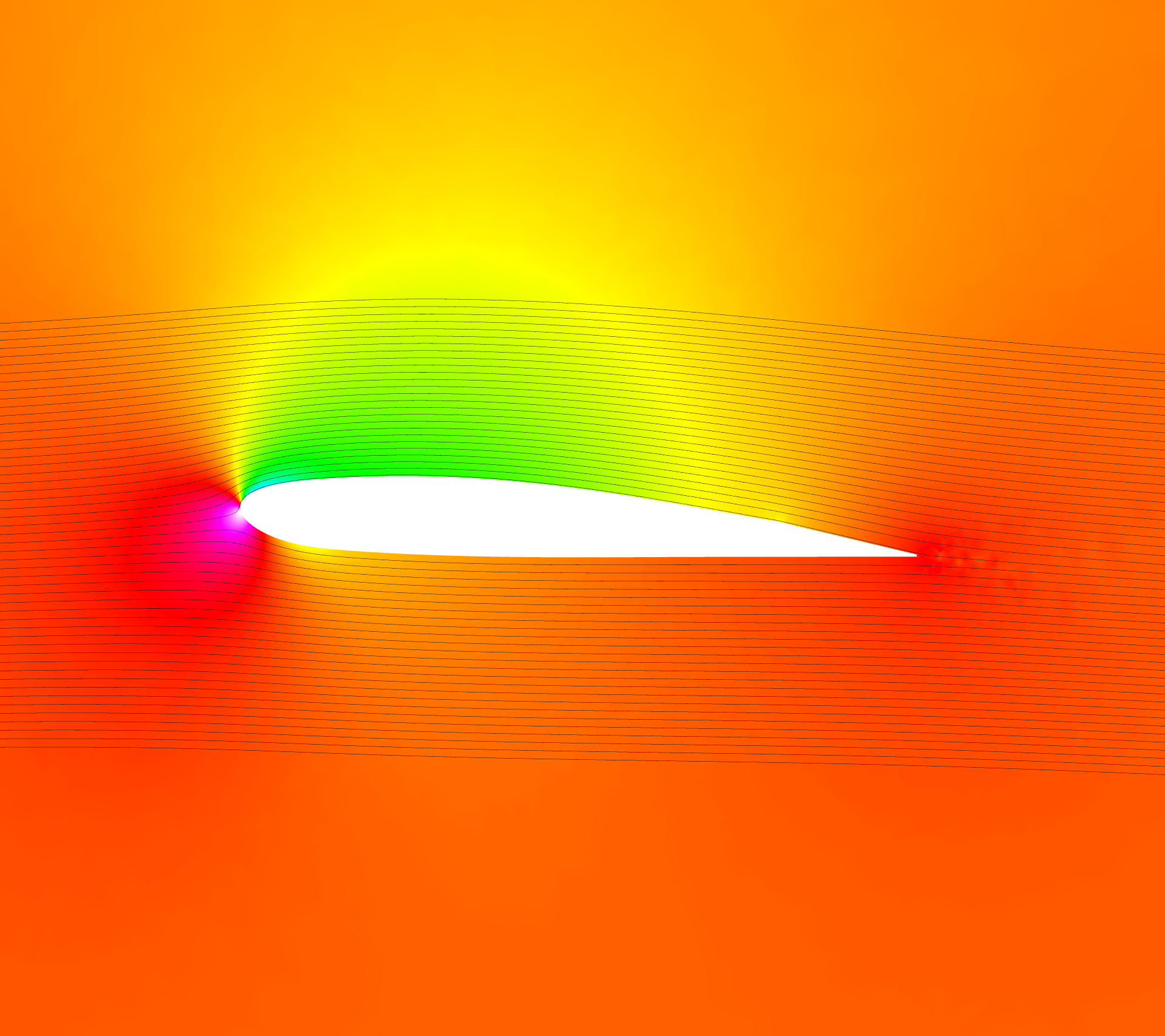

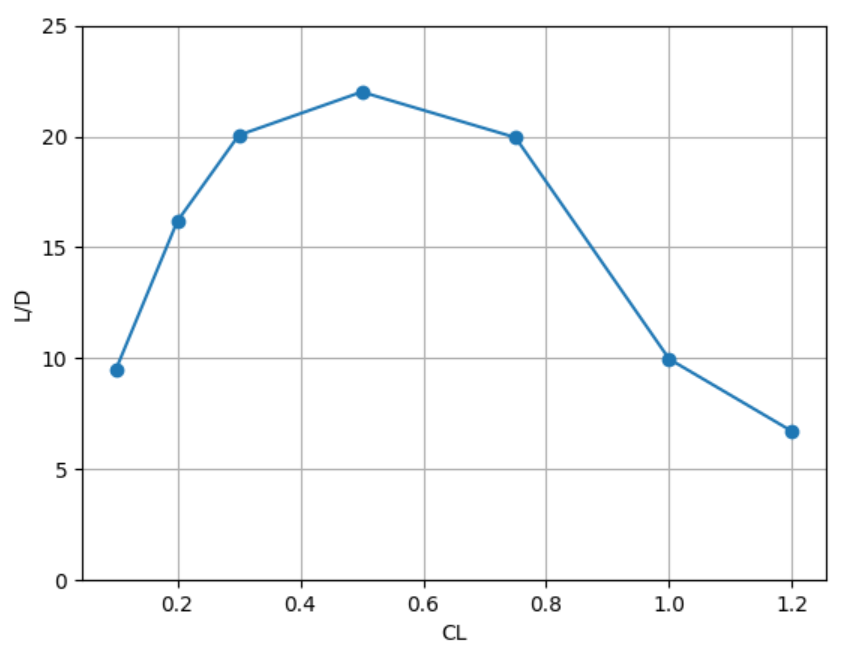

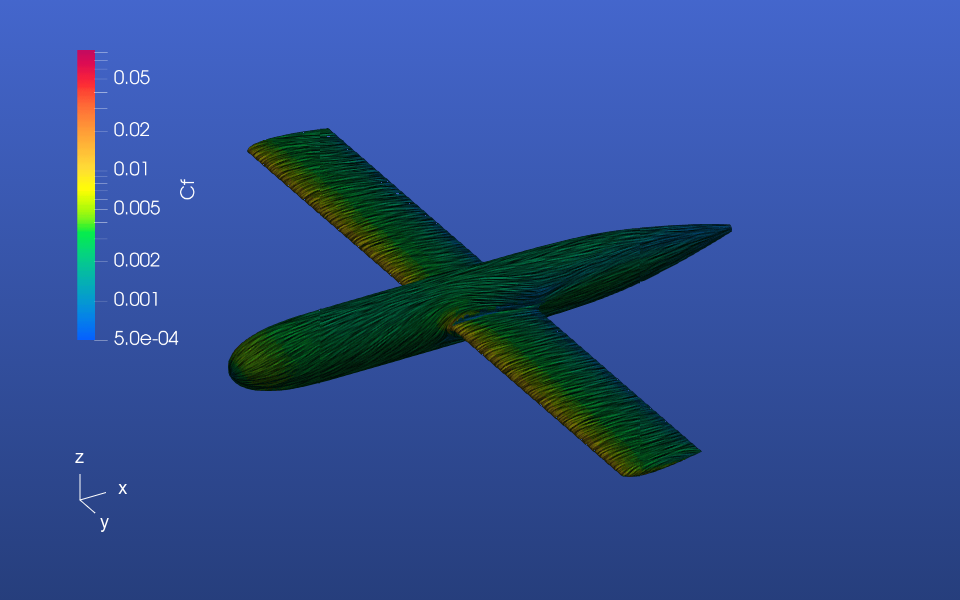

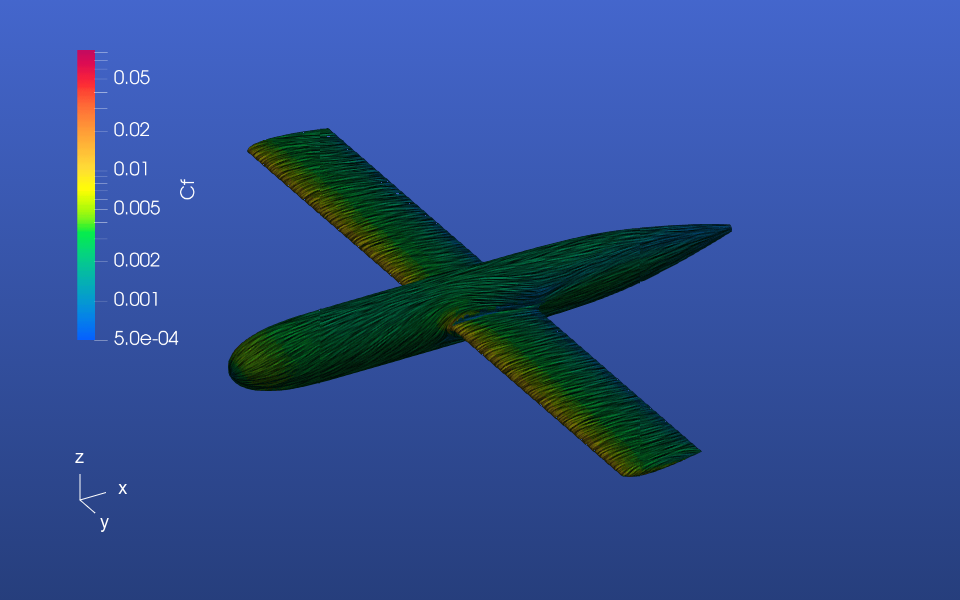

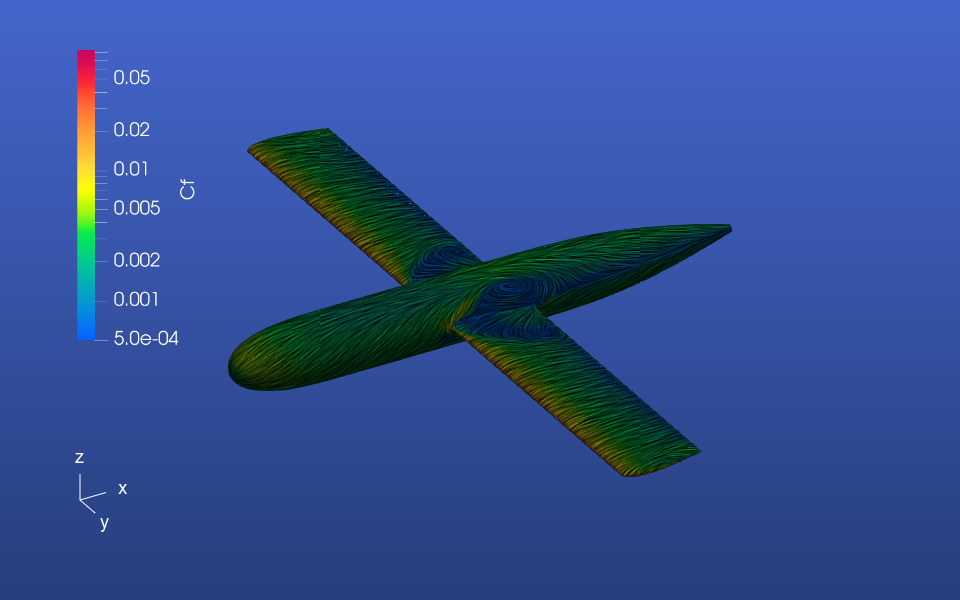

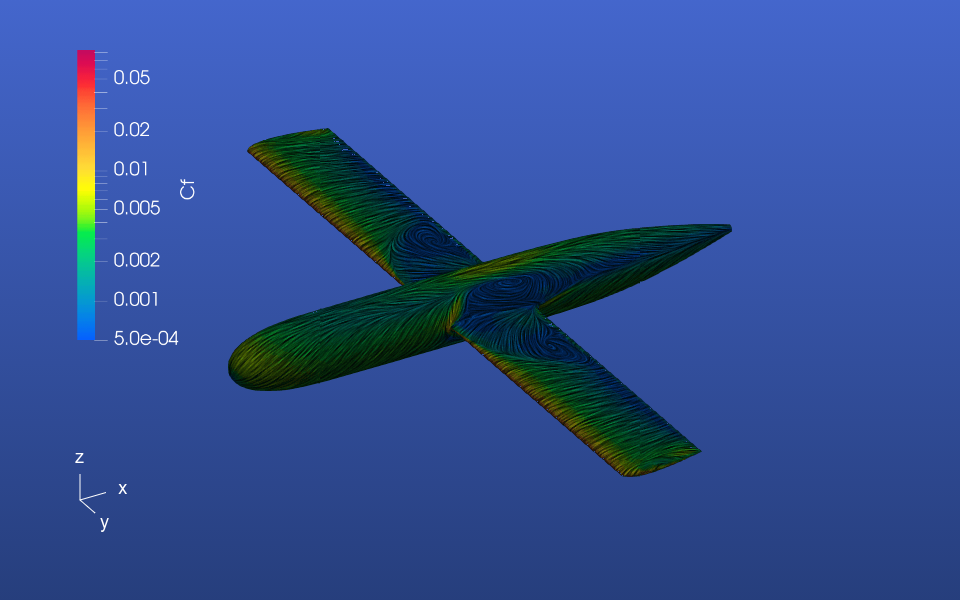

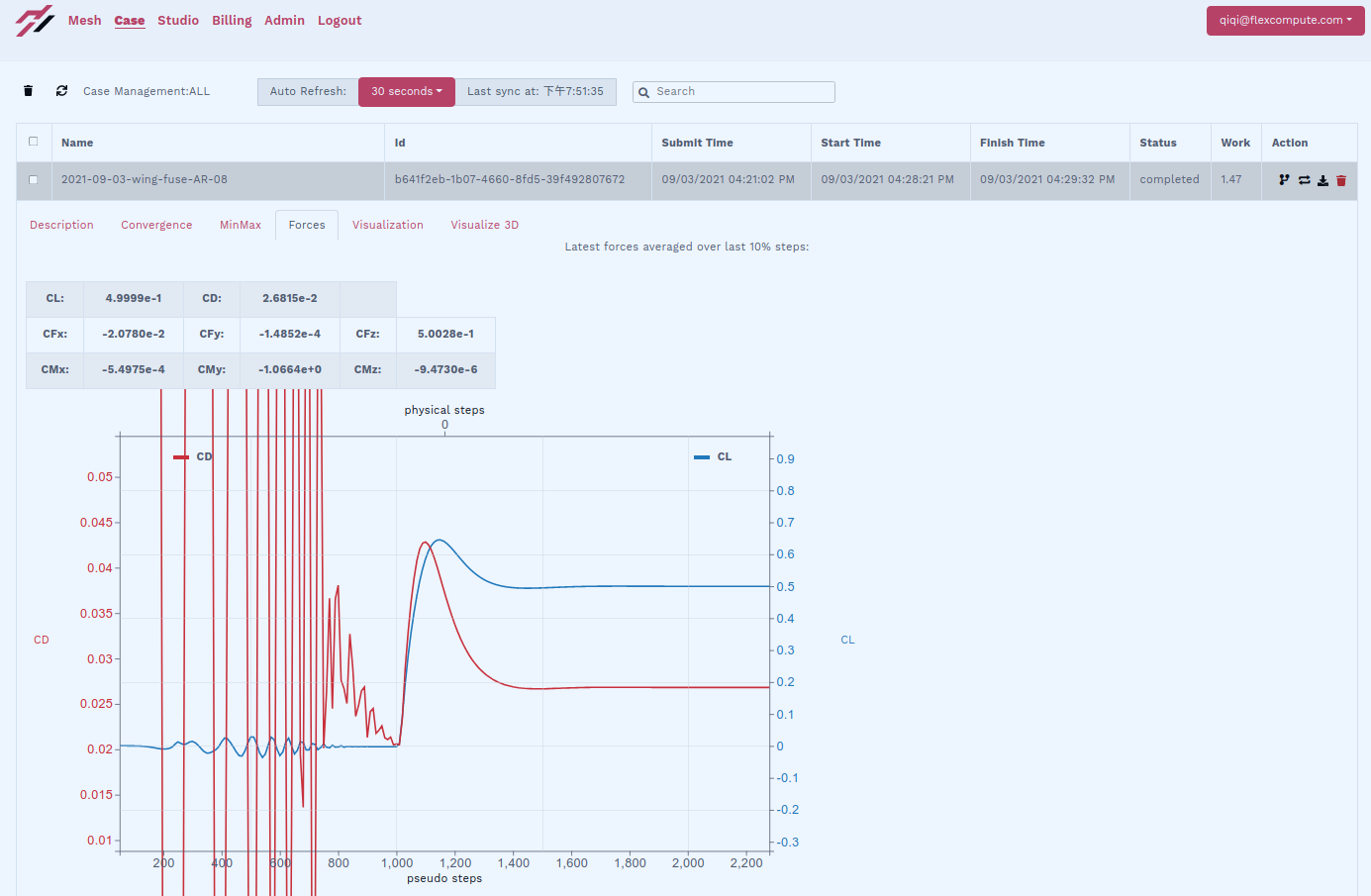

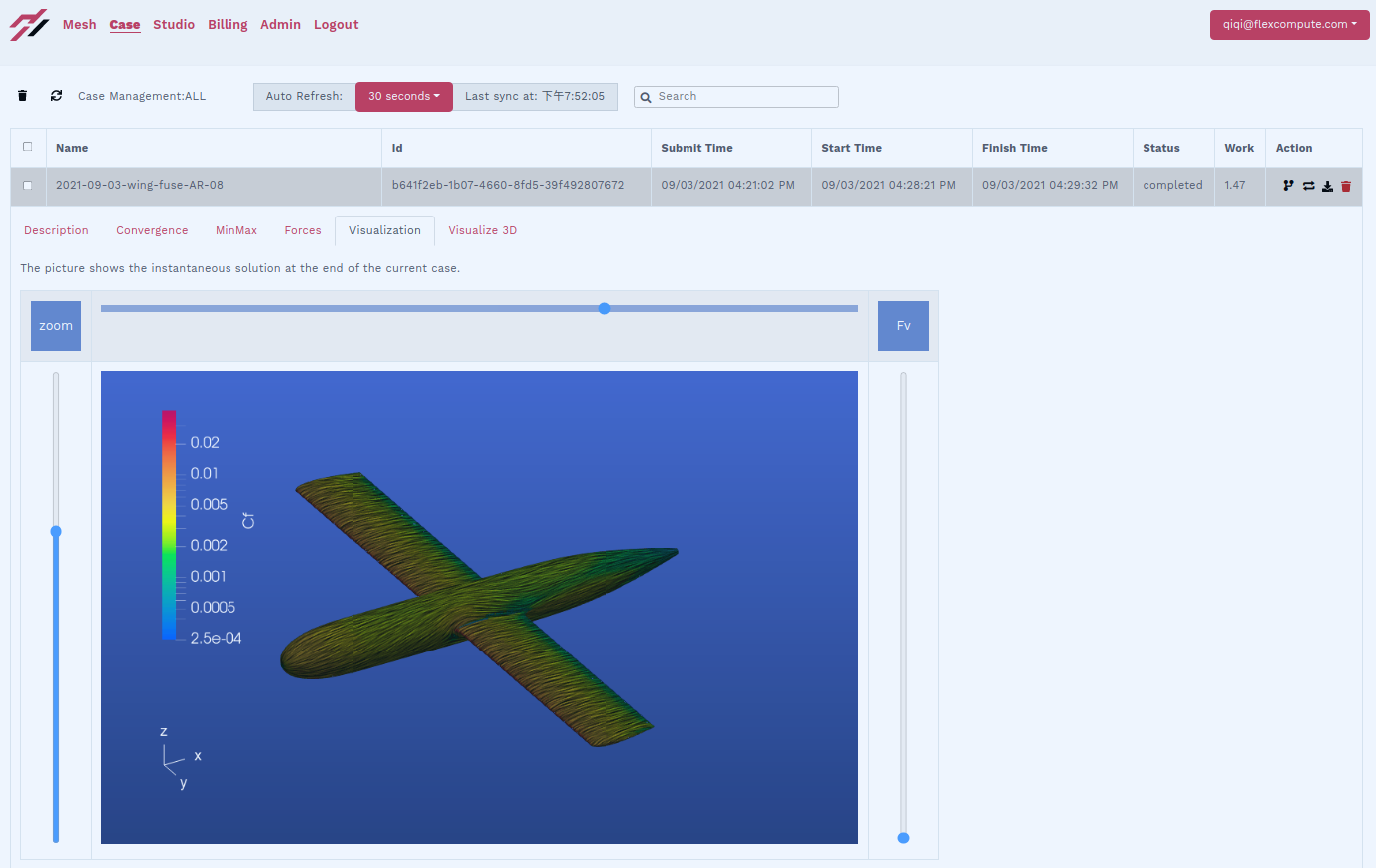

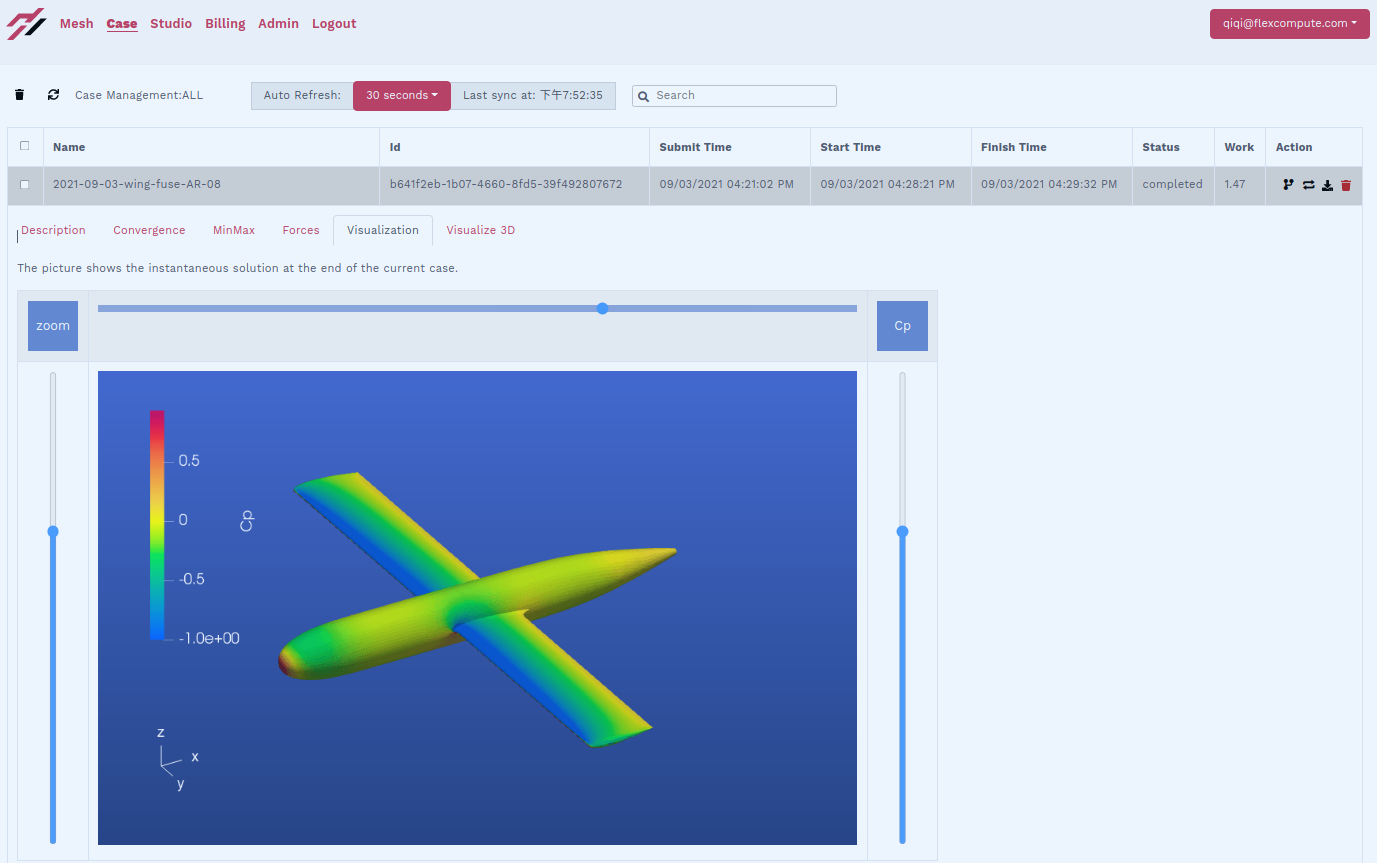

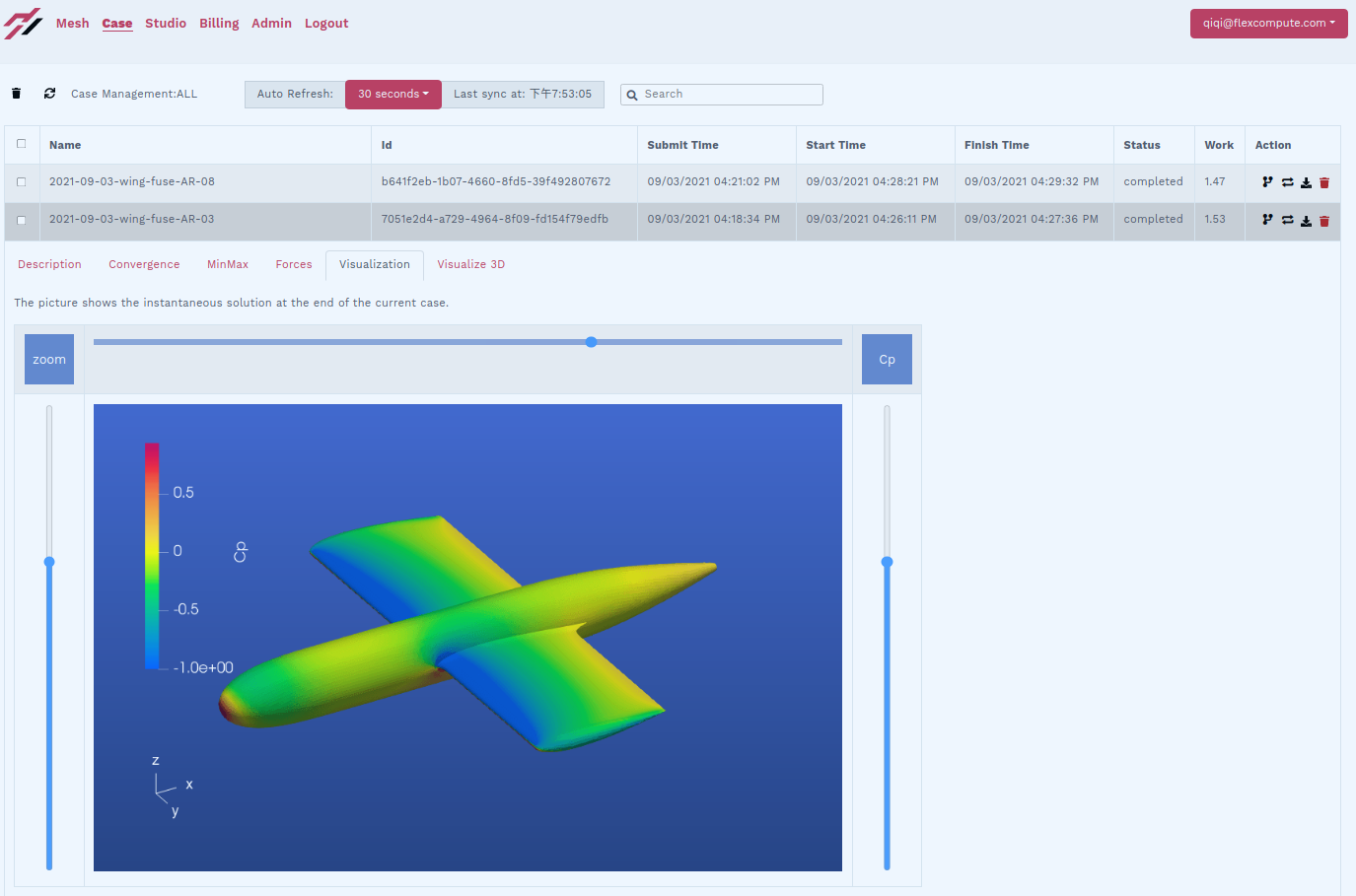

Figure 1: Flow visualization over the aircraft with skin friction vectors.

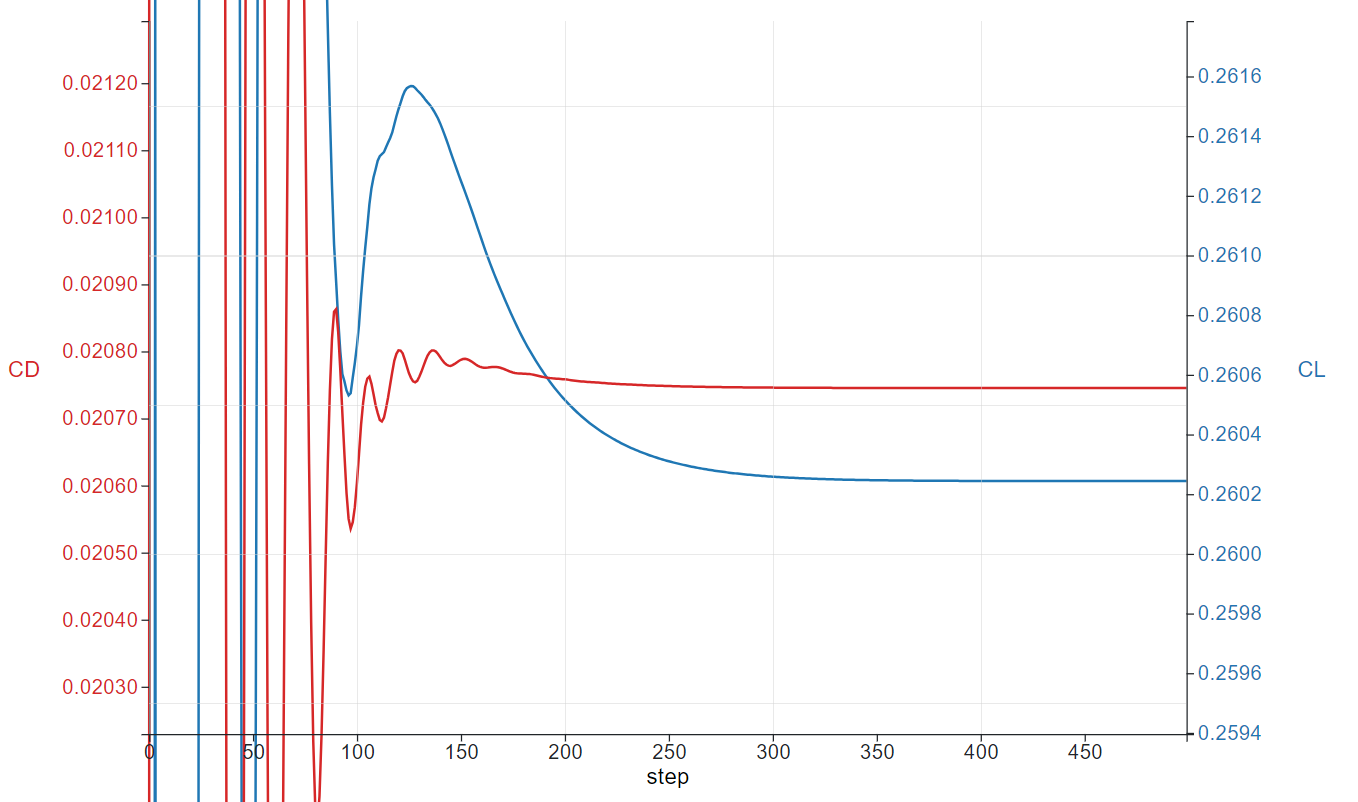

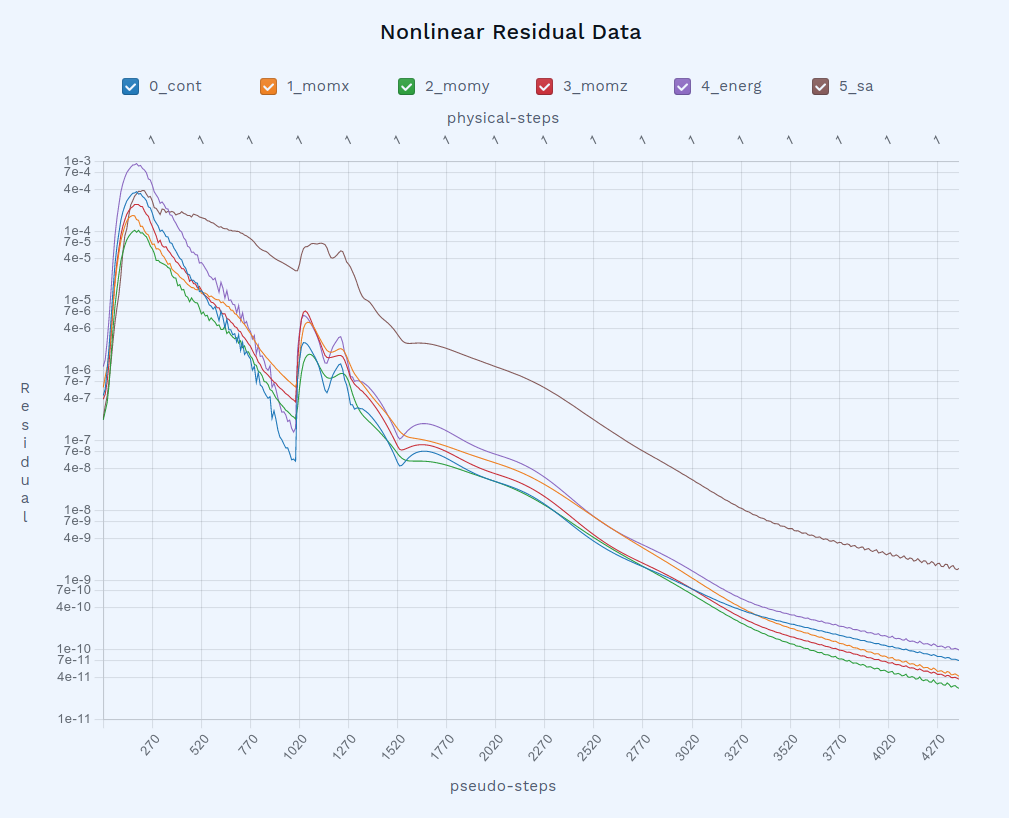

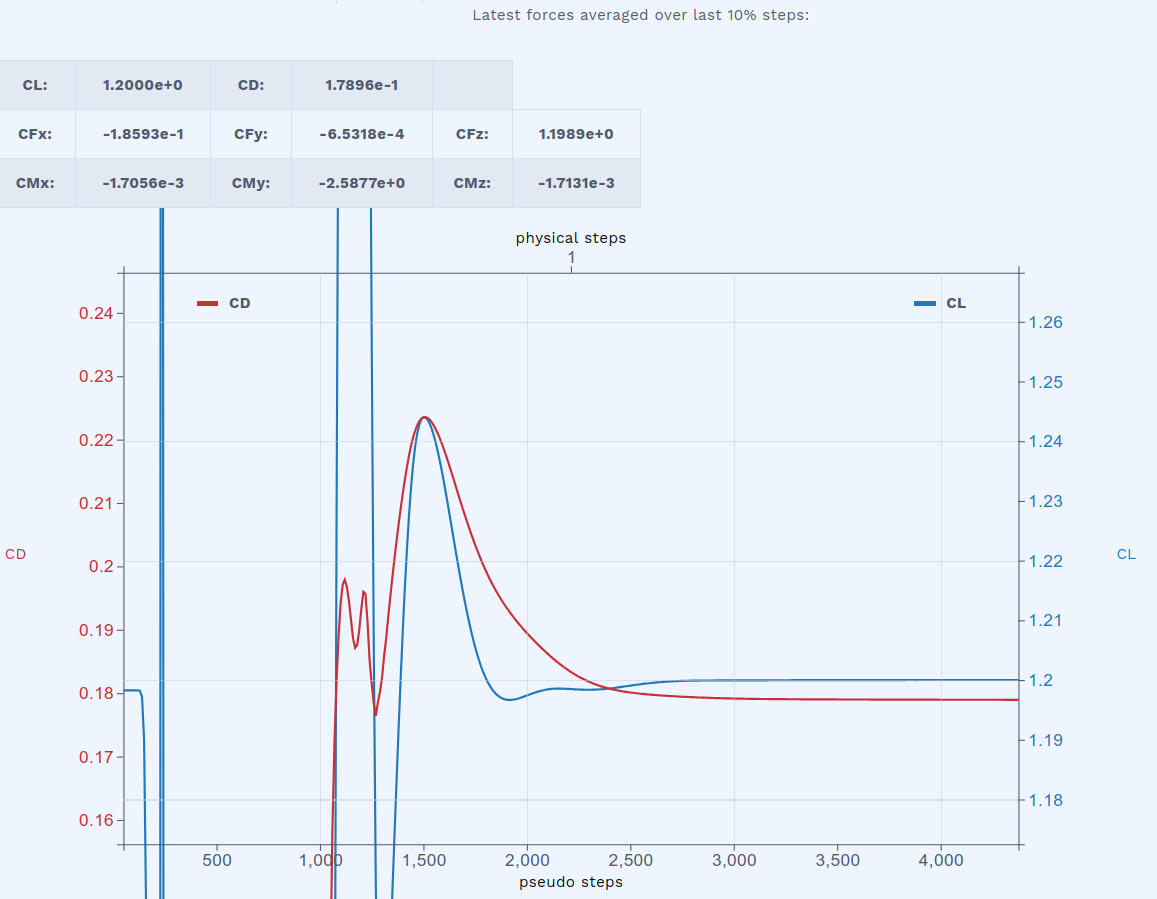

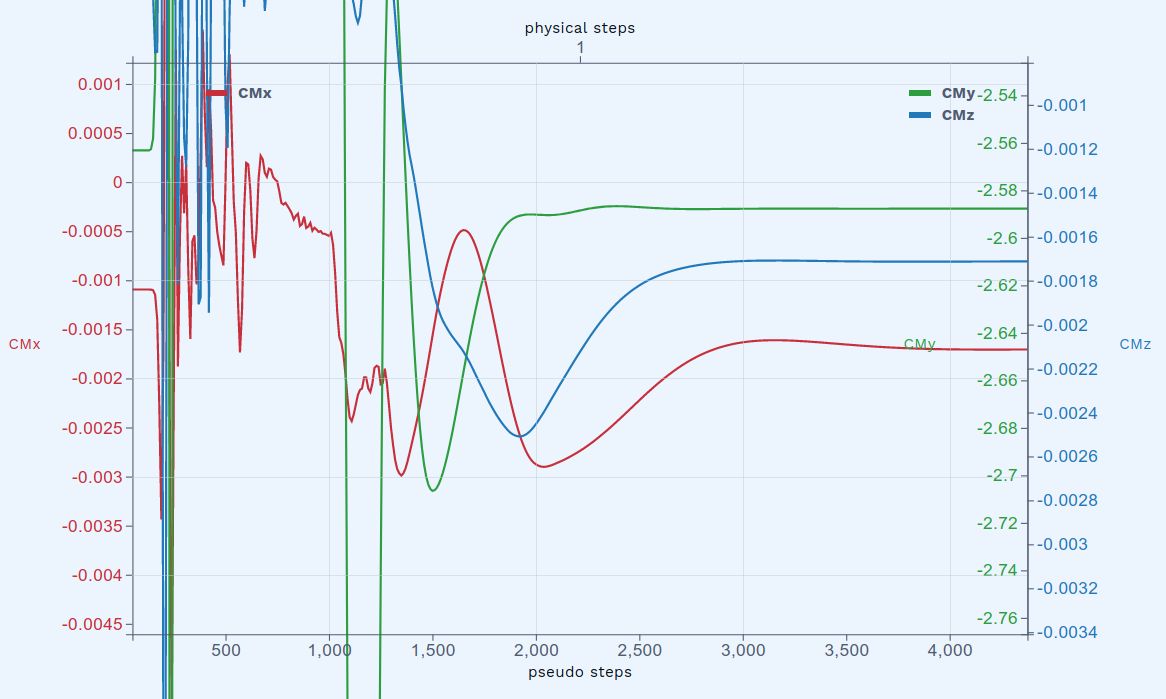

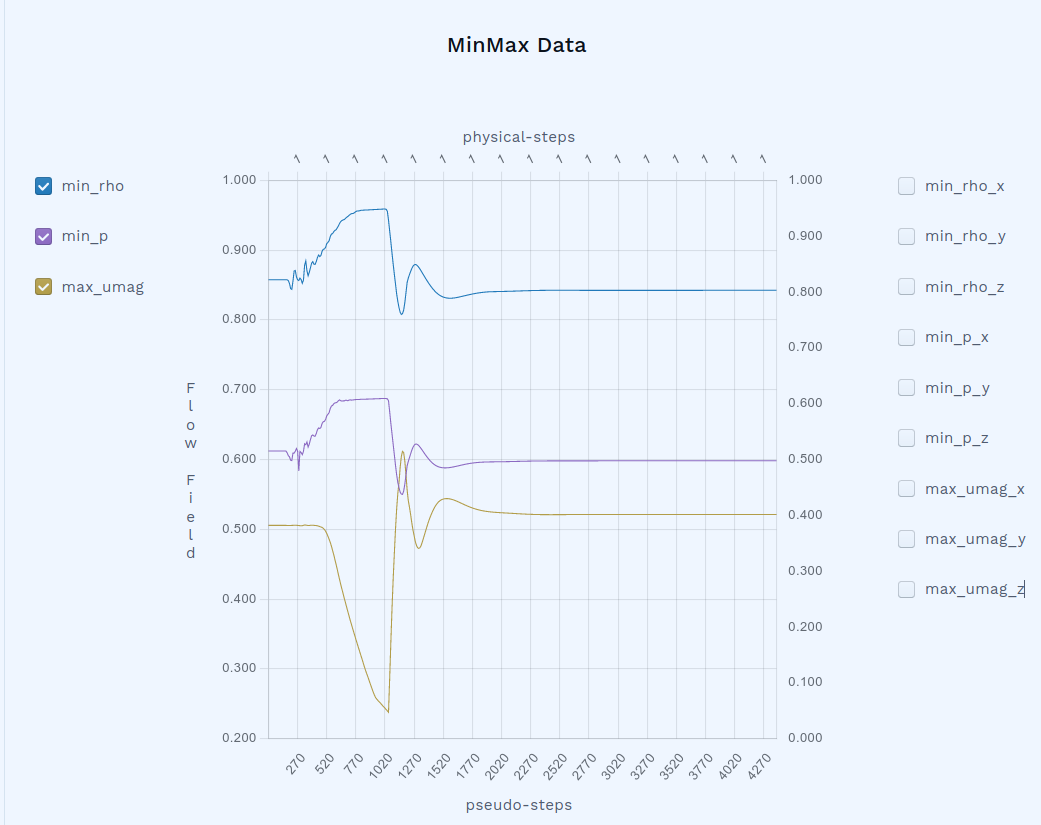

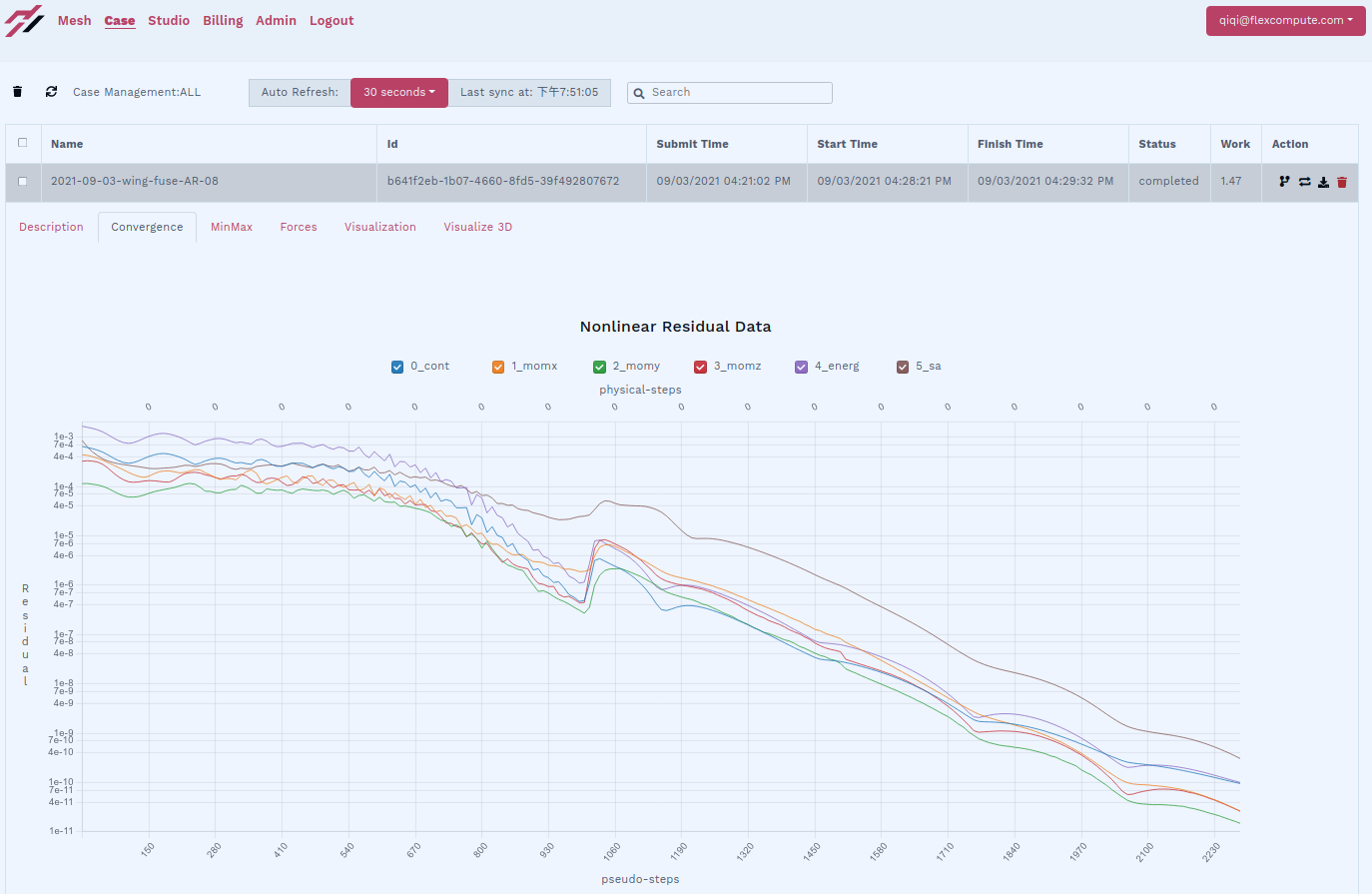

Convergence Efficiency:

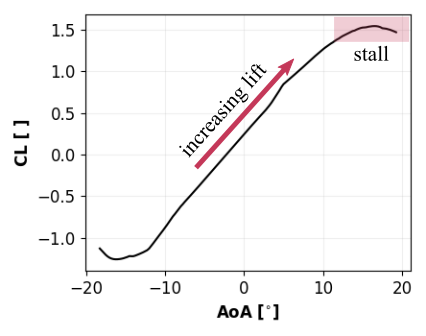

A fair comparison was maintained: a run was considered converged once the lift (CL) and drag (CD) coefficients changed by less than 0.05% over 500 pseudo steps.
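The criterion above can be sketched in a few lines of Python. This is an illustrative reading, not Flow360's implementation: the function names `is_converged` and `case_converged` are hypothetical, and "changed by less than 0.05%" is interpreted here as the peak-to-peak spread over the last 500 pseudo steps relative to the latest value.

```python
from typing import Sequence


def is_converged(history: Sequence[float], window: int = 500, tol: float = 5e-4) -> bool:
    """True when the coefficient varied by less than `tol` (relative) over the last `window` steps."""
    if len(history) <= window:
        return False  # not enough pseudo steps to judge yet
    recent = history[-(window + 1):]
    reference = abs(recent[-1])
    if reference == 0.0:
        return False  # relative change is undefined for a zero coefficient
    spread = max(recent) - min(recent)  # assumed meaning of "change" over the window
    return spread / reference < tol


def case_converged(cl_history: Sequence[float], cd_history: Sequence[float]) -> bool:
    """Both lift and drag must satisfy the criterion, as stated in the text."""
    return is_converged(cl_history) and is_converged(cd_history)


if __name__ == "__main__":
    # Synthetic, illustrative histories that settle exponentially (not solver output).
    cl = [0.50 + 0.10 * 0.99 ** i for i in range(3000)]
    cd = [0.03 + 0.01 * 0.99 ** i for i in range(3000)]
    print(case_converged(cl, cd))
```

Applying the same check to every solver version is what keeps the step counts comparable across releases.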

Results:

- January 2023: 4,900 pseudo steps to converge

- February 2025: 1,600 pseudo steps to converge

A massive 3 times reduction in steps, without compromising accuracy.

Figure 2: Convergence of CL and CD across solver releases from the last 2 years.

Runtime Efficiency:

All tests were performed on identical hardware (8× A100 GPUs).

- January 2023: ~10 minutes total runtime

- February 2025: 3.2 minutes total runtime

Figure 3: Evolution of runtime across solver releases from the last 2 years.

Even in early 2023, Flow360 was already setting a new standard, simulating a 25-million-node mesh with a stringent convergence criterion in just 10 minutes.

In contrast, traditional CPU-based CFD tools would take several hours to complete a similar case, which gives Flow360 a 100X speed advantage.

Today, with the latest release, Flow360 cuts that time down even further to just 3.2 minutes on the same hardware. Another 3X improvement in just two years.
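As a quick check on these figures, the arithmetic can be written out. The numbers below are the ones quoted in this post (the 2023 runtime is given only as roughly 10 minutes); the variable names are illustrative.

```python
# Figures quoted in the text for the same 25-million-node case on 8x A100 GPUs.
steps_2023, steps_2025 = 4900, 1600          # pseudo steps to converge
runtime_2023_min, runtime_2025_min = 10.0, 3.2  # total runtime in minutes (2023 value is approximate)

step_ratio = steps_2023 / steps_2025                 # 3.0625
runtime_ratio = runtime_2023_min / runtime_2025_min  # 3.125

# Average wall time per pseudo step, in seconds.
sec_per_step_2023 = runtime_2023_min * 60 / steps_2023  # about 0.122 s
sec_per_step_2025 = runtime_2025_min * 60 / steps_2025  # 0.12 s

print(f"step reduction:    {step_ratio:.2f}x")
print(f"runtime reduction: {runtime_ratio:.2f}x")
print(f"seconds per step:  {sec_per_step_2023:.3f} -> {sec_per_step_2025:.3f}")
```

The cost per pseudo step is nearly unchanged between the two releases, so on these numbers the 3X runtime gain comes almost entirely from needing fewer steps to converge.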

How We Made Flow360 Even Faster

The speed-up didn’t come from shortcuts. It came from smarter, more robust techniques, each rigorously tested across a wide range of cases before every release.

Smarter Time-Stepping: Adaptive CFL

In early 2023, Flow360 used a traditional Ramp CFL method that required users to set CFL values manually. Set it too aggressively, and simulations could diverge. Set it too conservatively, and convergence became painfully slow.

We eliminated this trade-off by introducing Adaptive CFL, an automatic system that dynamically adjusts CFL based on real-time solver behavior. Now, the solver accelerates convergence while guarding against instability with no manual tuning needed.
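To make the idea concrete, here is a minimal sketch of one well-known residual-based strategy, switched evolution relaxation (SER), in which the CFL number grows when the residual drops and shrinks when it rises. The post does not describe Flow360's actual Adaptive CFL algorithm, so this is a stand-in technique; the function name `ser_update` and all default limits are assumptions chosen for illustration.

```python
def ser_update(
    cfl: float,
    res_prev: float,
    res_curr: float,
    *,
    cfl_min: float = 1.0,
    cfl_max: float = 1.0e4,
    max_growth: float = 2.0,
    max_shrink: float = 0.5,
) -> float:
    """Switched evolution relaxation: scale CFL by the ratio of successive residual norms."""
    if res_curr <= 0.0:
        return cfl_max  # residual vanished, so no stability limit is needed
    factor = res_prev / res_curr                        # > 1 when the residual is dropping
    factor = min(max(factor, max_shrink), max_growth)   # limit the change per step
    return min(max(cfl * factor, cfl_min), cfl_max)     # keep CFL inside its bounds


if __name__ == "__main__":
    # Illustrative residual history (not solver output): steady decay with one setback.
    residuals = [1.0, 0.7, 0.5, 0.9, 0.4, 0.2, 0.1]
    cfl = 10.0
    for prev, curr in zip(residuals, residuals[1:]):
        cfl = ser_update(cfl, prev, curr)
        print(f"residual {curr:.2f} -> CFL {cfl:.1f}")
```

The per-step limits prevent a single noisy residual from swinging the CFL number too far in either direction, which is the kind of manual tuning the adaptive approach is meant to remove.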

Expanded Solver Robustness

Throughout 2023, we continually strengthened Flow360’s performance across a wider range of cases. Even with only a minor increase in runtime, solver reliability and accuracy improved dramatically.

Major Leap: Low-Mach Preconditioning

In mid-2024, we implemented Low-Mach Preconditioning, boosting convergence and accuracy for low-speed flows critical to:

- Take-off and landing of commercial aircraft

- VTOL hovering operations

- Automotive aerodynamics simulations

The result: faster convergence and lower runtimes across key real-world applications.
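A short worked estimate shows why low-speed flows are hard for a compressible solver. This is the standard one-dimensional Euler eigenvalue argument, offered as background rather than as a description of Flow360's internals. The characteristic speeds are $u$, $u + c$ and $u - c$, so for a Mach number $M = u/c < 0.5$ the ratio of the fastest to the slowest wave speed is:

$$
\frac{\max|\lambda|}{\min|\lambda|} = \frac{|u| + c}{|u|} = 1 + \frac{1}{M},
\qquad M = 0.15 \;\Rightarrow\; 1 + \frac{1}{0.15} \approx 7.7
$$

The ratio grows without bound as $M \to 0$, which stiffens the pseudo-time system and slows convergence. Preconditioning rescales the acoustic speeds toward the convective speed so that the ratio stays of order one.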

Streamlined WebUI: End-to-End Workflow

In late 2024, we overhauled the WebUI, consolidating geometry, meshing, and case management into a single unified project workspace. The upgrade drastically simplified workflows and cut setup time.

Pushing Limits: Smart CFL-Cut

In early 2025, we pushed Adaptive CFL even further by introducing Smart CFL-Cut. If instability is detected, Flow360 automatically trims CFL values, allowing users to run simulations more aggressively, without risking divergence.
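The general pattern of cutting CFL when instability is detected can be sketched as a step-rejection loop. This is an assumption-laden illustration, not Flow360's Smart CFL-Cut: the helper `step_with_cfl_cut`, the instability test (a non-finite or growing residual) and the toy problem are all hypothetical.

```python
import math
from typing import Callable, Tuple


def step_with_cfl_cut(
    step: Callable[[float, float], Tuple[float, float]],
    state: float,
    residual: float,
    cfl: float,
    *,
    growth_limit: float = 10.0,
    cut: float = 0.5,
    cfl_min: float = 0.1,
    max_retries: int = 8,
) -> Tuple[float, float, float]:
    """Try a pseudo step; if the residual blows up, reject it, cut CFL and retry."""
    for _ in range(max_retries):
        new_state, new_residual = step(state, cfl)
        stable = math.isfinite(new_residual) and new_residual <= growth_limit * residual
        if stable:
            return new_state, new_residual, cfl
        cfl = max(cfl * cut, cfl_min)  # step rejected: retry from the old state
    raise RuntimeError("pseudo step rejected at every trial CFL")


def toy_step(u: float, cfl: float) -> Tuple[float, float]:
    """Explicit pseudo-time step for du/dtau = -u; the residual grows when cfl > 2."""
    u_new = (1.0 - cfl) * u
    return u_new, abs(u_new)


if __name__ == "__main__":
    # Start deliberately too aggressive; require the residual not to grow at all.
    u, res, cfl = step_with_cfl_cut(toy_step, 1.0, 1.0, 6.0, growth_limit=1.0)
    print(u, res, cfl)  # accepted after two cuts: CFL 6 -> 3 -> 1.5
```

Because a rejected step is retried from the previous state, an overly aggressive starting CFL costs a few extra evaluations instead of a diverged run.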

The result?

Every innovation compounds.

Every release moves faster, smarter, and more reliably, setting a new standard for GPU-native simulation.

Why It Matters for Customers

Every breakthrough in speed directly translates to lower simulation costs.

When your case converges in 3 times fewer pseudo steps, you pay 3 times less, without any compromise in accuracy or reliability.

Faster, cheaper simulations mean you can:

- Explore broader design spaces with greater confidence

- Evaluate more flight conditions to capture edge cases earlier

- Build more comprehensive aerodynamic databases, which reduces risk in later-stage testing

- Run large-scale, high-fidelity simulations that better replicate real-world conditions

- Accelerate product development and get to market faster

Innovation isn’t just happening behind the scenes, it’s happening for you, in every simulation, every decision, and every breakthrough.

What’s Next?

Hold on tight, we’re just getting started. Our team is already charging full-speed into the next wave of breakthroughs:

- We’re pushing solver speeds even higher, especially for aeroacoustics simulations and unsteady high-fidelity runs, so time-to-solution will never slow down your innovation again.

- We’re ramping up mesh generation speed by an entire order of magnitude. Imagine starting a hundred-million-point unstructured simulation in minutes instead of hours.

- We’re also streamlining the earliest stage of your analysis workflow, geometry handling, so those gnarly, “dirty” CAD models become simulation-ready in minutes, not days of grunt work.

- Plus, our upcoming adaptive meshing and intelligent geometry technologies take the grind out of the process, automatically optimizing for the highest accuracy at the fastest turnaround.

Stay tuned. At Flexcompute, we don’t just keep pace with innovation. We set it.

Ready to move faster than ever before?

Contact us to learn how Flow360 can transform your engineering workflows.

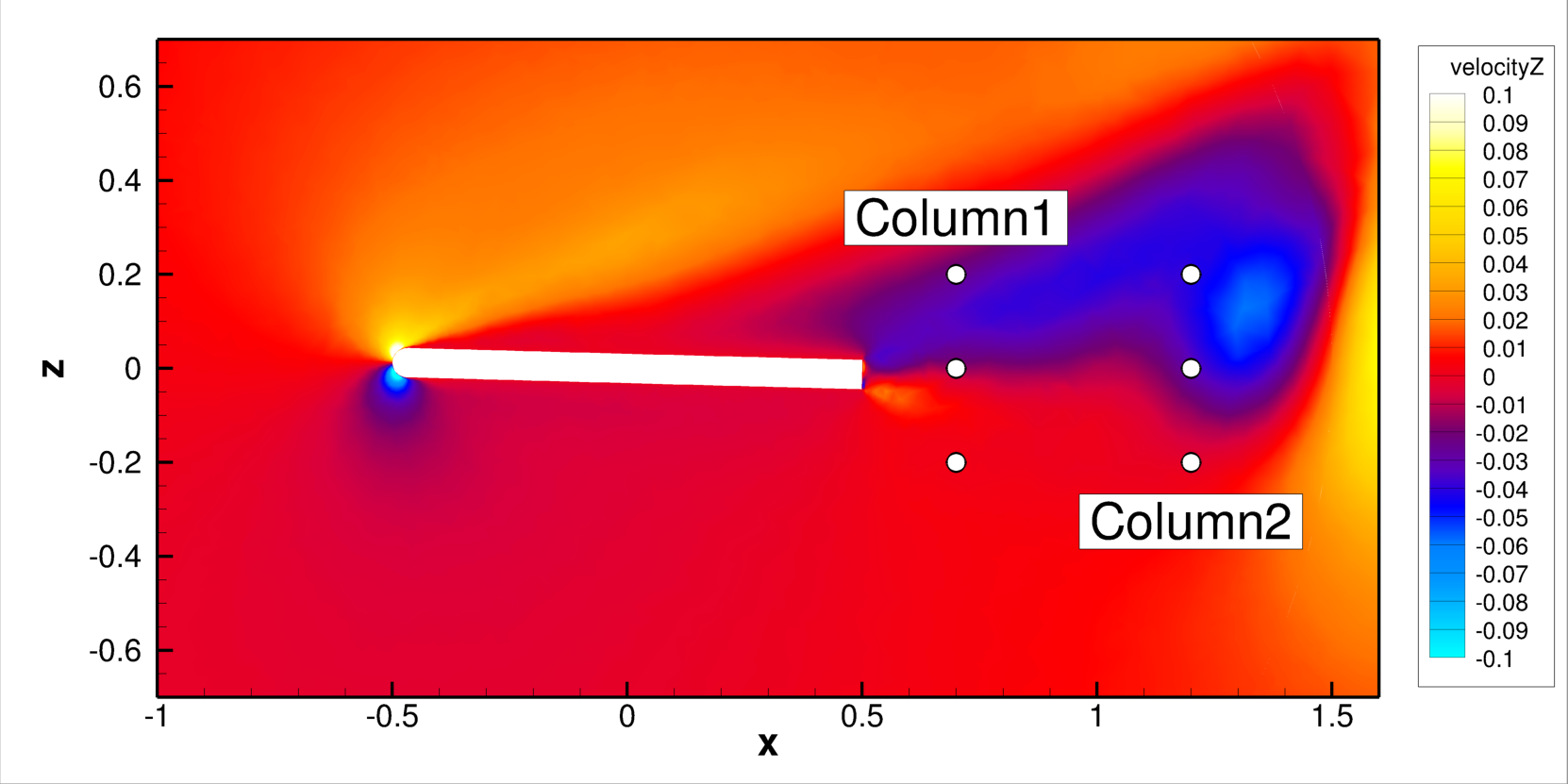

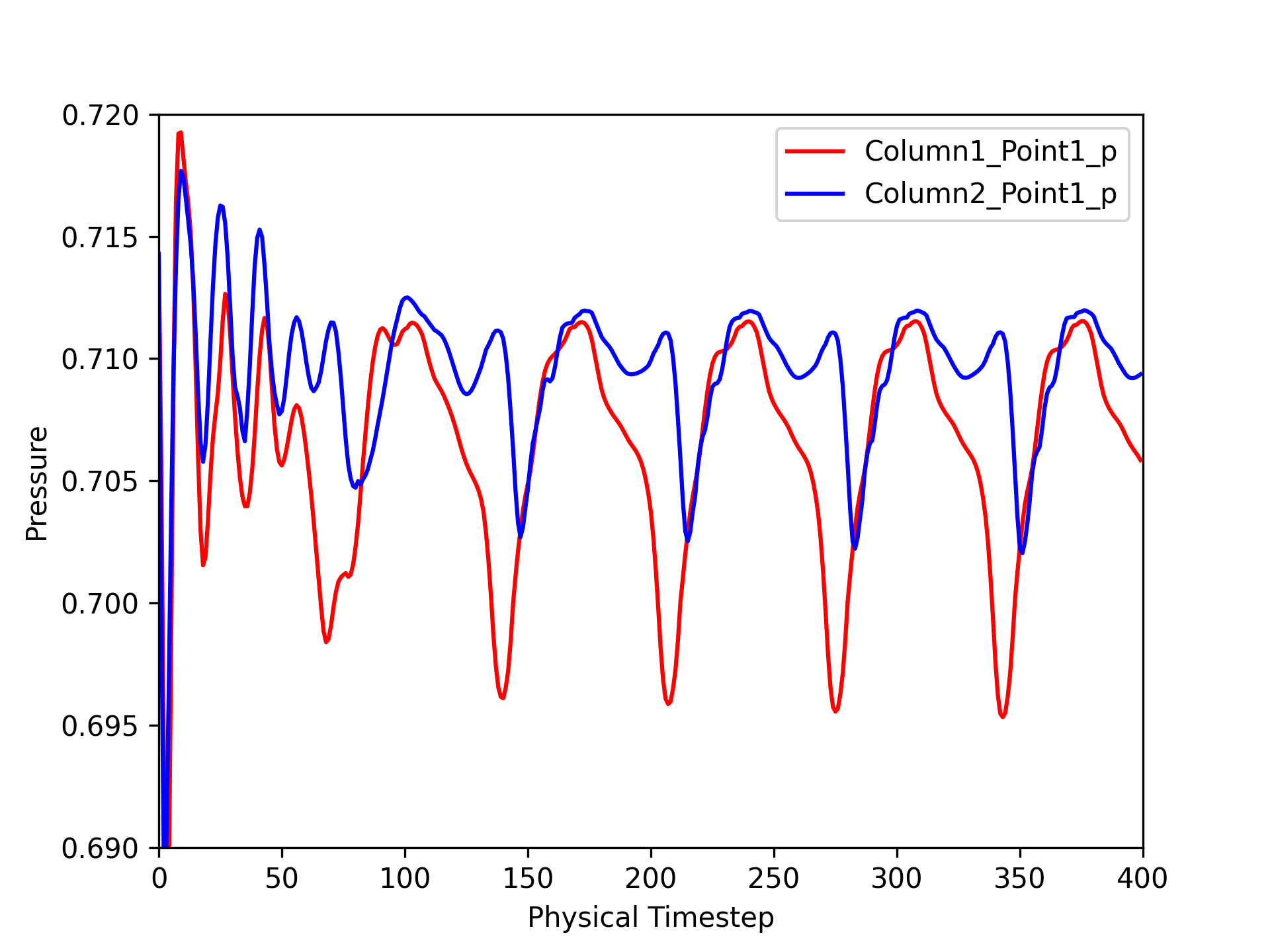

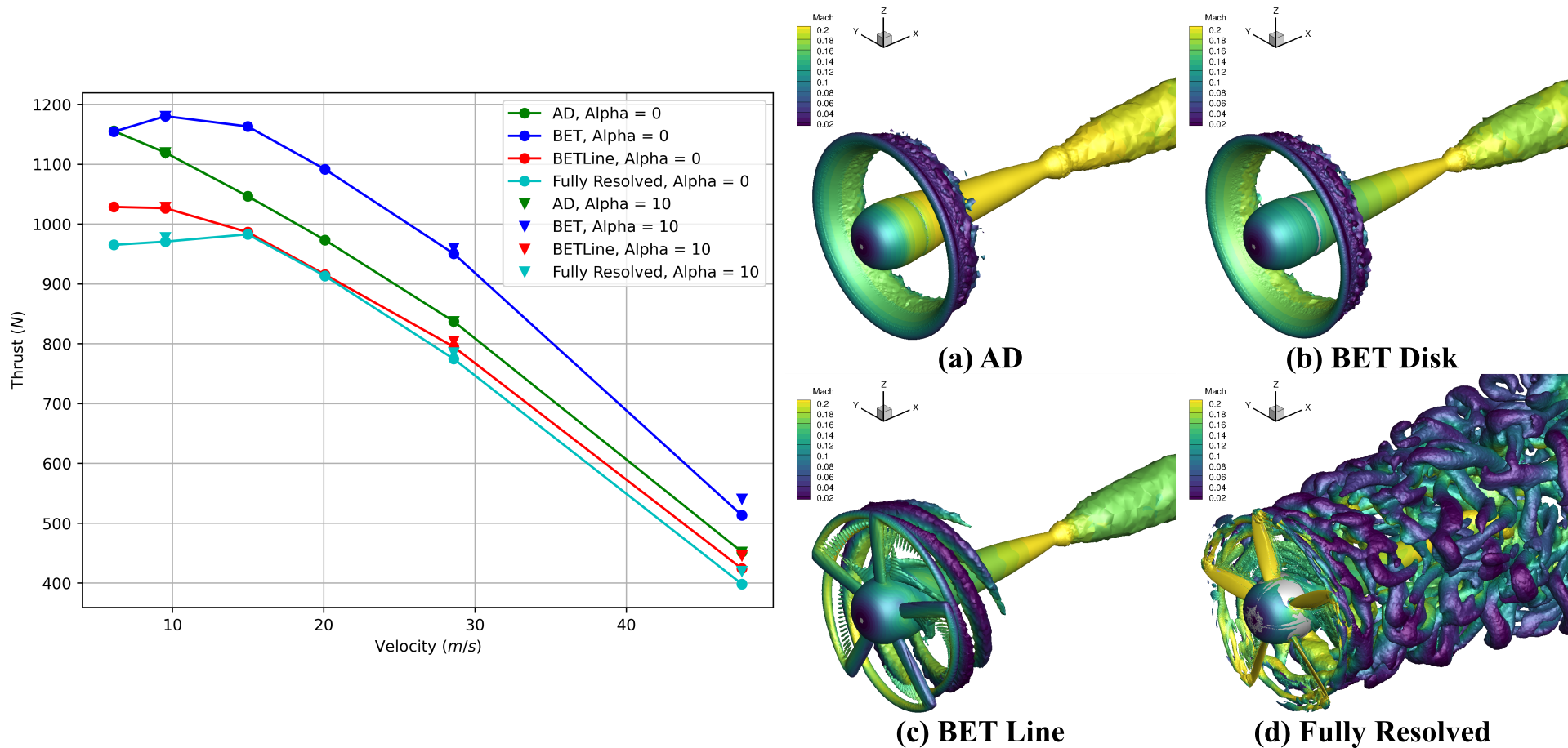

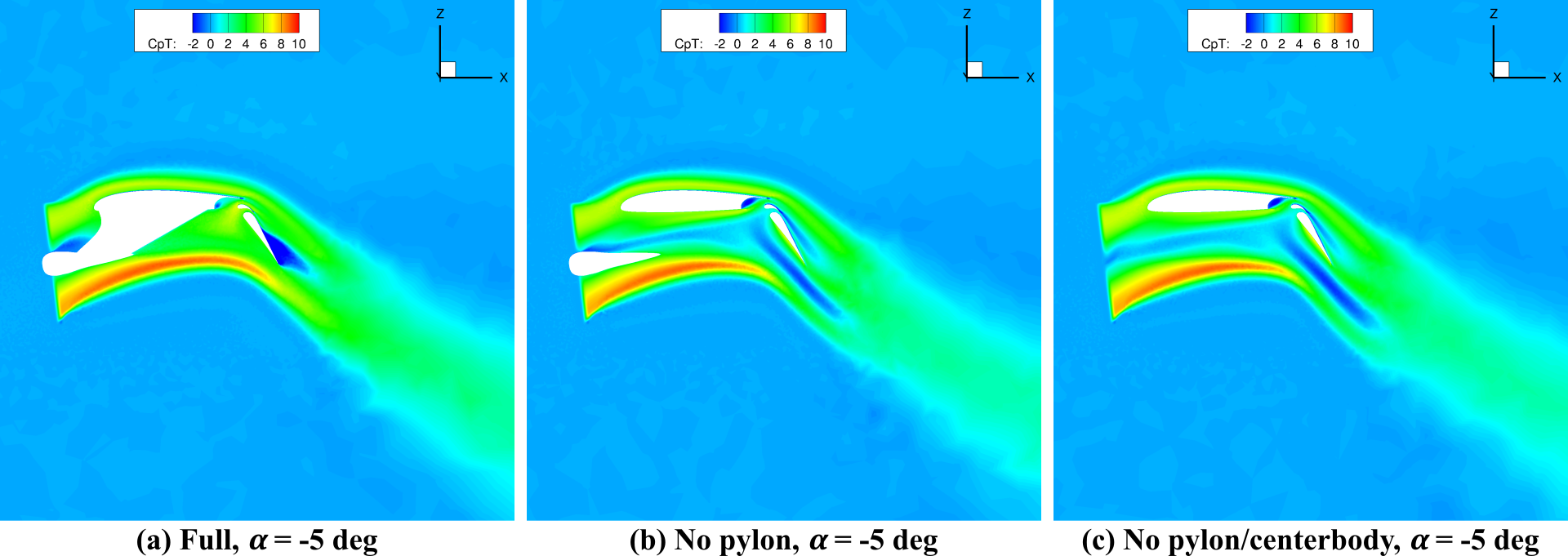

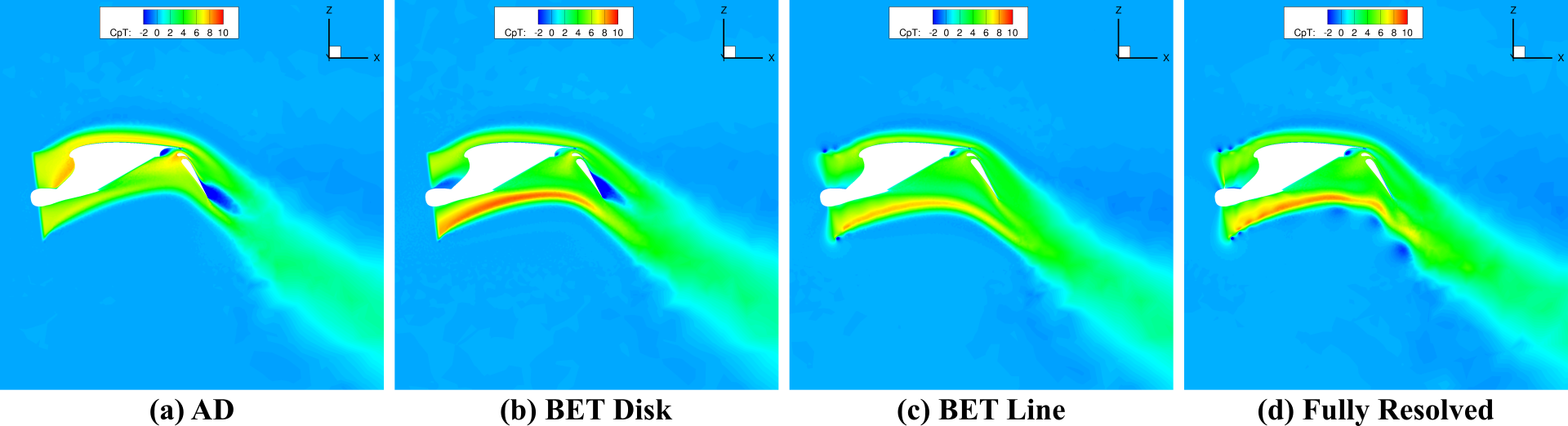

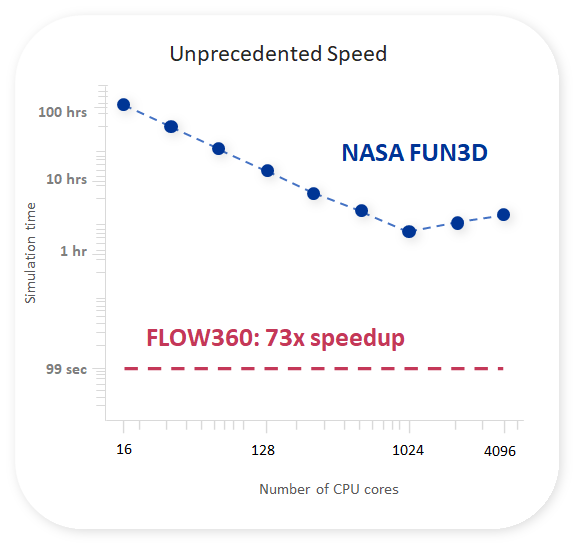

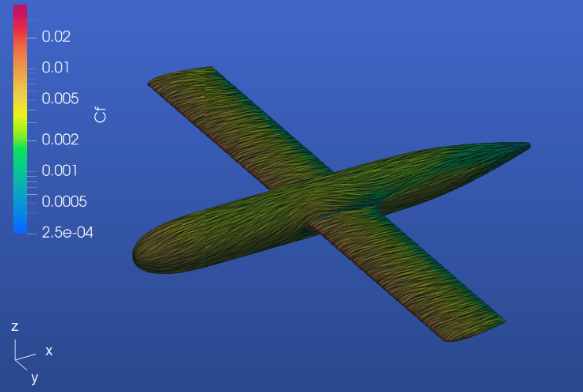

Introduction

In 2024, Flexcompute participated in the AutoCFD4 workshop, where we presented our highly accurate and fast results for the DrivAer model. The AutoCFD4 workshop is an international forum for researchers and practitioners in the field of automotive Computational Fluid Dynamics (CFD). The workshop provides a platform for the exchange of ideas and experiences on the latest developments in automotive CFD.

DrivAer Model

The AutoCFD4 workshop focuses on two test cases: the Windsor model and the DrivAer model. However, it’s the DrivAer model that generally garners more attention, primarily because it’s a reasonably accurate representation of a real-life commercial car. Its complex geometry is used to benchmark the accuracy and efficiency of CFD solvers. While the geometry, boundary conditions, and computational grid are provided by the committee, the workshop offers interesting insights into various turbulence models and numerical schemes.

Speed and Accuracy of Flow360

Traditional CFD tools require days or even weeks to perform a simulation of an automotive geometry. In contrast, Flow360 requires only 10-15 min for a RANS simulation and 1-2 hours for a DDES simulation of the DrivAer model with the committee-provided grid. With this speed, CFD engineers working on Formula 1, commercial cars, or sports cars no longer need to wait for lengthy periods to evaluate design changes. Design iterations can be done at a much faster pace, with the advantage of high accuracy of DDES simulations. Flow360’s accuracy aids automotive companies in making informed design decisions, while its speed significantly reduces time for design cycles, resulting in faster time-to-market.

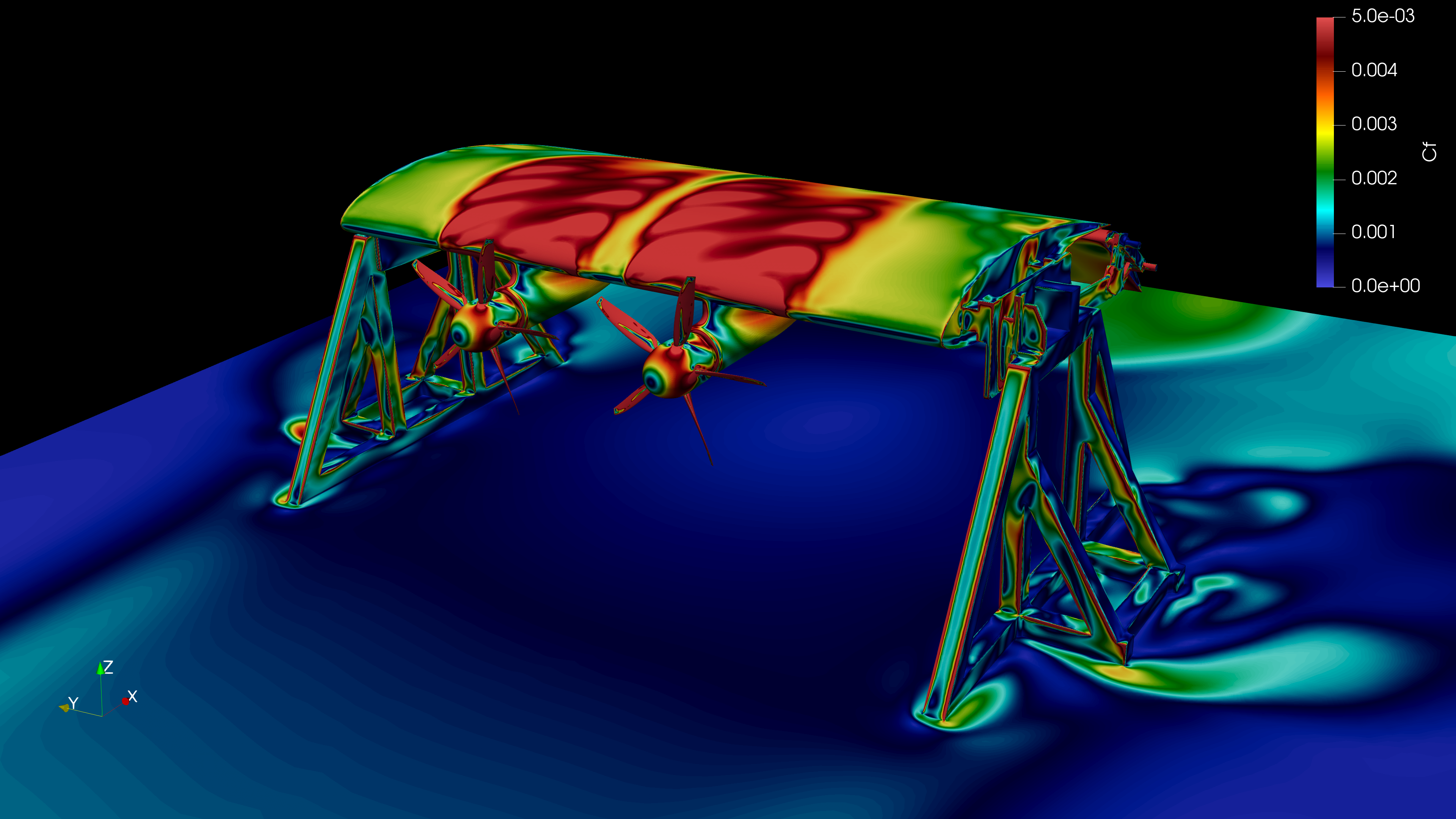

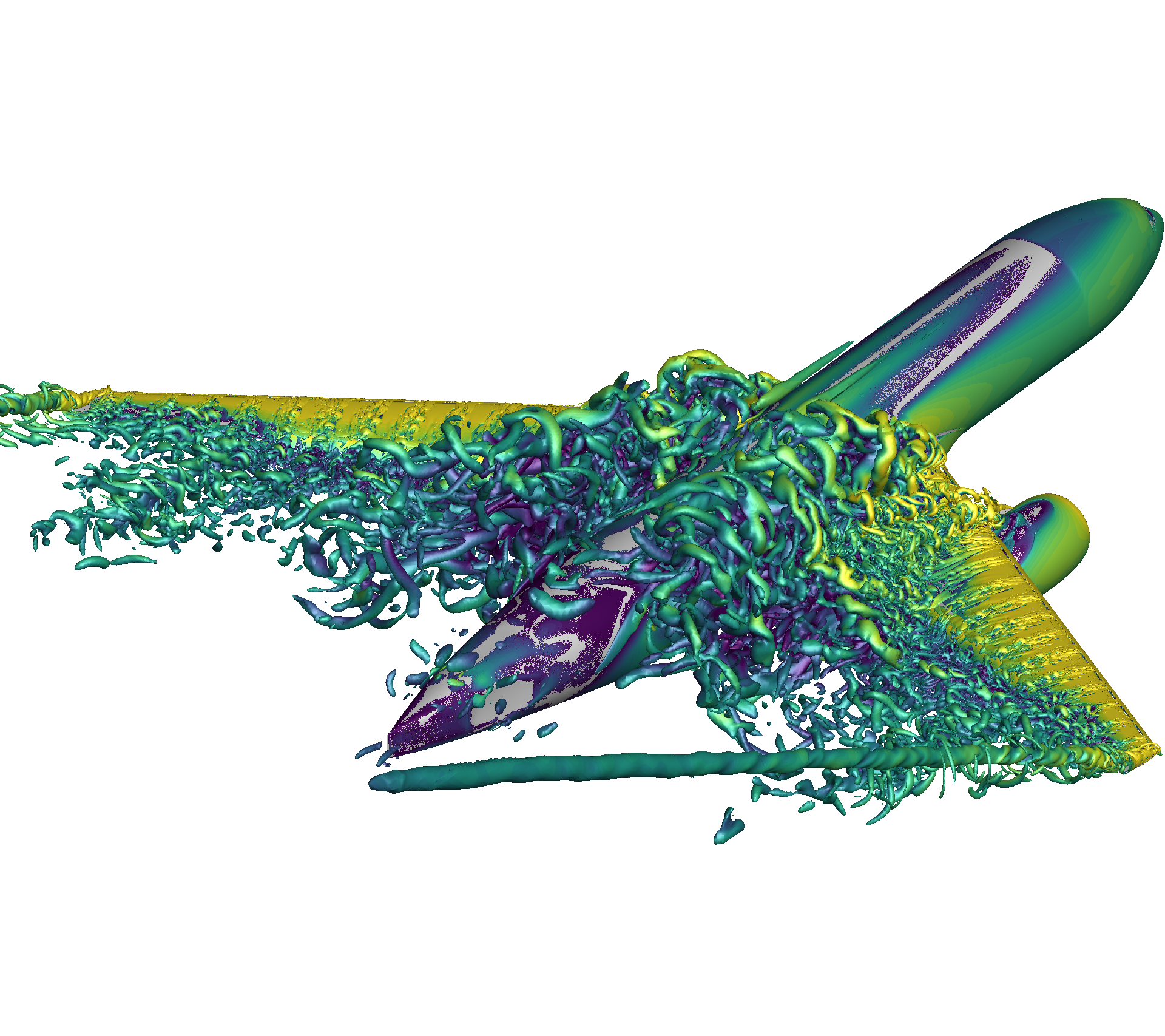

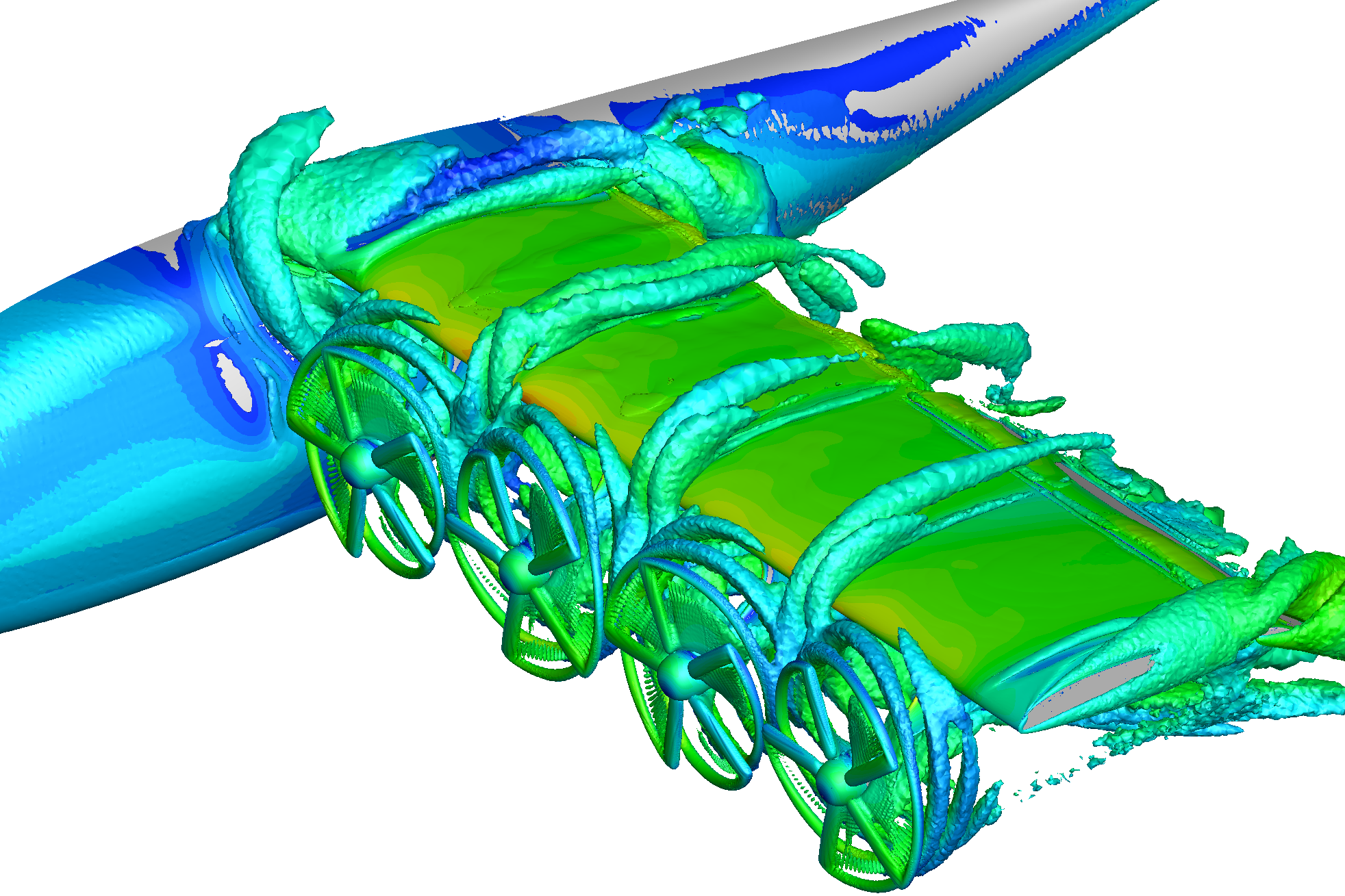

A comparison of Flow360’s DDES results for the DrivAer model with the test performed in the Pininfarina wind tunnel, shown above, highlights the capability of Flow360. For a CFD tool to be used in the rigorous design and optimization of automotive geometries, it must capture complex flow features, including corner flow, the origin and evolution of vortices, and fine wake structures. Without this capability, it is difficult to judge whether a marginal design change is acceptable. Flow360’s combination of accuracy and speed addresses this concern directly.

Conclusion

Flow360’s remarkable speed, coupled with the high accuracy of its results, showcases its potential to revolutionize automotive CFD workflows. We invite you to experience the Flow360 advantage today. Talk to an expert to learn more about Flow360.

“In our pursuit of excellence, we don’t compromise one aspect to enhance another.” – Qiqi Wang, Co-founder of Flexcompute and architect of Flow360

At Flexcompute, we live by this philosophy. During the Automotive CFD Prediction Workshop (AutoCFD4), Flow360 showcased its exceptional ability to deliver world-class speed and accuracy simultaneously.

AutoCFD is an international forum that brings together leading OEMs, universities, and CFD practitioners to benchmark simulations against wind tunnel data on standardized automotive geometries. It’s a proving ground—and Flow360 delivered.

While we’re proud to offer the fastest solver (see below), our mission goes beyond speed. Flow360 is an end-to-end platform that automates the tedious parts of simulation—geometry cleanup, meshing, setup, and reporting—so engineers can focus on what matters: innovation.

Benchmarking Flow360 on the DrivAer Model

We focused on the DrivAer model, a highly detailed and realistic geometry widely adopted for CFD benchmarking. Provided by the workshop committee, the standardized geometry, boundary conditions, and mesh ensure a level playing field. With comprehensive validation data—pressure taps, velocity probes, and PIV imagery—the DrivAer model is a rigorous testbed, and Flow360 passed with flying colors.

DrivAer Model

Figure 1: Visualization of an isosurface of Q-criterion for the DrivAer model

Fast, Automated Meshing

Flow360 includes a powerful, feature-rich meshing tool. For AutoCFD4, it generated a hex-dominant mesh with 145 million nodes in just 60 minutes. This meshing workflow is easily automated using our Python API, enabling seamless integration into streamlined engineering pipelines.

Figure 2: Visualization of the mesh for the DrivAer model generated with Flow360’s meshing tool

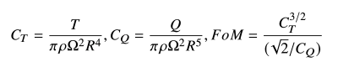

RANS Predictions by the Time You Finish Your Coffee (10 Min)

Traditional CFD tools take days to simulate realistic automotive geometries. Flow360 changes the game.

We completed a full RANS simulation of the DrivAer model in just 10 minutes on 48 A100 GPUs—without compromising accuracy. This was made possible by:

- A blended central/upwind scheme to reduce numerical viscosity

- A low Mach number preconditioner to enhance convergence

- An adaptive CFL strategy for stability and robustness

- User control over turbulence model coefficients, including an α₁ adjustment in the K-ω SST model (set to 0.4 to better capture separation over the roof)

The integrated force predictions from Flow360 showed excellent agreement with physical test data, with just 2 drag counts of difference—a level of accuracy rarely seen at this speed.
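For readers unfamiliar with the unit, one drag count is a drag-coefficient difference of 0.0001. The sketch below shows the conversion; the CD values in the example are hypothetical placeholders chosen to give a 2-count gap, not the workshop data, and the function names are illustrative.

```python
DRAG_COUNT = 1.0e-4  # one drag count = 0.0001 in drag coefficient


def drag_count_difference(cd_a: float, cd_b: float) -> float:
    """Difference between two drag coefficients, expressed in drag counts."""
    return (cd_a - cd_b) / DRAG_COUNT


def relative_difference_percent(cd_a: float, cd_b: float) -> float:
    """Same difference as a percentage of the reference value cd_b."""
    return 100.0 * (cd_a - cd_b) / cd_b


if __name__ == "__main__":
    cd_simulation = 0.2502  # hypothetical value, for illustration only
    cd_test = 0.2500        # hypothetical value, for illustration only
    print(f"{drag_count_difference(cd_simulation, cd_test):.1f} counts")
    print(f"{relative_difference_percent(cd_simulation, cd_test):.2f} %")
```

For a car with CD near 0.25, a 2-count difference is less than a tenth of a percent, which puts the agreement quoted above in perspective.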

Figure 3: Comparison of integrated forces between Flow360 RANS and test data, showing a difference of only 2 drag counts

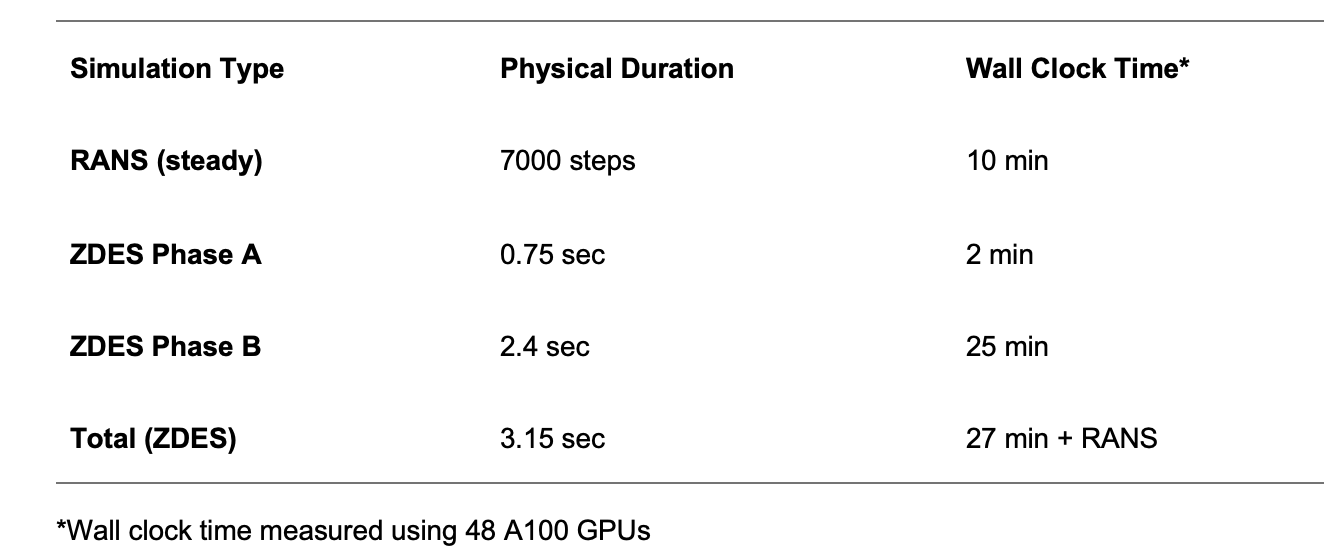

Scale-Resolved Simulation by the Time You Finish Lunch (37 min)

As OEMs increasingly adopt transient simulations for greater accuracy and faster design cycles, Flow360 continues to push boundaries. One major leap: support for Zonal Detached Eddy Simulation (ZDES) using the Deck-Renard shielding function, enhancing separation prediction in critical flow regions.

For AutoCFD4, we submitted a ZDES simulation using this approach. Total wall time: just 37 minutes, including both phases below:

Table 1: Simulation Timing Summary (wall clock time measured using 48 A100 GPUs)

This two-phase ZDES approach runs automatically in sequence with adaptive time-stepping:

- A coarse time step (5e-3 s, CFL = 50 on a resolved mesh zone) to flush the RANS solution for 0.75 s, completed in 2 minutes on 48 A100 GPUs

- A fine time step (1e-3 s, CFL = 10) for the main ZDES run, averaged over the last 2 seconds, completed in 25 minutes on 48 A100 GPUs
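The step counts implied by these settings follow directly from the quoted numbers. The sketch below assumes the CFL values are convective CFL numbers, CFL = u·Δt/Δx, so that Δt/CFL gives the time a fluid parcel takes to cross one cell; that interpretation, and the helper names, are assumptions. The 2 seconds is only the averaging window, so the step count for the fine phase is a lower bound.

```python
import math

# Values quoted in the text: time step [s], CFL number, simulated duration [s].
phases = {
    "flush": {"dt": 5e-3, "cfl": 50.0, "duration": 0.75},
    "averaging": {"dt": 1e-3, "cfl": 10.0, "duration": 2.0},
}


def n_steps(dt: float, duration: float) -> int:
    """Number of physical time steps needed to cover `duration`."""
    return round(duration / dt)


def cell_transit_time(dt: float, cfl: float) -> float:
    """dx / u implied by CFL = u * dt / dx (assumed convective definition)."""
    return dt / cfl


if __name__ == "__main__":
    for name, p in phases.items():
        steps = n_steps(p["dt"], p["duration"])
        transit = cell_transit_time(p["dt"], p["cfl"])
        print(f"{name}: {steps} steps, dx/u = {transit:.1e} s")
    same = math.isclose(
        cell_transit_time(5e-3, 50.0), cell_transit_time(1e-3, 10.0)
    )
    print("consistent dx/u across phases:", same)
```

Both phases imply the same cell transit time of 1e-4 s, so the two CFL values describe the same mesh zone and flow speed; only the time step changes between the flush and the main run.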

All of this, without sacrificing fidelity. Flow360’s ZDES results match physical testing within 1 drag count, capturing complex flow physics that engineers can trust.

Figure 8: Flow360 ZDES prediction of delta forces between two DrivAer configurations, compared with test data

Right Answers for the Right Reasons

Physically accurate flow features are essential for design optimization. Many CFD tools struggle to resolve complex flow phenomena, particularly around critical regions like the A-pillar and side mirror, where flow separation and downstream vortices significantly impact the pressure distribution on the side window. Flow360’s ZDES results, however, show close agreement with test data, validating its ability to capture the true physics of the flow.

This high level of accuracy continues along the symmetry plane of both the upper body and underbody, where Flow360’s pressure predictions align closely with wind tunnel data. Such consistency reinforces trust in the solver’s reliability for production use.

Even in the most challenging regions—such as the rear wake and underfloor—Flow360 delivers. The wake contours show excellent agreement with test results, clearly demonstrating that Flow360 captures not just trends, but the detailed unsteady structures that matter most to real-world aerodynamic performance.

Figure 5: Visualization of the flow structure around the A-pillar and side mirror, along with the excellent agreement between Flow360 and test data for the pressure prediction on the side mirror

Delta Prediction: Where It Counts in Design Optimization

In the production stage, the priority shifts from absolute accuracy to capturing aerodynamic deltas—the impact of design changes on performance. At this point, vehicle designs are largely frozen, and the focus shifts to fine-tuning specific components—such as mirrors, underbody panels, or wheel deflectors—to optimize efficiency. What matters most is the ability to reliably capture how each design tweak impacts overall drag performance.

One of AutoCFD4’s most important test cases was the front wheel arch deflector delta—a highly relevant real-world scenario given how often such features are refined late in the design cycle.

Figure 8: Flow360 ZDES prediction of delta forces between two DrivAer configurations, compared with test data

Flow360 accurately captured both the magnitude and trend of the delta, enabling confident decision-making and reducing reliance on costly wind tunnel validation. It’s precisely why Flow360 is already trusted in production by leading automotive OEMs.

Real-World Validation: NIO Success Story

Flow360 isn’t just proven in benchmarks—it’s trusted in production. In collaboration with NIO Inc., we validated over 60 designs across SUVs and sedans. The results:

- Fastest Speed and Efficiency: Flow360’s simulation time is 10-100 times faster than traditional methods, completing complex simulations in just an hour on 8 L20 GPUs (10 minutes on 8 H200 GPUs)

- Unparalleled Accuracy: More than 94% of simulations show less than 3% deviation from physical wind tunnel tests, ensuring that design decisions are based on reliable data.

Many tools struggle with robustness and consistency across extensive test scenarios. Flow360 delivers.

In the example below, Flow360 accurately captured the design delta Cd caused by a small change to the air intake. This was particularly challenging because the intake is located near the wheel well. With Flow360, you can have confidence in your results to make the right decisions.

Figure 9: Accurate prediction of delta Cd by Flow360 as compared to a competitor for a design change due to air-intake

And the Best Part? We’re Just Getting Started

Flow360 combines unmatched solver speed with high-fidelity results—redefining how automotive CFD gets done. But this is just the beginning.

Behind Flow360 is a world-class team shaping the future of simulation. We’re proud to be working with some of the most respected minds in the field—like Dr. Philippe Spalart, the pioneer behind the Spalart-Allmaras turbulence model; Dr. Mike Park, former NASA researcher and global leader in adaptive mesh refinement; and Dr. Roberto Della Ratta Rinaldi, former senior aerodynamicist at Aston Martin and McLaren, with over 15 years at the forefront of automotive aero analysis and methodology development.

Together, this team isn’t just evolving CFD—they’re accelerating it beyond anything the industry has seen. Flow360 enables interactive workflows at unprecedented speed, taking engineers from geometry to insight in hours, not days.

And there’s more ahead. In Q3 2025, we’ll be launching a major release focused on geometry, further simplifying simulation and introducing new levels of automation and intelligence into the workflow.

If you’re building the future, choose a partner that represents the future.

Experience Flow360—and see how fast innovation can move. Talk to an expert to learn more about Flow360.

Flexcompute Partners with Samsung Display to Advance Optical Simulation in Display Technology

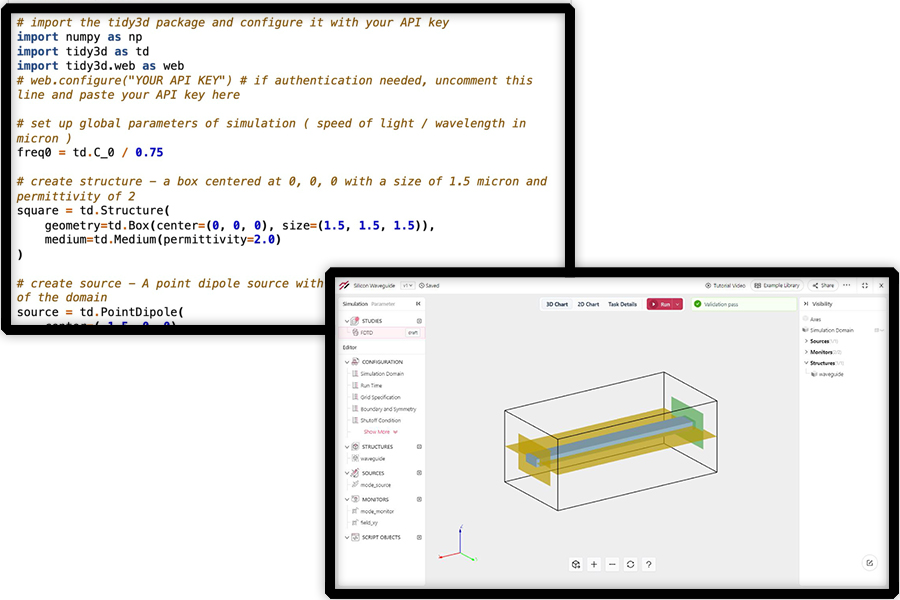

We are excited to announce a groundbreaking partnership between Flexcompute and Samsung Display. As a global leader in advanced display technologies, Samsung Display has chosen Tidy3D, Flexcompute’s flagship electromagnetic simulation platform, to power its next-generation optical display simulations and analyses.

A New Era in Display Innovation

With display technologies becoming increasingly complex and performance-driven, the need for fast, accurate, and multi-physics simulation tools has never been greater. Tidy3D rises to this challenge with its GPU-accelerated architecture, delivering exceptional computational speed without compromising on accuracy. The platform supports a wide range of simulation capabilities that are essential for modeling advanced display structures—from thin-film interference to light extraction and beyond.

Samsung Display’s decision to integrate Tidy3D into their workflow reflects the platform’s ability to meet the highest standards of industrial R&D. Whether it’s evaluating electromagnetic behavior, optimizing light propagation, or ensuring compatibility with multi-physics requirements, Tidy3D enables rapid, detailed insights into complex optical systems.

Why Tidy3D?

Tidy3D is built for performance and precision. It allows researchers and engineers to:

- Run simulations in a fraction of the time required by traditional FDTD solvers, so they can publish faster and increase their research output.

- Design photonic circuits, simulate performance, and generate fabrication-ready layouts within one streamlined workflow, through Tidy3D’s native integration with PhotonForge, the unified platform for fabrication-aware photonic design.

- Incorporate material models and boundary conditions for accurate physical representation.

- Conduct parametric sweeps and optimizations with minimal setup.

- Leverage adjoint-based inverse design for innovative device geometries.

These features are expected to play a critical role in keeping Samsung Display at the forefront of display technology by accelerating innovation through high-performance computing.

Looking Ahead

This partnership not only validates the capabilities of Tidy3D but also signifies a broader movement toward simulation-driven innovation in consumer electronics. Flexcompute is proud to support Samsung Display’s continued innovation, and is committed to empowering engineers and scientists with the tools they need to push the boundaries of what’s possible in display design.

As we move forward, we look forward to sharing more updates on how Tidy3D is being used to develop the next generation of visual experiences.

Flexcompute Unveils High-Fidelity Physics Simulation Powered by NVIDIA Blackwell Platform for a New Paradigm of Speed

Innovative companies including Beta Technologies, Celestial AI, Dufour Aerospace, JetZero, Joby Aviation, and Kyocera SLD Laser, Inc. adopt Flexcompute powered by NVIDIA Blackwell

[Boston, March 18, 2025] – Flexcompute, the leading provider of multi-physics simulation technology, announced support for the NVIDIA Blackwell platform, marking the dawn of a new era in simulation capabilities. As a GPU-native, high-fidelity solution already known for being 100 times faster than leading simulation technologies, Flexcompute products powered by Blackwell will enable its customers to conduct high-fidelity physics simulations faster than ever before.

“We are thrilled to offer our customers early access to our products accelerated by NVIDIA Blackwell GPUs,” said Vera Yang, President of Flexcompute. “Leveraging NVIDIA’s cutting-edge GPU technology to Flexcompute’s industry-leading simulation platform, we are enabling engineers to solve complex real-world problems faster and more accurately than ever before. This collaboration marks a new era of simulation-driven innovation, where design cycles are accelerated, and breakthroughs become reality.”

“NVIDIA Blackwell is powering a new era of computing, delivering exceptional performance for the most demanding applications. Flexcompute’s adoption of Blackwell enables industries to tap into the full potential of this revolutionary technology, transforming the way simulations are created and accelerating the path from concept to reality,” Tim Costa, senior director for CAE and CUDA-X at NVIDIA said.

Some of the most innovative companies in aerospace, automotive, electronics, and technology are already leveraging Flexcompute’s simulation technology powered by NVIDIA Blackwell including Beta Technologies, Celestial AI, Dufour Aerospace, JetZero, Joby Aviation, Kyocera SLD Laser, Inc., and more. Customer use cases include:

- Beta Technologies leverages Flow360 powered by NVIDIA’s Blackwell for high-fidelity blade-resolved simulation to optimize aerodynamics.

- Celestial AI is simulating large metasurface structures at a scale previously impossible.

- JetZero leverages Flow360 powered by NVIDIA’s Blackwell to optimize its designs to reduce carbon emissions.

- Joby Aviation simulates aeroacoustic impact for their aircraft design.

- Kyocera SLD Laser, Inc. simulates optical amplifiers for laser diode manufacturing with speed and scale.

- Wisk is using Blackwell-accelerated Flexcompute software to corroborate vertical descent maneuvers in ground effect.

The collaboration between Flexcompute and NVIDIA marks an exciting leap forward in simulation technology, empowering companies to dramatically reduce time-to-market while ensuring the highest level of accuracy and precision in complex designs.

About Flexcompute

At Flexcompute, innovation is not just a principle—it’s the foundation of everything we do. Born from the minds of engineers at MIT and Stanford, we push the boundaries of what’s possible in simulation technology. With our GPU-native technology, seamlessly integrated into existing workflows, we enable teams to innovate faster, reduce costs, and minimize risks—bringing better products to market in less time. Our mission is to make hardware innovation as easy as software. Learn more at flexcompute.com.

Flexcompute announces PhotonForge, a groundbreaking photonic design automation platform that unifies the entire Photonic Integrated Circuit (PIC) development process into one seamless environment. With the rise of photonics as the solution to communication bottlenecks in modern data centers, PhotonForge offers an integrated solution to meet the industry’s most pressing challenges.

Computing power has skyrocketed by 60,000 times in recent years, while input/output bandwidth and memory speeds have struggled to keep pace. This has created a performance gap that threatens to stall innovation in AI and large-scale computing. PhotonForge empowers designers to unlock the bandwidth and energy efficiency required for tomorrow’s most demanding applications, paving the way for scalable, efficient, and reliable photonic advancements.

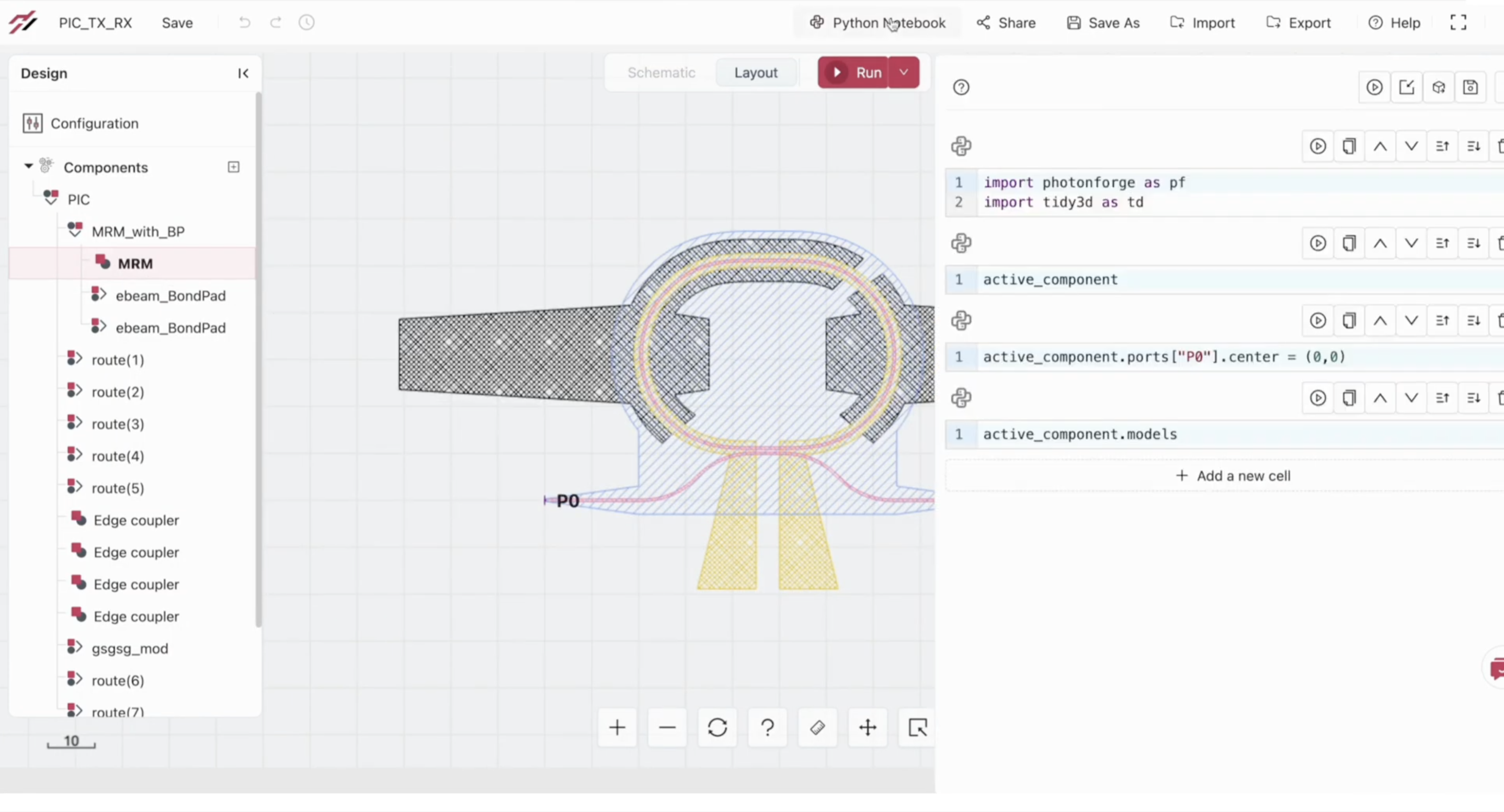

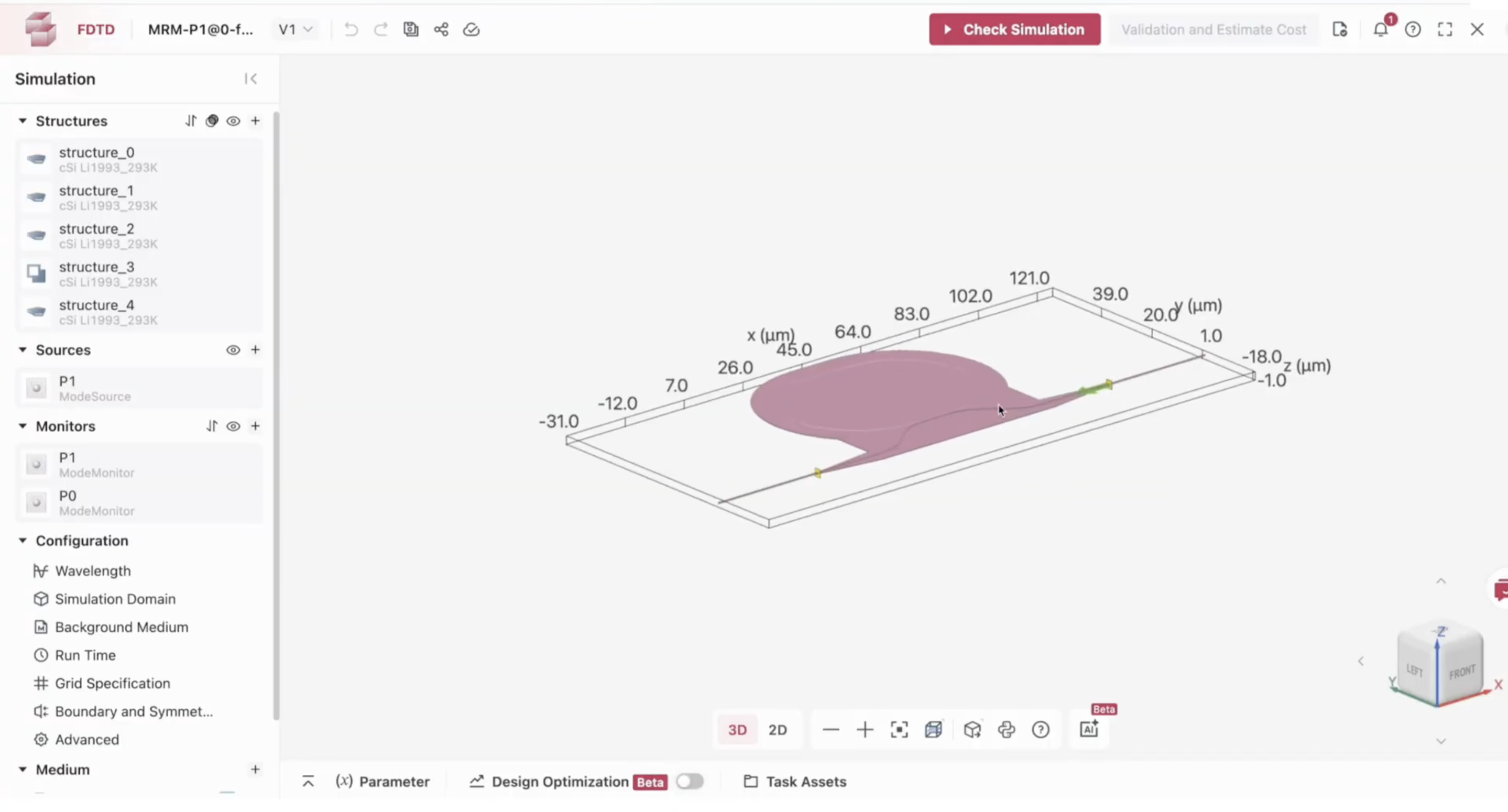

Streamlined End-to-End Workflow for PIC Design

PhotonForge empowers photonic designers by integrating design, optimization, simulation, and fabrication-ready layouts into a seamless interface. With this innovative solution, designers can effortlessly create foundry-ready designs while maintaining the precision and flexibility required in today’s fast-evolving photonics landscape.

PhotonForge addresses a critical challenge in photonics: unifying diverse tools and workflows into a cohesive, end-to-end solution. By leveraging GPU-accelerated, multi-physics solvers and enabling compatibility with foundry Process Design Kits (PDKs), PhotonForge delivers:

- Layout editor

- Device-level simulation (optical, electrical)

- Compact model generation

- Circuit-level simulations

- Signal integrity analysis

This integrated approach reduces tape-out errors, shortens time-to-market, and lowers development costs to accelerate photonic innovation.

“PhotonForge is a groundbreaking solution that redefines photonic device design and automation,” Flexcompute President Vera Yang said. “We are empowering innovators to accelerate design, reduce time-to-market, and unlock new growth.”

Next-Level Performance with GPU-Accelerated Simulations

One of PhotonForge’s most powerful features is its GPU-accelerated multi-physics capabilities. Powered by Flexcompute’s cutting-edge solvers, including FDTD, MODE, RF, and CHARGE, PhotonForge enables simulations up to 500 times faster than traditional methods. This game-changing speed allows designers to explore more possibilities, optimize designs faster, and bring products to market with greater confidence and efficiency.

“The industry’s major players—TSMC, Broadcom, and Intel—are all doubling down on co-packaged optics to turbocharge I/O bandwidth,” said Prashanta Kharel, PhD, Technology Strategist at Flexcompute. “GPU-accelerated computing is the only way to tackle the complex, multi-dimensional problems standing in the way. It’s the future of photonics, and we’re making it happen.”

Pioneering the Future of Photonic Automation

PhotonForge is more than a tool—it’s a platform that empowers designers to push the boundaries of what’s possible in photonics. By combining advanced GPU-accelerated multi-physics simulation technology with an intuitive, unified workflow, it is redefining the future of photonic active device automation and enabling innovation at scale. Learn more.

The world of integrated photonics is evolving rapidly, and with it comes the need for tools that can keep pace with increasingly complex design and simulation requirements. PhotonForge is at the forefront of this revolution, offering a next-generation photonic design automation platform that consolidates the entire Photonic Integrated Circuit (PIC) development workflow into a single, seamless environment.

PhotonForge empowers photonic designers by uniting design, optimization, simulation, and fabrication-ready layouts in a way that is efficient, scalable, and reliable. Let’s explore how PhotonForge is redefining photonic active device automation and enabling foundry-ready designs with ease.

A Unified Platform for PIC Design and Fabrication

PhotonForge addresses a major challenge in photonics: integrating diverse tools and workflows into a streamlined, end-to-end solution. By leveraging GPU-accelerated, multi-physics solvers and incorporating support for foundry Process Design Kits (PDKs), PhotonForge unifies:

- Layout editor

- Device-level simulation (optical, electrical)

- Compact model generation

- Circuit-level simulations

- Signal integrity analysis

This comprehensive approach minimizes the risk of tape-out errors, shortens time-to-market, and reduces development costs.

Powering Photonic Simulations with GPU

One of PhotonForge’s standout features is its GPU-accelerated multi-physics capabilities. Flexcompute’s advanced multi-physics solvers, such as FDTD, MODE, RF, and CHARGE, enable simulations up to 500 times faster than traditional methods. For photonic designers, this means drastically reduced iteration times and the ability to explore more design possibilities in less time.

“GPU-accelerated computing is the future of photonics,” said Prashanta Kharel, PhD, Technology Strategist at Flexcompute. “Our tools enable simulations that would take months to complete on CPUs to be finished in minutes, unlocking new possibilities for innovation.”

In a recent demonstration, PhotonForge showcased its ability to load a foundry PDK, perform RF and optical simulations, and conduct time-domain analyses, all while generating fabrication-ready layouts for an ultra-high-speed electro-optic modulator in thin-film lithium niobate (TFLN). This level of integration ensures that designs are both accurate and fabrication-aware, eliminating the costly surprises that can arise during manufacturing.

“We’re talking up to a 500x speed boost in component simulations. Without GPU acceleration, this would be impossible. The simulations would take months—not minutes. This unlocks entire new realms of possibility for innovation,” Lucas Heitzmann Gabrielli, PhotonForge Product Manager at Flexcompute said.

Foundry PDK Integration: The Key to Seamless Design-to-Fabrication

Support for foundry PDKs is a cornerstone of PhotonForge. These PDKs enable designers to create foundry-aware, tape-out-ready designs, bridging the gap between concept and production. By providing pre-validated building blocks and ensuring compliance with manufacturing constraints, PhotonForge helps designers avoid errors and streamline the path to fabrication.

Optical and Electrical Simulations from a Unified Interface

Most PICs used in real-world applications are active devices, in which electrical signals generate, manipulate, and detect optical signals. In PhotonForge, the same simulation setup can be used to run accurate optical and radio-frequency (RF) simulations to design active devices such as high-speed modulators. Users no longer have to jump between tools and deal with a fragmented workflow for active device design.

End-to-End Circuit Simulations

PhotonForge doesn’t stop at individual device simulations; it extends its capabilities to circuit-level analyses. With tools for both frequency and time-domain simulations, designers can model and optimize entire systems, encompassing both active and passive components. This holistic approach is critical for ensuring the performance and reliability of photonic circuits in real-world applications.

Get Started with PhotonForge

PhotonForge is transforming the way photonic devices and circuits are designed, simulated, and brought to market. With its unified platform, GPU-accelerated simulations, and foundry-ready design capabilities, PhotonForge empowers designers to tackle the most complex challenges in integrated photonics and is paving the way for the next generation of photonic innovation. Learn more about PhotonForge, or get started using the installation instructions.

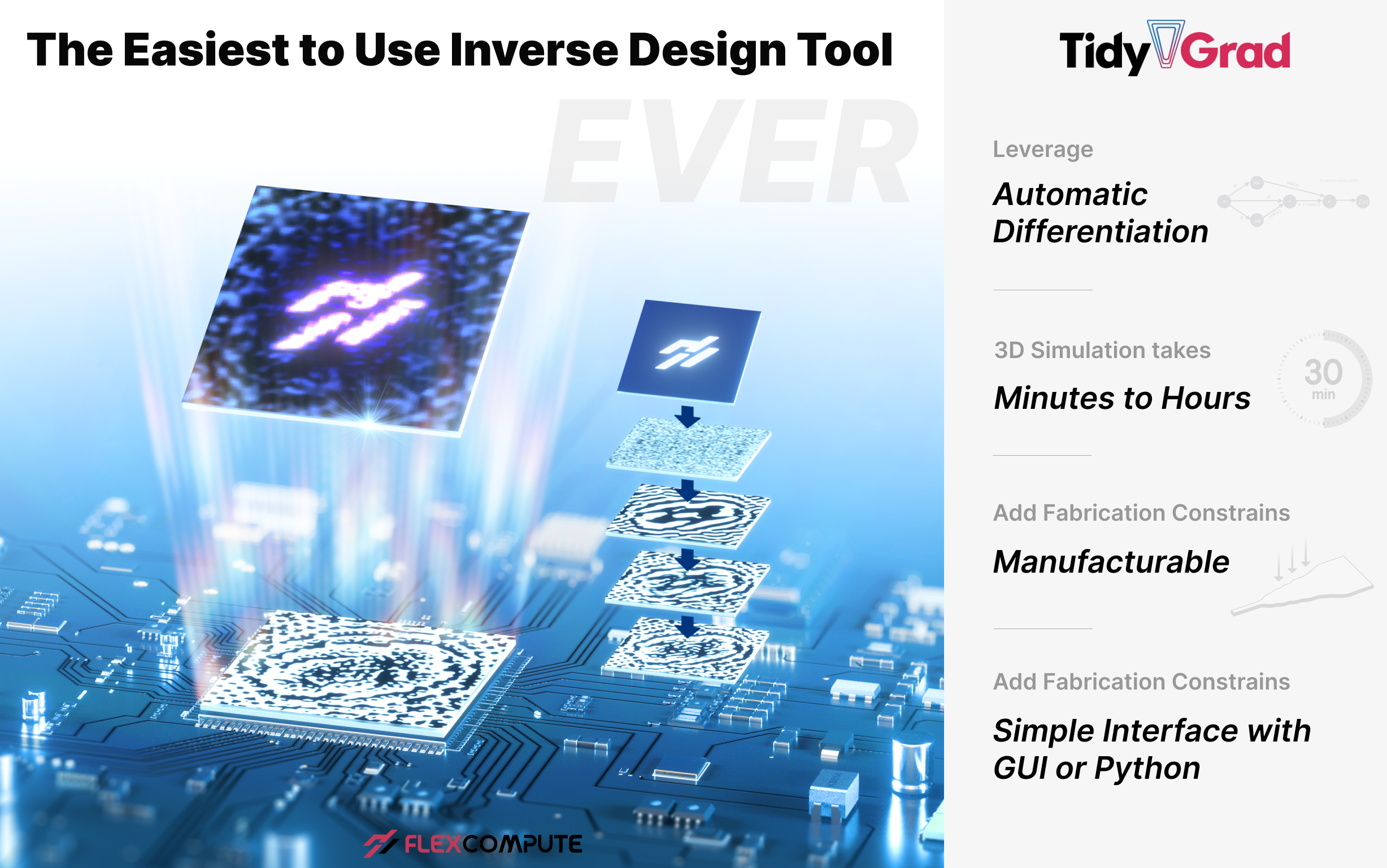

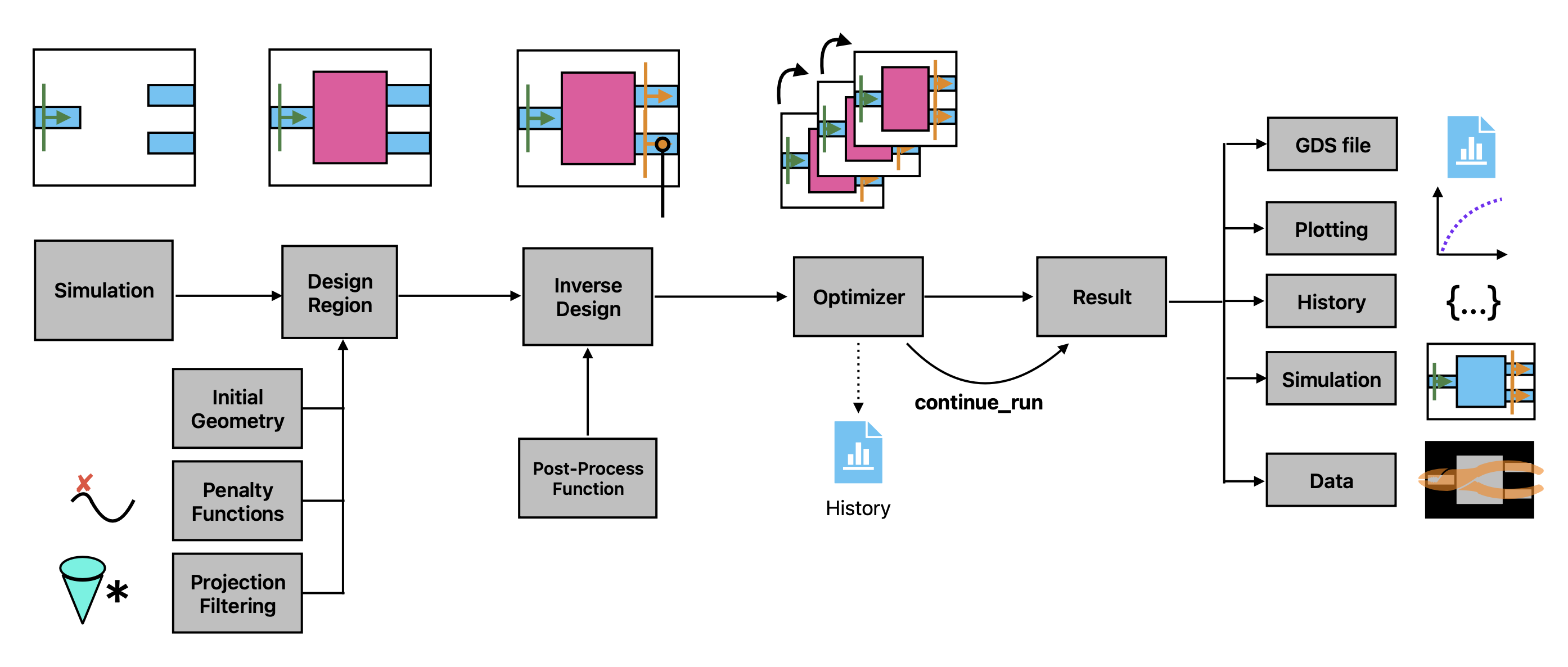

What is inverse design?

Inverse design is a method for automatically generating photonic devices that meet custom performance metrics and design criteria. One first defines an objective function to maximize with respect to a set of design parameters (such as geometric or material properties), subject to constraints. A gradient-based optimization algorithm then maximizes this objective, yielding a device that satisfies the performance specifications, often with unintuitive geometries that outperform conventional approaches. The technique is enabled by the “adjoint” method, which computes the required gradient using only one additional simulation, even if the gradient has thousands or millions of elements, as is common in inverse design applications.
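To make the "one additional simulation" claim concrete, here is a minimal sketch of the adjoint method on a toy problem. It is not Tidy3D or TidyGrad code: the 2x2 linear system, the functions `system_matrix` and `dA_dp`, and the vectors `B` and `C` are all hypothetical stand-ins for a real electromagnetic solve. The "simulation" solves A(p) x = b, the objective is J = c · x, and the gradient with respect to every parameter follows from one forward solve plus one adjoint solve of Aᵀ λ = c, using dJ/dp_i = −λ · (∂A/∂p_i) x.

```python
# Toy adjoint-method sketch (illustrative assumption, not Tidy3D code).

def solve2(A, b):
    """Solve a 2x2 linear system A x = b with Cramer's rule."""
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    return [
        (b[0] * A[1][1] - A[0][1] * b[1]) / det,
        (A[0][0] * b[1] - b[0] * A[1][0]) / det,
    ]

def system_matrix(p):
    """Hypothetical system matrix depending on two design parameters."""
    return [[2.0 + p[0], p[1]], [p[1], 3.0 + p[0] * p[1]]]

def dA_dp(p):
    """Analytical derivatives of system_matrix with respect to p[0] and p[1]."""
    return [
        [[1.0, 0.0], [0.0, p[1]]],
        [[0.0, 1.0], [1.0, p[0]]],
    ]

B = [1.0, 2.0]   # hypothetical source term
C = [1.0, -1.0]  # hypothetical weights defining the objective J = C . x

def objective(p):
    x = solve2(system_matrix(p), B)
    return C[0] * x[0] + C[1] * x[1]

def adjoint_gradient(p):
    """Gradient of the objective from one forward and one adjoint solve."""
    A = system_matrix(p)
    x = solve2(A, B)                                  # forward solve
    A_t = [[A[0][0], A[1][0]], [A[0][1], A[1][1]]]    # transpose of A
    lam = solve2(A_t, C)                              # single adjoint solve
    gradient = []
    for dA in dA_dp(p):
        dA_x = [
            dA[0][0] * x[0] + dA[0][1] * x[1],
            dA[1][0] * x[0] + dA[1][1] * x[1],
        ]
        gradient.append(-(lam[0] * dA_x[0] + lam[1] * dA_x[1]))
    return gradient

def finite_difference_gradient(p, h=1e-6):
    """Reference gradient; needs two extra solves per parameter."""
    gradient = []
    for i in range(len(p)):
        up = list(p)
        up[i] += h
        down = list(p)
        down[i] -= h
        gradient.append((objective(up) - objective(down)) / (2 * h))
    return gradient
```

The adjoint version performs two solves regardless of the number of parameters, while the finite-difference reference needs two solves per parameter, which is why the adjoint approach scales to designs with very many degrees of freedom.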

How does TidyGrad work?

TidyGrad uses automatic differentiation (AD) to make the inverse design process as simple as possible. The TidyGrad simulation code is integrated directly within common platforms for training machine learning models. TidyGrad tells these platforms how to compute derivatives for FDTD simulations using the adjoint method, and the AD tools handle the rest. As a result, one can write an objective function in regular Python code involving one or many Tidy3D simulations and arbitrary pre- and post-processing. Gradients of this function are then computed efficiently using the adjoint method, with just a single line of code and without deriving any derivatives by hand.

The simulations are backed by cloud-based GPU solvers, making them fast and enabling large scale 3D inverse design problems.

Why is it better than the other similar tools?

Other products require their users to use one of a select few supported operations. This is extremely limiting when designing objectives that go beyond the most basic operations. Because TidyGrad leverages automatic differentiation to handle everything around the simulation, native Python and NumPy code are all differentiable, making extremely flexible and custom metrics possible. We support differentiation with respect to most of our simulation specifications and data outputs, enabling tons of possibilities.

TidyGrad’s adjoint code is general, well tested, and backed by massively parallel GPU solvers, making it extremely fast. The front-end code also interfaces seamlessly with Python packages for machine learning, scientific computing, and visualization.

How to use TidyGrad?

All the user needs to do is write their objective as a regular Python function, metric = f(params), that takes the design parameters and returns the metric as a single number. A single line of code then transforms this function into one that returns the gradient using the adjoint method: gradient = grad(f)(params). The resulting gradient can be plugged into an open-source or custom optimizer of your choice. See the example for inspiration.
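The calling pattern above can be sketched in plain Python. Everything in this block is an illustrative assumption: the `grad` helper is a finite-difference stand-in written here so the example runs without any dependencies (it is not the adjoint-based `grad` an AD library would provide), the objective `f` is a made-up analytic function rather than a Tidy3D simulation, and `optimize` is a bare-bones gradient-ascent loop standing in for the optimizer of your choice.

```python
def grad(f, step=1e-6):
    """Stand-in for an AD library's `grad` (assumption for illustration).

    Returns a function that evaluates df/dparams by central finite differences,
    which costs two evaluations of f per parameter. An adjoint-based gradient
    avoids that per-parameter cost.
    """
    def gradient(params):
        result = []
        for i in range(len(params)):
            up = list(params)
            up[i] += step
            down = list(params)
            down[i] -= step
            result.append((f(up) - f(down)) / (2 * step))
        return result
    return gradient

def f(params):
    """Hypothetical objective returning a single number.

    In a real workflow this function would set up and run one or more
    simulations and post-process the results into the metric.
    """
    width, gap = params
    return -((width - 0.45) ** 2) - 2.0 * (gap - 0.20) ** 2

def optimize(f, params, learning_rate=0.1, num_steps=200):
    """Plain gradient ascent: repeatedly step along the gradient of f."""
    df = grad(f)
    for _ in range(num_steps):
        g = df(params)
        params = [p + learning_rate * g_i for p, g_i in zip(params, g)]
    return params

if __name__ == "__main__":
    initial = [0.30, 0.30]
    best = optimize(f, initial)
    print("metric before:", f(initial), "metric after:", f(best))
```

The point of the sketch is the shape of the code: the objective is an ordinary function, `grad(f)` is a one-line transformation, and the optimizer only ever sees parameters and gradients.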

How to quickly get started?

Whether you are a GUI user or a Python enthusiast, we recommend you start from this document and then go through a couple of examples after that. If you want to focus on the GUI, we have prepared an example for you.

If you want a refresher on the concepts first, the inverse design course by Tyler and Shanhui is a useful tutorial. Tyler’s presentation is a useful introduction from fundamental physics to practical applications.

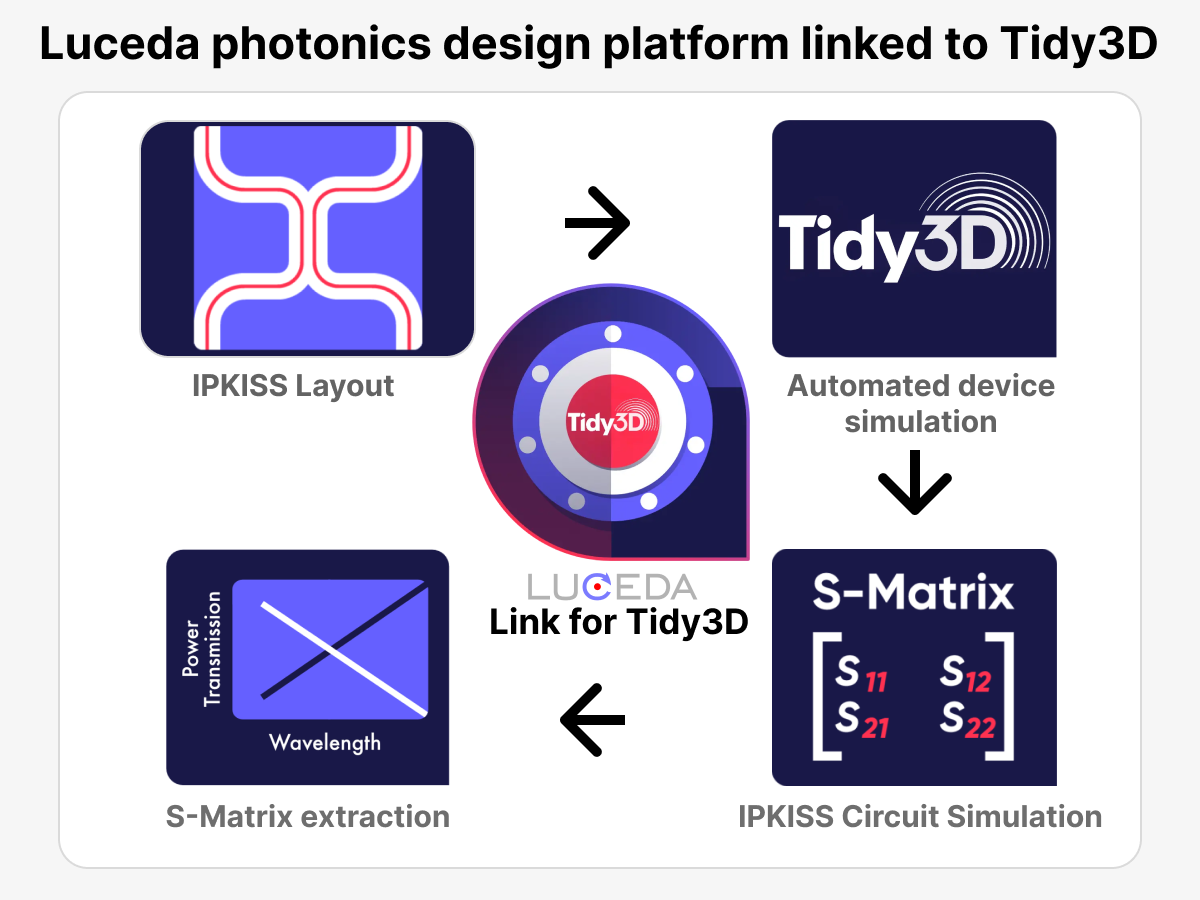

Luceda Photonics, a leading provider of photonic design automation solutions, announced a new integration with Tidy3D, a cutting-edge electromagnetic simulation solver from Flexcompute. This collaboration marks a significant advancement in photonic device design, offering users unprecedented efficiency and accuracy in their workflow.

The integration between Luceda Photonics’ powerful photonic integrated circuit (PIC) design platform and Tidy3D’s state-of-the-art FDTD simulation engine allows designers to streamline the process of creating, simulating, and optimizing photonic devices, from start to finish, on a single platform.

The key benefits of Luceda Photonics’ integration with Tidy3D include:

- Seamless Workflow: Users can now easily transfer designs from Luceda’s photonic integrated circuit (PIC) design platform to Tidy3D for fast and efficient electromagnetic simulations.

- Advanced Computational Power: Tidy3D’s high-performance computing capabilities, powered by hardware acceleration, ensure that even complex photonic devices are simulated with unparalleled speed and accuracy.

- Comprehensive Design Tools: The combined platforms provide a complete toolkit for photonics engineers, enabling them to perform both the design and simulation stages without switching between different software environments.

- Enhanced Collaboration: The integration supports collaboration between design teams, enabling them to efficiently iterate on PIC designs and simulations, leading to faster innovation and reduced time-to-market.

How it works:

The new integration lets users design PICs on Luceda’s photonic platform and run electromagnetic simulations in Tidy3D. Users can visualize simulation results and adjust their designs with minimal hassle, ensuring a smooth and responsive design process. Additionally, users benefit from Tidy3D’s unique cloud-based hardware acceleration, making large simulations more accessible than ever.

Luceda Photonics and Flexcompute are committed to empowering engineers and researchers with the most advanced tools in photonics design. By bridging the gap between design and simulation, this partnership promises to transform how photonic devices are created and optimized across industries, including telecommunications, quantum computing, and sensing.

For more information on the Luceda Photonics and Tidy3D integration, visit https://www.lucedaphotonics.com/link-for-tidy3d.

FONEX Data Systems Inc., a leader in telecommunications innovation, is at the forefront of providing cutting-edge network infrastructure solutions across Canada and Europe. With their newly established R&D section, FONEX is pushing the boundaries of integrated external cavity lasers, a technology with far-reaching applications in telecommunications and various other areas.

FONEX’s R&D team faced a significant challenge when investigating integrated optical components, particularly micro-ring resonators. The complexity of these devices demanded powerful simulation tools that could provide accurate results without consuming excessive time and resources. Tidy3D has helped transform FONEX’s research and development process. The R&D team quickly realized that Tidy3D offered a unique combination of speed, accuracy, and timely technical support that set it apart from other simulation tools on the market.

“Tidy3D has significantly improved our workflow with its exceptionally fast simulation processing times and reliable support team. It is an invaluable tool in our research and development of integrated lasers,” – Dr. Mohsen Rezaei, R&D engineer at FONEX.

The primary advantages of Tidy3D are its remarkable simulation speed and responsive support team. The speed allows FONEX to iterate designs rapidly, explore more parameters, and accelerate their innovation cycle. The support team ensures that any issues encountered during the simulation process are quickly addressed and resolved, which has proven crucial in maintaining the momentum of FONEX’s research efforts, minimizing downtime, and maximizing productivity.

As FONEX continues to innovate, Tidy3D evolves alongside them, continuously enhancing its features and capabilities. This partnership exemplifies how advanced simulation tools can drive innovation in high-tech industries, helping translate visionary ideas into practical, patentable technologies.

As the telecommunications landscape continues to evolve, with increasing demands for faster, more efficient networks and more sensitive detection systems, the work being done at FONEX promises to play a crucial role. Their advancements in technology have the potential to unlock new possibilities in network infrastructure, paving the way for the next generation of telecommunications technology.

In the rapidly evolving field of proteomics, Pumpkinseed is making waves with its novel approach to protein sequencing. By leveraging advanced silicon photonic devices, this innovative biotechnology startup is working towards providing light-speed reads of the proteome, potentially revolutionizing our understanding of cellular processes and opening new avenues for therapeutic development. Pumpkinseed’s ambitious goal is to develop an optics-based approach to protein sequencing that can scale to the full complexity of the complete proteome. Unlike traditional methods that rely on complex biochemical probes, Pumpkinseed’s technology utilizes silicon photonic devices to sensitively extract the vibrational “fingerprint” of protein molecules without the need for any biochemical probes or labels.

Company logo (left) and a schematic illustration of Pumpkinseed’s advanced protein sequencing technology.

“Proteins are critical to a wide array of cellular processes - from cell signaling to immune responses, nutrient transport, growth, and metabolic regulation,” explains Dr. Jack Hu, co-founder of Pumpkinseed. “Our improved understanding of the presence and sequence of proteins could inform new disease pathways and lead to new therapeutics.”

At the heart of Pumpkinseed’s innovative approach lies the design and optimization of nanophotonic sensor chips. This is where Tidy3D plays a crucial role. The Pumpkinseed team utilizes Tidy3D to enhance their sensor designs, enabling more efficient extraction of optical signals from protein molecules of interest and allowing for the physical miniaturization of devices to pack millions of sensors onto a single chip.

“The primary advantage of Tidy3D is the incredible speed of the simulations,” Dr. Hu emphasizes. In the fast-paced environment of a biotechnology startup, where projects across multiple disciplines need to converge simultaneously, Tidy3D’s speed is invaluable. It allows the team to rapidly iterate on chip designs, improving device performance while saving time and costs on actual semiconductor manufacturing. Moreover, Tidy3D’s Python API has proven to be a game-changer for Pumpkinseed’s multidisciplinary team. It enables them to quickly design simulation experiments and analyze and visualize results. This has led to a significant speed up of communication of results and findings across the multi-disciplinary team and enabled improved R&D progress across the entire team.

The impact of Tidy3D on Pumpkinseed’s work extends beyond just simulation speed. By facilitating rapid design iterations and enabling efficient communication of results, Tidy3D has become an integral part of Pumpkinseed’s R&D process. It has allowed the team to bridge the gap between nanophotonic design, optical and fluidic instrumentation, and chemistry – all crucial elements in their innovative approach to protein sequencing. The partnership between Pumpkinseed and Tidy3D exemplifies how advanced simulation tools can drive innovation in biotechnology, potentially leading to breakthroughs that could transform our understanding of cellular processes and pave the way for new therapeutic approaches.

At the forefront of nanophotonic innovation, the Quantum Nano-photonics group at the University of Arizona is making significant strides in developing state-of-the-art technologies for the future. Led by Prof. Mohamed ElKabbash, Assistant Professor at the Wyant College of Optical Sciences, the group is tackling complex challenges in quantum optics, industrial photonics, and optoelectronics.

Prof. Mohamed ElKabbash’s research lab at the University of Arizona

Professor Mohamed ElKabbash (left) and PhD student Pritam Bangal (right).

Pritam Bangal, a PhD student in the group, kindly shares insights into their groundbreaking work and how Tidy3D is accelerating their research efforts. “Our group aims to pioneer cutting-edge nanophotonic technologies with transformative applications in quantum optics, industrial photonics, and optoelectronics,” Pritam explains. Their ambitious goals include advancing quantum computing and communication through innovative photonic devices, enhancing industrial optical systems, and developing next-generation optoelectronic components.

The group’s research spans the entire spectrum of innovation, from design and simulation to fabrication and application. Their projects are as diverse as they are impactful. Pritam has been using Tidy3D for over a year, working on various research projects including guided mode resonance structures and designing optical nano-antennas to enhance the spontaneous emission rate from quantum emitters for potential use in quantum computing.

More recently, the group’s focus has shifted towards UV and extreme UV (EUV) nanophotonics. Pritam is working on designing optical elements in the EUV (13.5 nm) range for potential use in EUV lithography. He has successfully designed metalenses with improved efficiency in both the visible and UV (50 nm) ranges. The impact of Tidy3D on the group’s work is significant. As Pritam notes, “Tidy3D easily manages to set-up and run complex simulations in a very short time which not only makes rapid progress in our work but also inspires us to constantly engage ourselves in innovation.”

The software’s efficiency and ease of use have accelerated the research cycle, allowing the team to focus more on analysis and innovation rather than troubleshooting. This has been particularly beneficial for new students in the group, encouraging them to learn and use FDTD tools in their research.

As the Quantum Nano-photonics group continues to push the boundaries of light manipulation at the nanoscale, Tidy3D remains a cornerstone of their research toolkit. The partnership between this innovative research group and Tidy3D exemplifies how advanced simulation tools can drive progress in cutting-edge scientific fields, potentially leading to technological breakthroughs that revolutionize industries and enhance our scientific understanding.

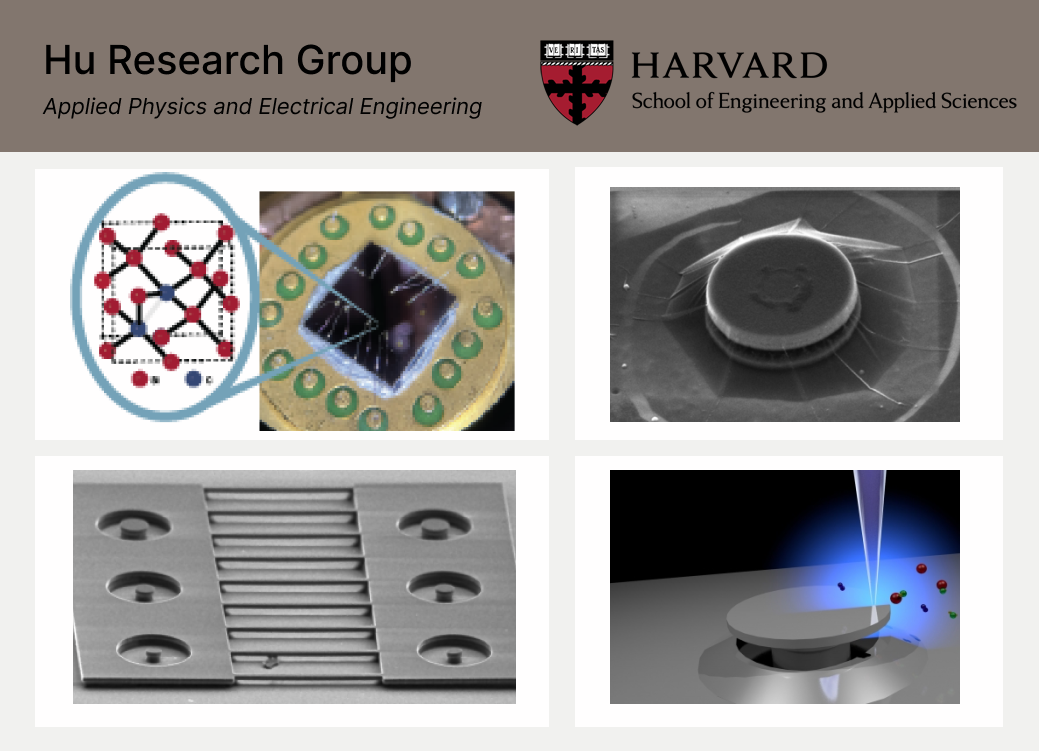

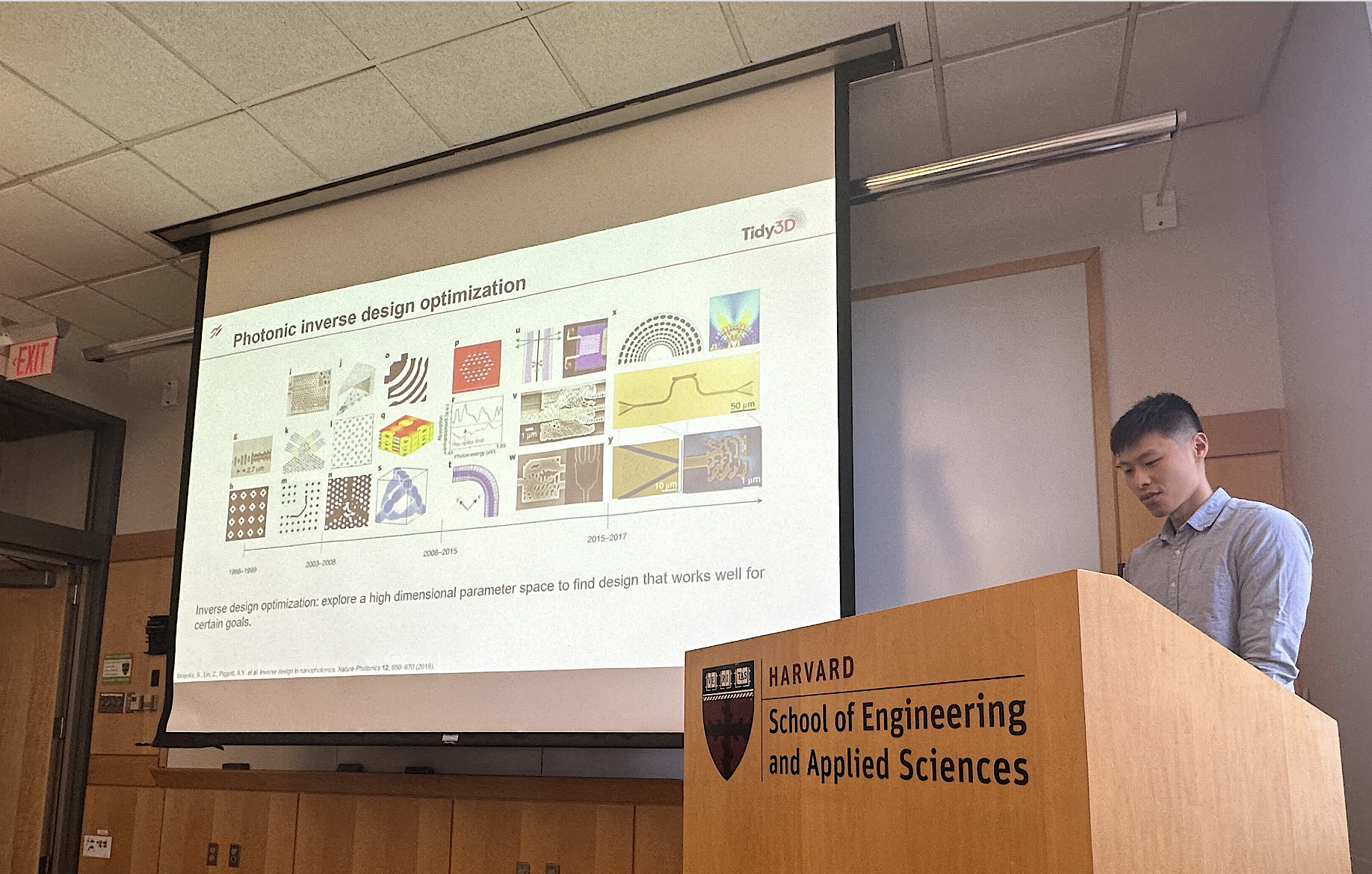

Professor Evelyn Hu’s research group from the School of Engineering and Applied Sciences at Harvard University is at the forefront of nanoscale optical and electronic research. The group is pushing the boundaries of light-matter interaction through innovative designs and nanofabrication techniques in 4H-silicon carbide and silicon. Their groundbreaking work in cavity-defect interactions and electrical control of defects has opened new avenues for probing fundamental material physics and developing high-performance devices for both classical and quantum applications.

Prof. Evelyn Hu’s research on Silicon Color Centers (top left), Silicon Carbide and Diamond (top right), GaN (bottom left), and Two-Dimensional Materials (bottom right)

Prof. Evelyn Hu’s group at a dinner gathering

The Hu group is an early adopter of Tidy3D. “Tidy3D’s integration with Python made learning much easier for me!” says Amberly, a graduate student in the group. “The documentation was clear, and the library of examples allowed me to learn at my own pace.” This accessibility has been particularly valuable for team members new to FDTD simulations, lowering the entry barrier to advanced nanophotonic design.

The group has leveraged Tidy3D for various projects, including the simulation of a novel photonic crystal cavity design in 4H-SiC. This new design promises more reliable and facile fabrication, potentially advancing the field of nanophotonics.

Chaoshen, another member of the research team, highlights the software’s versatility: “The Python API and the fast computation speed enabled by the Tidy3D server makes it easier for me to run parameter sweeping and start simulating ideas without the constraint of server time.” This capability has significantly enhanced the group’s ability to explore and optimize complex designs.

The batch sweep feature has proven particularly valuable in the optimization of photonic structures. Chang, a graduate student focusing on structure optimization, notes, “The batch sweep feature is very helpful in optimizing our photonic structures.”

Beyond the software’s technical capabilities, the Hu research group found the support from the Flexcompute team to be exceptional. “The team at Flexcompute was super helpful and very responsive to any questions I had,” Amberly shares. “I was even able to send them snippets of my code for them to look through, and they were fast at helping me debug and better understand what was going on.”

As the Hu research group continues to push the boundaries of nanoscale light-matter interaction, Tidy3D remains an invaluable tool in their research arsenal. The combination of computational power, user-friendly interface, and responsive support has enabled the team to accelerate their simulations, explore more complex designs, and ultimately, advance the field of nanophotonics. The group’s experience with Tidy3D exemplifies how advanced simulation tools can drive innovation in cutting-edge research, helping translate visionary ideas into groundbreaking discoveries.

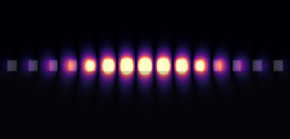

Pixel Photonics is a pioneering deep-tech start-up founded in 2021 as a spin-off of the University of Münster and based in Münster, Germany. The company has developed a unique approach for integrating superconducting nanowire single-photon detectors (SNSPDs) on a chip waveguide. Pixel Photonics combines the superior features of SNSPDs with the versatility of an integrated photonic platform to deliver highly parallelized, efficient, and ultra-fast single-photon detection.

Photographs of the photonic chip (left) and SNSPD products (right) of Pixel Photonics

While SNSPDs are inherently threshold detectors and insensitive to the number of photons in a detection event, waveguide integration and parallelization enable using SNSPDs for photon counting, thus paving the way for high-performance and scalable photonic quantum computing.

One of the biggest challenges of Pixel Photonics’ unique approach to SNSPDs is efficient fiber-to-chip coupling from an external source to the on-chip platform, the design of which requires large-scale nanophotonic computation.

“Tidy3D helps us to perform these computations in a matter of minutes,” says Dr. Marek Kozon, R&D Engineer for Computational Optics at Pixel Photonics.

Using Tidy3D, Dr. Kozon and his team have designed couplers that exceed the previous generation’s efficiency while allowing for a smoother design process.

“I especially value the flexibility of Tidy3D, which is seamlessly integrated within the Python environment. This means that we are not dependent only on currently implemented software features but can extend the functionality ourselves to whatever suits our needs. I also like that all the data files are transparent, and there are no hidden or encoded files, which, again, makes it very smooth to work with Tidy3D.”

Photonic design engineers like Dr. Kozon are revolutionizing photonic industries. We at Flexcompute proudly provide efficient tools to their excellent hands. At the same time, we help guide our customers through their simulation journey by providing complementary and timely technical support. Dr. Kozon says, “I have been delighted with the approachability and knowledge of the customer service.”

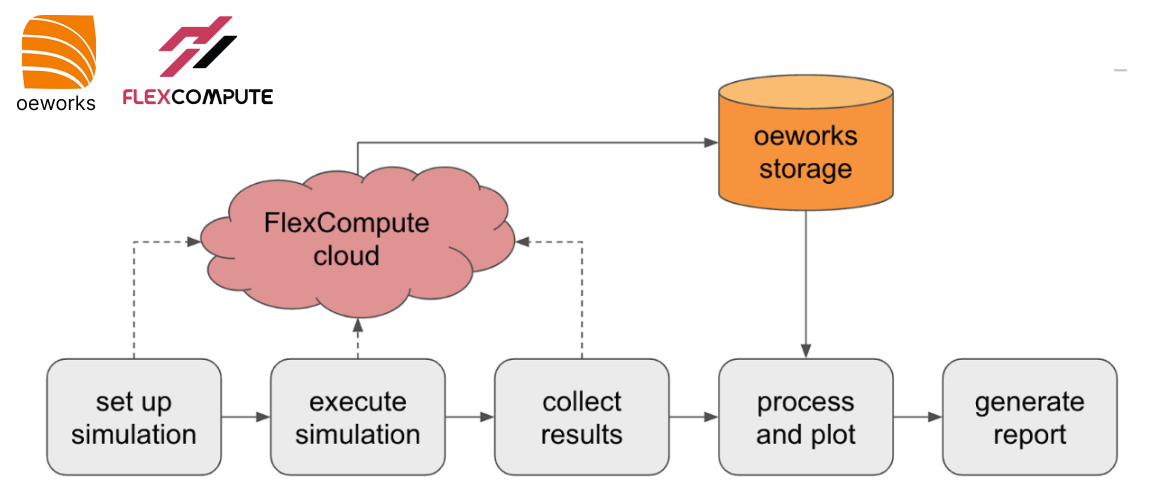

Who is oeworks

At oeworks we create solutions that empower technology innovators to rapidly advance their ideas from concept to prototype to production. We have worked on a large number of technical challenges across several domains and have developed and continue to evolve a toolkit consisting of hardware, software, and processes, including several commercial simulation tools. We structure our work using a technical risk mitigation framework, which, in conjunction with our toolkit, allows us to define and traverse the shortest path between a technical challenge and its solution.

Why we chose Tidy3D

When working on a design project, we typically use a combination of analytical models and simulations to better understand and mitigate technical risk. Once we achieve a fundamental understanding of the design landscape, we want to complete parametric explorations and quickly obtain precise results at specific design points.

Tidy3D has been a game changer for us in a number of ways. The most obvious one is speed. Thanks to its substantial hardware acceleration, Tidy3D enables us to complete parametric simulations on a range and scale that were previously impractical, if possible at all.

A second advantage of Tidy3D is that it allows us to define our simulations as Python code. This enables us to streamline the process of defining simulations, particularly when we have the need to parametrize them. It also allows us to integrate many tools from the rich Python ecosystem, ranging from optimization and simulation orchestration to results storage, processing, and visualization. We have been able to generate code that, using a single command, can set up a number of parametric simulations, execute them, collect and store the results, and then process, interpret, and plot them. In some instances where the reporting requirements were known a priori we have even been able to generate the complete reports in slide format.

Figure 1: Process flow from simulation set up to report generation. The entire process can be executed using a single command.
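As a rough illustration of the single-command pattern described above, the sketch below chains setup, execution, storage, and summary in one entry point. It is not oeworks’ code and does not use the Tidy3D API: `run_simulation` is a hypothetical placeholder that returns a made-up metric, and the parameter names `width` and `gap` are assumptions chosen for the example.

```python
import itertools
import json

def run_simulation(width, gap):
    """Hypothetical placeholder for a real simulation call.

    Returns a made-up metric so the pipeline can be exercised end to end.
    """
    return {"efficiency": 1.0 / (1.0 + (width - 0.5) ** 2 + (gap - 0.2) ** 2)}

def sweep(parameter_grid):
    """Run one simulation per point of the Cartesian parameter grid."""
    names = sorted(parameter_grid)
    records = []
    for values in itertools.product(*(parameter_grid[name] for name in names)):
        point = dict(zip(names, values))
        # Store the inputs next to the outputs so each record is self-describing.
        records.append({**point, **run_simulation(**point)})
    return records

def summarize(records, metric="efficiency"):
    """Reduce the raw records to the figures a report would need."""
    best = max(records, key=lambda record: record[metric])
    return {"num_points": len(records), "best": best}

def main(parameter_grid, results_path=None):
    """Single entry point: sweep, optionally store results as JSON, summarize."""
    records = sweep(parameter_grid)
    if results_path is not None:
        with open(results_path, "w") as handle:
            json.dump(records, handle, indent=2)
    return summarize(records)

if __name__ == "__main__":
    grid = {"width": [0.4, 0.5, 0.6], "gap": [0.1, 0.2]}
    print(main(grid))
```

Because the whole flow is ordinary code, it can be parametrized, versioned, and rerun later with the same inputs.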

Another advantage of simulation-as-code is that it can easily be integrated into our revision control system. Given the large number of projects we execute and the often rapidly evolving parameter spaces we are required to explore, this gives us a great advantage in tracking the evolution of our simulations and designs. Furthermore, it enables reproducibility in the event that we need to revisit a result or resume work that has been paused for a substantial period of time.

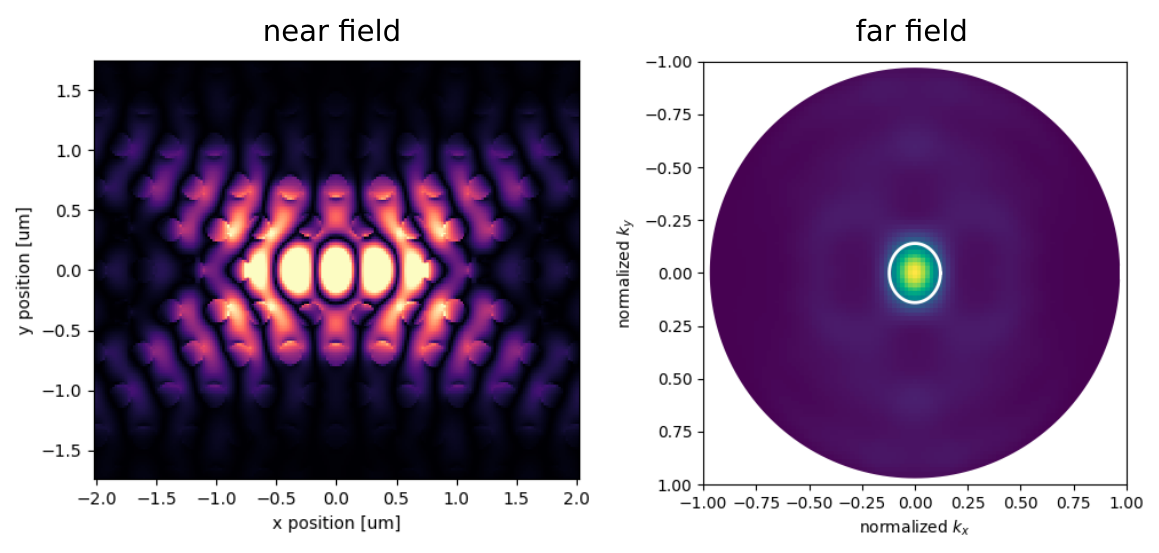

Figure 2: Far field emission patterns of micro-LED structures for different polarizations.

We also had a great experience with Tidy3D from an IT infrastructure point of view. Simulation setup is very lightweight and can be worked on any of the standard development machines we routinely use. Simulation execution runs on Flexcompute’s cloud instances, freeing us from the requirement to acquire, operate, and maintain high-end workstations. In addition to that, license setup is practically trivial, freeing precious IT resources for an operation of our size.

Figure 3: Post-processing of light extraction simulation results. (a) Far-field radiosity vs. elevation angle; (b) Light extraction efficiency vs. a design parameter for two different configurations.

Finally, we have been delighted with the support we have received from the Flexcompute team. All our requests for support have been addressed promptly, and without fail we were pointed in the right direction, minimizing downtime. We have also interacted with the development team and have found them to be very responsive to our requests for enhancements and new features.

Conclusion

Since we adopted Tidy3D as our main FDTD simulation tool, we have used it to complete many projects, ranging from micro-LED light extraction optimization to the modeling of silicon photonic components. Our clients have been very satisfied with our ability to quickly explore extended design spaces and simulate large structures with quick turnaround times. Internally, we have been able to streamline our workflows for simulation setup, execution, and result processing and visualization, leading to drastically improved efficiency.

Our engagement with the Flexcompute support and development teams has been extremely smooth and productive; we have the highest confidence in their ability to support us in our efforts to accelerate our clients’ technology release to market.

We are continuously improving our understanding of Tidy3D’s evolving capabilities and are actively seeking projects that can benefit from streamlined FDTD simulations in the context of a thorough understanding of the corresponding design space. We look forward to discussing how our processes and software tools, combined with the power of Tidy3D, can help mitigate your technical risk, and allow you to reach your objectives faster.

We at Flexcompute are thrilled to have collaborated with NVIDIA to push the boundaries of scientific computing! Together, we have explored the incredible potential of GPU-accelerated photonic simulations, leveraging the latest hardware advancements to solve Maxwell’s equations at unprecedented speed and scale.

Our collaboration showcases how NVIDIA’s cutting-edge GPUs not only power AI but also revolutionize how we approach complex physical simulations. The possibilities are endless, from reducing simulation times by orders of magnitude to enabling the design and optimization of next-generation photonic devices!

Why this matters:

- Speed: simulations that once took hours can now be completed in minutes

- Scale: we can simulate larger and more complex devices, accelerating innovation in photonics

- Accuracy: advanced simulations allow for more precise designs, pushing the limits of what’s possible

See the publication here.

This collaboration is just the beginning. As we continue to explore and develop these technologies, we are excited about the future of scientific computing and the breakthroughs that lie ahead.

Thanks to the amazing teams at Flexcompute and NVIDIA for making this possible. Here’s to driving innovation forward!

Renowned for its expertise in diamond photonics, the Lončar Lab at Harvard University has expanded its focus to lithium niobate in recent years, pioneering new avenues in the fabrication of photonic structures. The lab has developed sophisticated in-house fabrication technologies that can be used to quickly test the performance of designed devices. However, designing lithium niobate photonics often requires exploring a large parameter space, which means fabricating and testing too many chips. A reliable numerical simulation tool is therefore critical to the design process. Unfortunately, traditional 3D full-wave simulations are very slow. Tony Song, a graduate student in the Lončar Lab, and his teammates often relied on their efficient fabrication techniques and excellent testing capabilities to tune design parameters rather than running numerical simulations. As another workaround, Tony also used to run highly simplified simulations to model devices, which were often not sufficiently accurate.

Recently, Tony and his teammates discovered Tidy3D. With the help of Tidy3D’s ultrafast simulations, they have already had exciting results that will be published in top scientific journals. Currently, Tony and his colleagues mainly use Tidy3D to design diamond photonic crystal cavities and passive lithium niobate components such as directional and grating couplers. In addition, they have also started to use inverse design to optimize some passive components.

Tony highly praised the well-designed user interface of Tidy3D compared to other simulation tools he has tried previously. The intuitive Python API of Tidy3D integrates effortlessly into his existing workflow, and the capabilities for importing and exporting GDS files facilitate a smooth transition between simulation and actual cleanroom fabrication. Moreover, Tony has commended the technical support team at Tidy3D for their prompt and effective assistance. Tony ran a broadband simulation in one specific case, but the result obtained had a strange discontinuity in the lower end of the wavelength range. Puzzled by the result, Tony contacted Tom and Emerson, the Senior Photonics Engineers at Flexcompute. They did a test together to identify the issue. “They helped me think a different way about the problem. Looking back, you might feel the solution was rather obvious, but it would have taken me a long time to figure that out myself. It’s always helpful to reach out to [Flexcompute’s] experts and get their opinion on the matter,” said Tony.

Looking ahead, the long-term goal of Tony and his teammates is to explore active components that utilize the large electro-optic and nonlinear coefficients of lithium niobate. This vision aligns well with Tidy3D’s development roadmap, as the Tidy3D team continues to develop more advanced nonlinearity features and multi-physics solvers. These additions enable more rigorous simulations in the thermo-optic and electro-optic domains, which are essential for the work Tony and his lab aspire to undertake.

The success story of Tidy3D in the Lončar Lab underscores the software’s pivotal role in advancing nanophotonics research. From its user-friendly interface and fast simulation speed to its advanced features, such as nonlinearity and inverse design plugins, Tidy3D has proven to be a transformative tool. As the Lončar Lab continues to explore new frontiers in photonics, Tidy3D stands as an essential companion, empowering researchers to unlock novel insights and accelerate the pace of discovery in the ever-evolving field of nanophotonics.

Flexcompute is proud to share that the National Academy of Engineering (NAE) has elected our co-founder Dr. Shanhui Fan to its 2024 Class for showing that “the coldness of space” relative to Earth can be a major energy source for humankind.

Dr. Shanhui Fan also serves as Joseph and Hon Mai Goodman Professor of the School of Engineering, Electrical Engineering at Stanford University in Stanford, California. His election to the NAE follows his receipt of Optica’s R. W. Wood Prize in 2022.

He is also the second member from Flexcompute to join the ranks of the NAE, following in the footsteps of Dr. Philippe Spalart. Flexcompute is honored to support excellence and leadership in engineering.

With this news, Flexcompute’s highly recognized team continues to make waves in the simulation technology space through its award-winning research and its development of cutting-edge electrodynamics and Computational Fluid Dynamics (CFD) solvers.

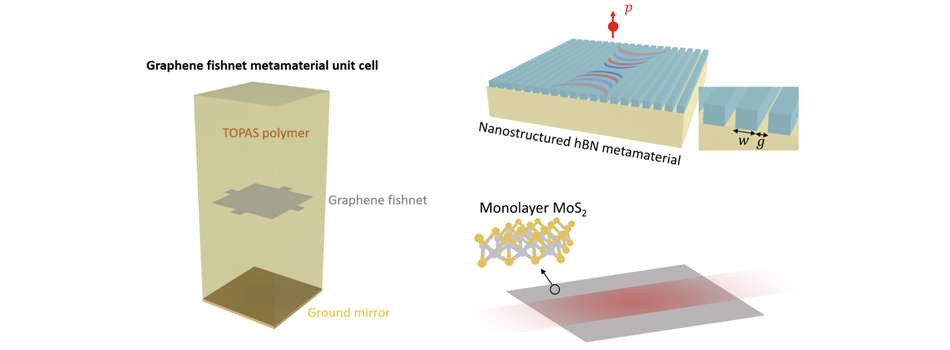

The Finite-Difference Time-Domain (FDTD) method is a computational modeling technique widely used in the field of photonics. FDTD has significantly changed the way researchers study and predict the behavior of electromagnetic waves. This review article delves into the recent applications of FDTD simulations in cutting-edge photonics research and engineering. Specifically, it showcases examples of the use of FDTD simulations in areas such as novel 2D materials (such as graphene and transition-metal dichalcogenides), quantum technologies (like quantum dots and single photon light extraction), and consumer-grade electronics (such as CMOS image sensors).

2D materials in photonics

The emergence of 2D materials represents a significant breakthrough. These ultra-thin materials, often only a few atoms thick, demonstrate unique electronic, optical, and mechanical properties, markedly different from their bulk forms. Graphene, a monolayer of carbon atoms in a honeycomb lattice, exemplifies this with its exceptional conductivity and transparency. Beyond graphene, 2D materials like transition-metal dichalcogenides (TMDs), black phosphorus, and hexagonal boron nitride (hBN) have been identified, each with distinctive optical properties. These materials have facilitated novel applications in photonics, including ultrafast photodetectors, flexible optoelectronics, advanced photovoltaic cells, and light-emitting diodes (LEDs). The nanoscale manipulation of light using these 2D materials paves the way for innovative developments in communication technologies, energy harvesting, and quantum computing, marking a transformative phase in photonics.

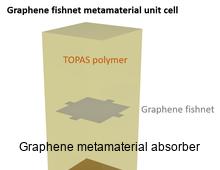

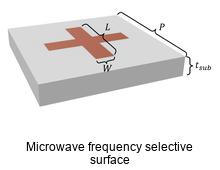

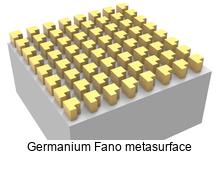

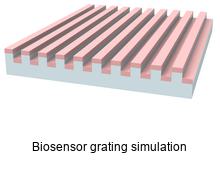

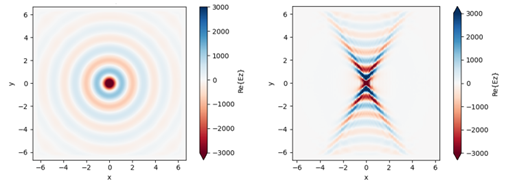

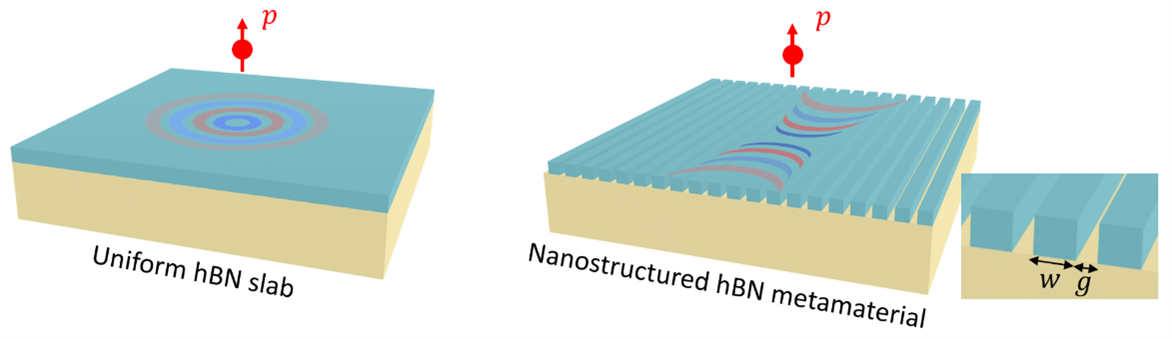

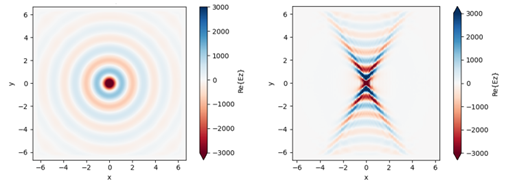

Our example library showcases various photonic devices that are integrated with 2D materials. Some examples include the graphene metamaterial absorber, the nanostructured hBN with hyperbolic polaritons, and the waveguide made of a MoS₂ monolayer.

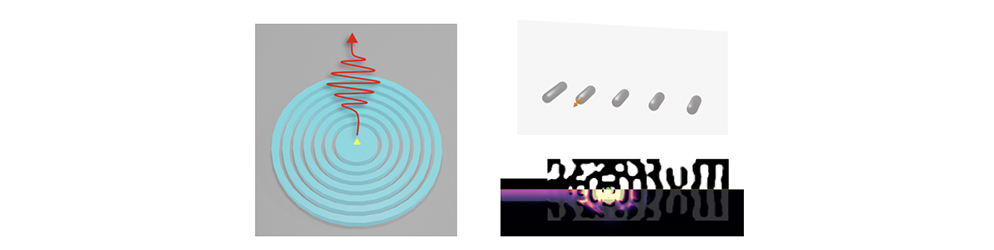

Photonic components for next-gen quantum technology

The rapid advancement of quantum technology is significantly influenced by the development of sophisticated photonic components, essential for driving the next generation of quantum systems. These components, encompassing single-photon sources, detectors, and integrated photonic circuits, form the foundation of quantum communication, computing, and sensing. They enable precise photon manipulation and control, facilitating critical quantum phenomena such as entanglement and superposition. In quantum computing, photonic elements are instrumental in generating and manipulating qubits, the quantum bits that exist in multiple states simultaneously, thereby significantly enhancing computing capabilities. In quantum communication, these components are crucial for establishing highly secure channels through quantum key distribution (QKD). Furthermore, progress in integrated quantum photonics is leading to more compact and robust quantum devices, advancing their practical application in various sectors, including secure data transmission and high-precision measurements. As ongoing research pushes the limits of current technology, photonic components are poised to be key in harnessing the full potential of quantum technology, ushering in a new era of information processing and other applications.

Our example library features several quantum photonics-related examples, including the Bullseye cavity quantum emitter light extractor, the inverse-designed quantum emitter light extractor, and the Plasmonic Yagi-Uda nanoantenna.

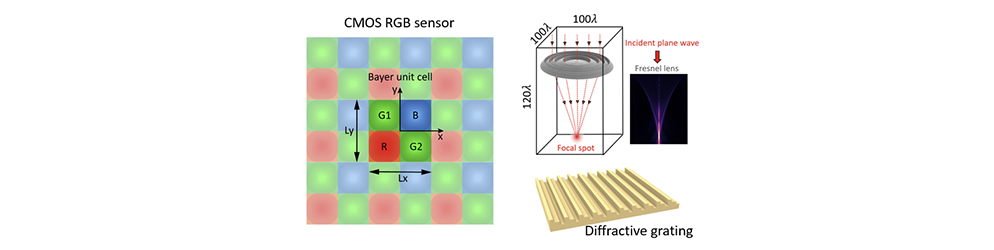

Photonic devices in consumer-grade electronics

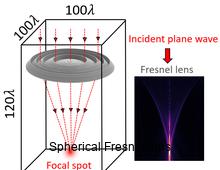

The integration of advanced photonic devices in consumer electronics is markedly enhancing user experiences, as seen in the use of CMOS image sensors in digital imaging as well as Fresnel lenses and diffractive gratings in augmented reality (AR) and virtual reality (VR) systems. CMOS sensors, notable for their low power consumption and high-quality imaging, are crucial in smartphones and digital cameras, capturing high-resolution images with excellent clarity and color fidelity. These sensors utilize photonics to convert light into electronic signals, resulting in the vivid, detailed digital images prevalent in modern devices. In AR and VR, Fresnel lenses, known for their concentric ring structure, offer a lightweight, thin alternative to traditional lenses, ideal for compact headsets. They effectively bend light for immersive visual experiences while reducing headset bulk, enhancing user comfort during prolonged use. CMOS image sensors and Fresnel lenses demonstrate the significant role of photonic technologies in consumer electronics, driving innovation and expanding the possibilities of user interaction in digital environments.

Conclusion

Photonic devices are becoming increasingly important in modern technology, and with them grows the demand for faster and more accurate electromagnetic simulations. FDTD has been a critical tool in advancing the development of photonic devices, but conventional FDTD solvers struggle to keep up with the speed of technological development. Novel hardware-accelerated FDTD tools like Tidy3D are designed to overcome the limitations of traditional FDTD solvers. These tools leverage the power of modern computing hardware to run simulations faster, making it possible to design and optimize photonic devices at a scale and speed that was previously impossible. With the advent of these tools, a new era of photonic device development is emerging, one that promises to deliver faster, more efficient, and more reliable technology.

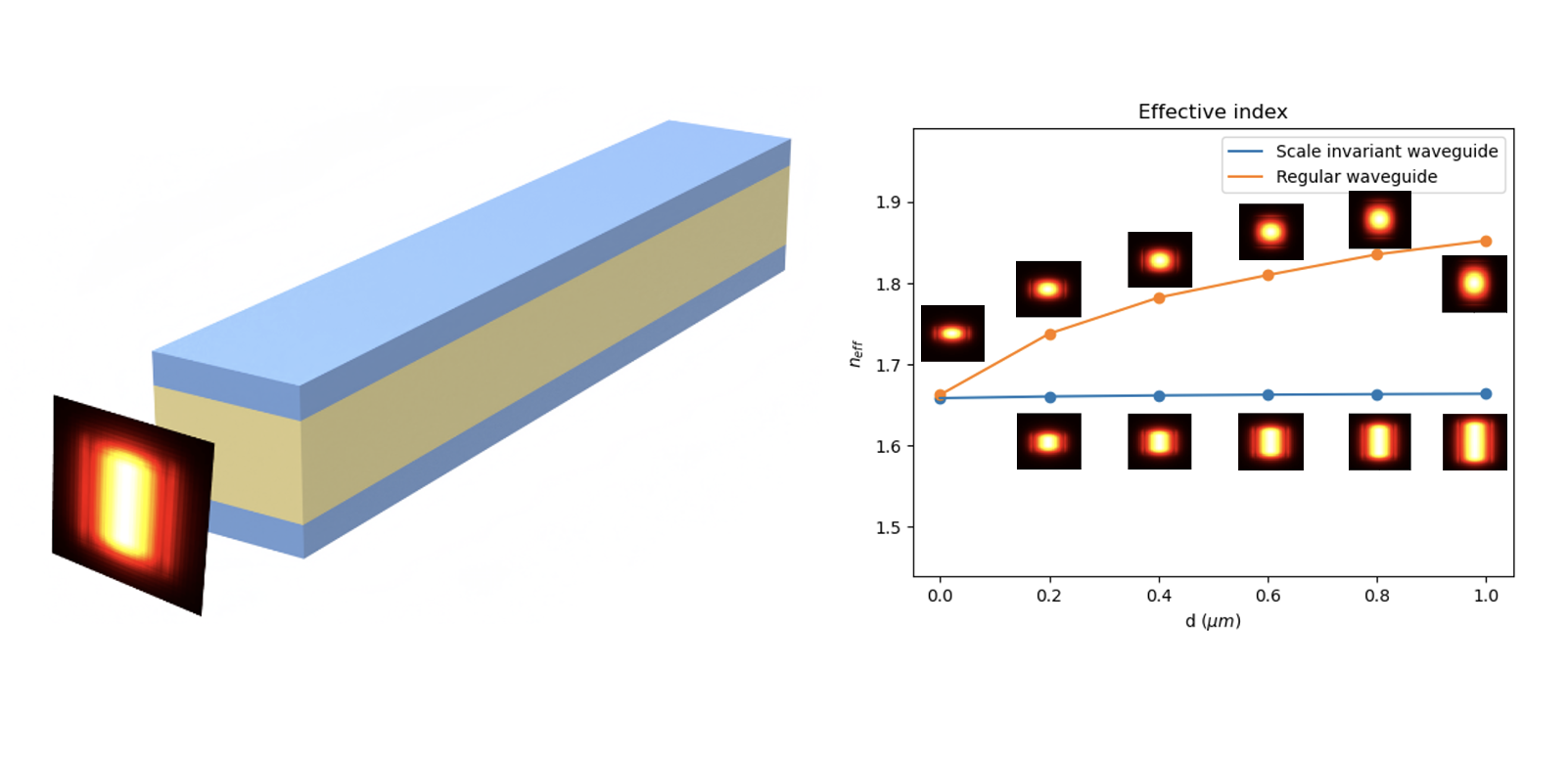

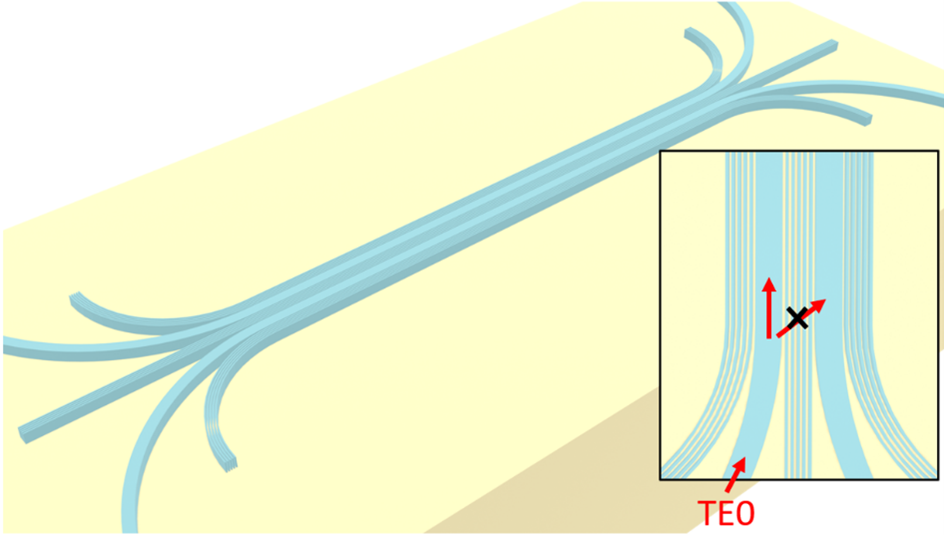

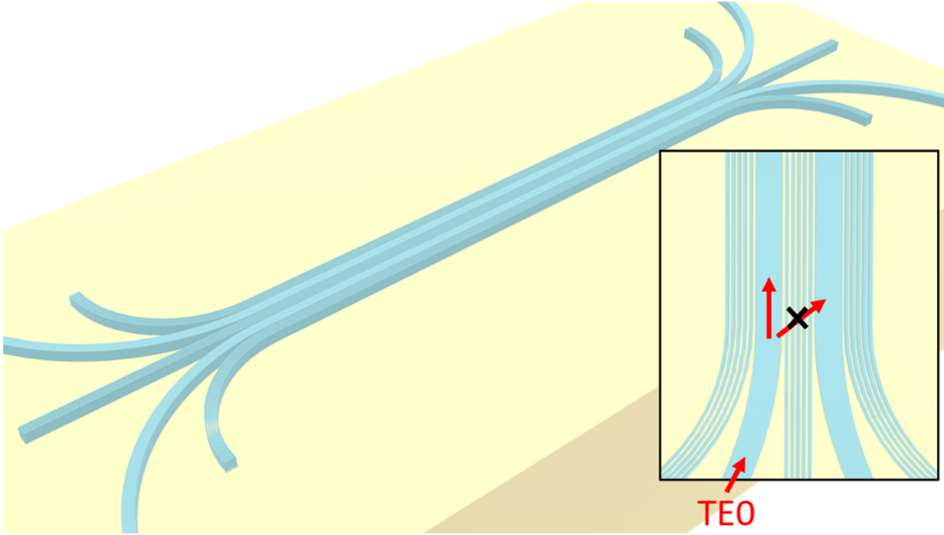

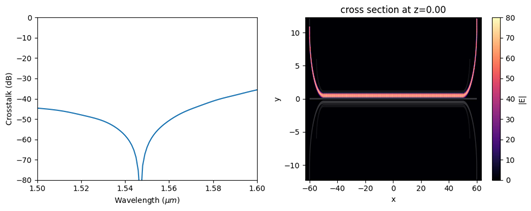

The field of integrated photonic circuits has seen a significant and rapid expansion in recent years, with strong indications of continued acceleration in the near future. This growth is largely fueled by innovative academic research.

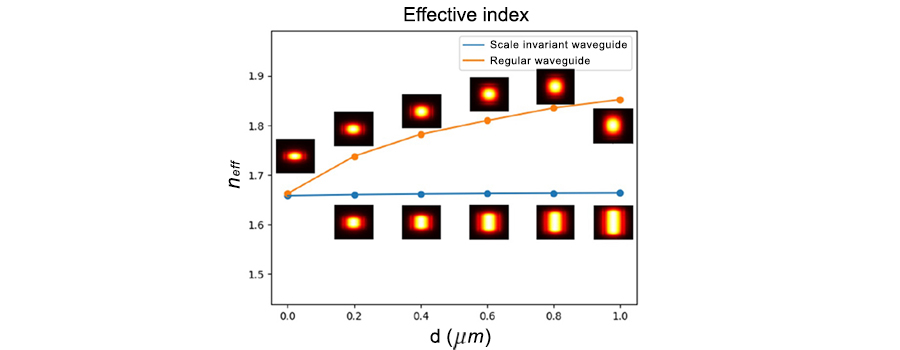

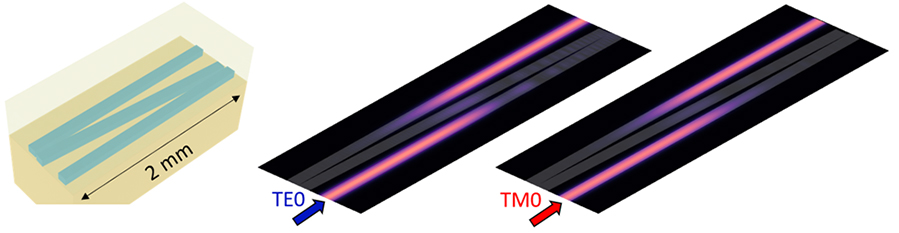

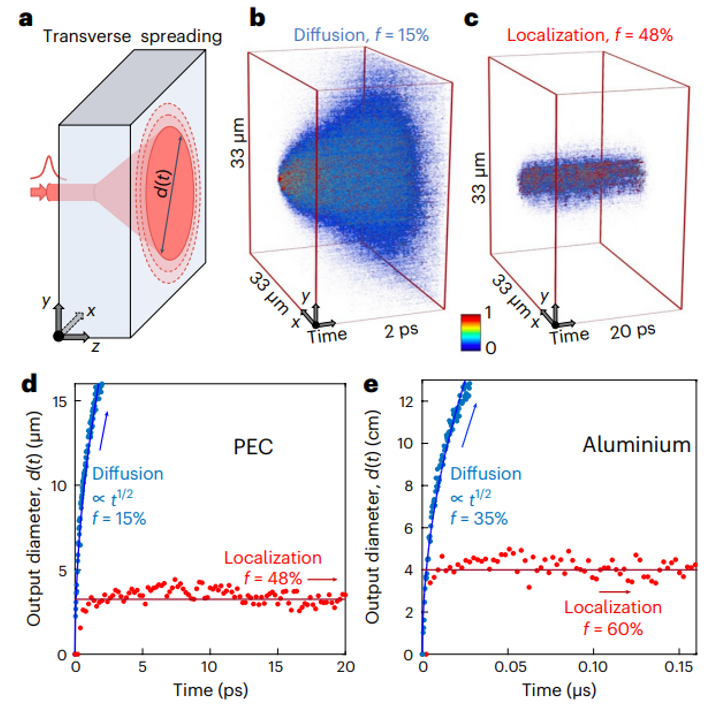

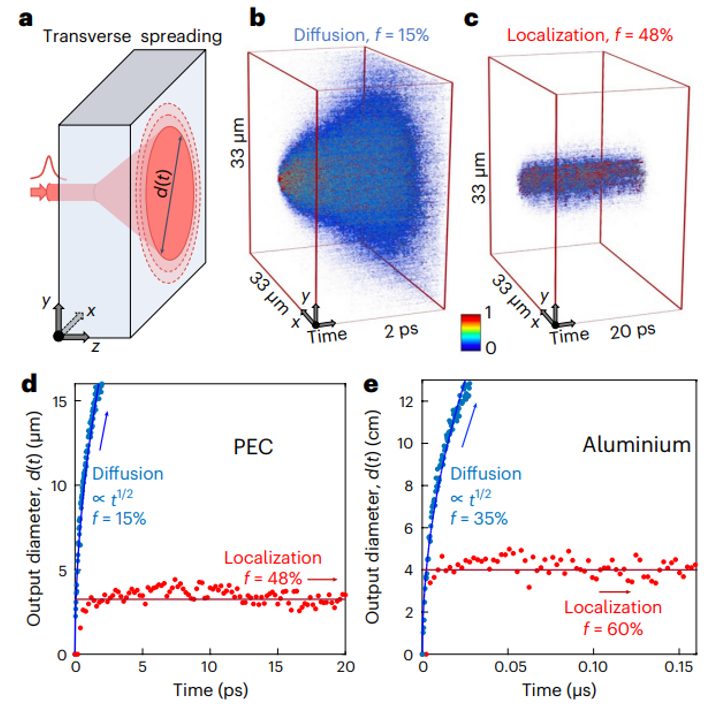

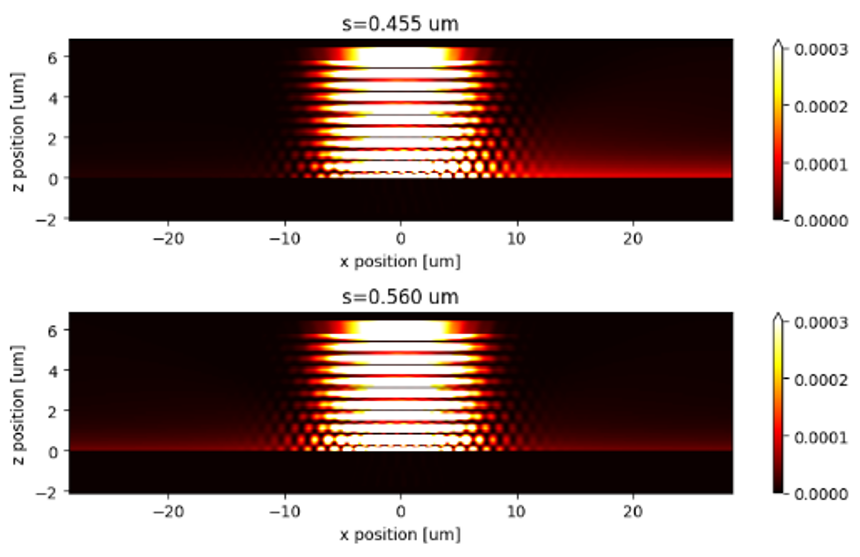

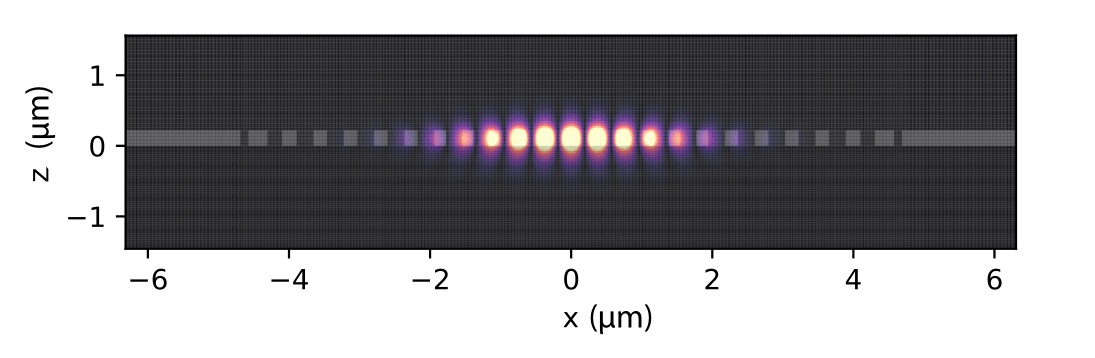

A notable example of such advancement is the study led by Dr. Janderson Rodrigues and Dr. Utsav Dave from Professor Michal Lipson’s team at Columbia University, as detailed in a recent publication in Nature Communications. This research introduces a new dielectric waveguiding mechanism. Their novel waveguide design effectively traps light within materials of low refractive index on a chip. The study reveals that at a specific threshold, the transverse wavevector transitions from imaginary to real, consequently forming an optical mode that remains consistent and scale-independent, regardless of the geometry. This phenomenon results in significant light localization within a low-index layer, presenting a substantial advancement in the field.
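The threshold described above can be illustrated with a few lines of Python. This is only a minimal sketch, not the authors' model: it assumes the standard slab-waveguide relation k_x = k0·sqrt(n_layer² − n_eff²) for the transverse wavevector inside a layer, and the index value 1.44 and wavelength 1.55 µm are hypothetical numbers chosen for illustration. When the effective index drops from above the layer index to below it, k_x changes from imaginary (evanescent field) to real (oscillating field); exactly at the threshold it is zero and the field in the layer is flat.

```python
import cmath
import math


def transverse_wavevector(n_layer, n_eff, wavelength_um):
    """Transverse wavevector k_x = k0 * sqrt(n_layer^2 - n_eff^2), in rad/um.

    The result is complex: purely imaginary when n_eff > n_layer,
    purely real when n_eff < n_layer, and zero when they are equal.
    """
    k0 = 2 * math.pi / wavelength_um  # free-space wavenumber
    return k0 * cmath.sqrt(n_layer**2 - n_eff**2)


def field_character(n_layer, n_eff, tol=1e-12):
    """Classify the field shape inside the layer from the sign of n_layer^2 - n_eff^2."""
    diff = n_layer**2 - n_eff**2
    if abs(diff) < tol:
        return "flat"  # k_x = 0: the threshold condition
    return "oscillatory" if diff > 0 else "evanescent"


if __name__ == "__main__":
    n_low = 1.44        # hypothetical low-index layer (assumption)
    wavelength = 1.55   # um, telecom band (assumption)
    for n_eff in (1.50, 1.44, 1.40):
        kx = transverse_wavevector(n_low, n_eff, wavelength)
        print(n_eff, field_character(n_low, n_eff), kx)
```

In this picture, the flat case does not depend on the layer width, which is one way to read the scale-independent behavior reported in the study.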

The waveguide design presented in the study is highly intriguing. We replicated the simulations described in the original paper, presenting them as a public example. Our simulation results align closely with both the theoretical predictions and the experimental observations reported in the study.

We had the privilege of interviewing Dr. Janderson Rodrigues and Dr. Utsav Dave, who graciously offered insights into their research. We are immensely grateful for the time and effort they devoted to this discussion. The following is the complete interview transcript, encompassing the questions and their insightful responses.

1. What inspired the initial concept for the scale-invariant waveguide design in the paper?

We were working on an unrelated project in which the goal was to reduce the evanescent fields in order to avoid the coupling between parallel waveguides. By analyzing the corresponding equations, it turns out that it is easier to increase the evanescent field instead of decreasing it. At the limit, the field becomes flat before it becomes radiation modes. This inflection point is exactly the cut-off condition of the asymmetric waveguide and therefore is an unstable point (the critical angle). We realized that two parallel waveguides operating at this condition would lead to a flat mode. Later we found out that something similar has been predicted in plasmonic waveguides, which helped us to understand the effect with the huge advantage of using only (lossless) dielectric materials.

2. What advantages does the scale-invariant waveguide have over conventional dielectric waveguides?

Janderson: One of the main advantages of the scale-invariant waveguide is the increasing overlap of the field intensity with low-index materials, mainly in heterogeneous structures, due to the limited number of alternative approaches. For this purpose, beyond the critical point is even more attractive. Furthermore, a flat mode distribution means a uniform distribution of light throughout the middle material (large mode effective area), which can be interesting to avoid non-linear effects or tailor the saturation of gain medium, for instance. Besides that, the starting point of increasing the evanescent coupling (i.e., shorter directional couplers) still can be explored.

Utsav: Besides, since the scale-invariant waveguide keeps the same effective index as the width of the low-index material is changed, devices with different dimensions can retain the same overall phase shift, which can be useful in optimizing different structures without worrying about realigning phase shifts.

3. How do the uniform field distribution and non-Gaussian spatial profile in your waveguides impact their potential applications in integrated photonics? How do you envision the application of scale-invariant waveguides in the future?

Although the paper shows the experimental demonstration in the telecommunication range, the same concept can be applied to other regions of the electromagnetic spectrum, for example, the visible or the terahertz range. We also note that the proposed structure might find applications in electro-optic modulation, nonlinear integrated photonics, and temperature control.

4. What are you working on currently?

Janderson: Currently, I am working in the private sector in the field of integrated photonics and trying to find a good balance between my interest in basic science with the necessity of product development and engineering.

Utsav: I am working in a proteomics company where we use integrated photonics for single-molecule sensing of proteins, helping to usher in the next revolution beyond genomics.

5. Can you give some suggestions to students who want to work in the field of photonics?

Janderson: If I can give any suggestions for students in the field, it would be to use toy models. The fact that we can play with these relatively simple equations of 1D slab waveguides before going to more rigorous numerical simulations was one of the greatest pieces of advice that was given to me.

Utsav: Photonics is both a great applications-oriented platform as well as a playground for exploring all kinds of cool physics like nonlinear dynamics, non-Hermitian or topological physics, etc. So you may not know from the beginning what kind of work/topic you like and the best way to know is to try a bunch of things. Try and get a broad overview of all aspects - from theory to fabrication and experiment. This will help you whether you end up in industry or academia (or something else). It’s also important to make sure that the research group culture where you will be spending your master’s or PhD matches your personality and is nurturing - that by far is the most important factor to success.
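As an illustration of the kind of 1D slab-waveguide toy model mentioned in the answers above, here is a minimal Python sketch. It is not taken from the paper or the interview: it assumes a symmetric three-layer slab and solves the textbook even-TE-mode condition u·tan(u) = sqrt(V² − u²) by bisection, where u = k_x·d/2 and V = (k0·d/2)·sqrt(n_core² − n_clad²). The function name and the material values (silicon-like core, silica-like cladding, 1.55 µm wavelength) are hypothetical choices for the example.

```python
import math


def slab_te0_neff(n_core, n_clad, thickness_um, wavelength_um, iterations=200):
    """Effective index of the fundamental TE mode of a symmetric slab waveguide.

    Solves u * tan(u) = sqrt(V^2 - u^2) for u in (0, min(V, pi/2)) by bisection.
    """
    half_k0d = math.pi * thickness_um / wavelength_um  # k0 * d / 2
    v = half_k0d * math.sqrt(n_core**2 - n_clad**2)    # normalized frequency

    def mismatch(u):
        # Negative at u = 0, positive at the upper bound, and increasing between.
        return u * math.tan(u) - math.sqrt(max(v**2 - u**2, 0.0))

    lo, hi = 0.0, min(v, math.pi / 2 - 1e-12)
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if mismatch(mid) < 0:
            lo = mid
        else:
            hi = mid
    u = 0.5 * (lo + hi)
    kx_over_k0 = u / half_k0d  # transverse wavevector in units of k0
    return math.sqrt(n_core**2 - kx_over_k0**2)


if __name__ == "__main__":
    # Hypothetical values: silicon-like core, silica-like cladding, telecom wavelength.
    for d in (0.10, 0.22, 0.40):
        print(d, slab_te0_neff(3.48, 1.44, d, 1.55))
```

Sweeping the thickness in a loop like this takes milliseconds, which is what makes such models useful for building intuition before moving on to full numerical simulations.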

Author Bios

Janderson Rocha Rodrigues was born in Sao Paulo, Brazil, in 1980. He received the Microelectronics Engineering Diploma from the Sao Paulo State Technological College (FATECSP), Sao Paulo, Brazil, in 2008 and the M.S. and Ph.D. degrees in Space Science & Technologies Engineering from the Aeronautics Institute of Technology (ITA), Sao Jose dos Campos, SP, Brazil in 2011 and 2019. From 2018 to 2019, he was a Ph.D. visiting student at Michal Lipson’s group at Columbia University, NY, USA, where he rejoined as a postdoctoral Research Scientist from 2019 to 2023. Since 2023, he has been a Research Scientist at Corning Inc., NY, USA. His research interests include applications of integrated photonics to communications as well as to sensing and instrumentation.

Utsav D. Dave, originally from western India, completed his B.Tech. in 2010 at the Indian Institute of Technology, Guwahati, nestled in the foothills of the Himalayas. He then completed an M.S. in Photonics jointly from a group of UK and European universities through the Erasmus Mundus program, followed by a PhD in integrated silicon photonics from Ghent University, Belgium. He moved to Michal Lipson’s group at Columbia University as a postdoc in 2017, where he worked on subwavelength and non-Hermitian photonics, LiDAR, and frequency combs, before joining Quantum-Si in 2022, where he currently works on photonics for proteomics using single-molecule spectroscopy.